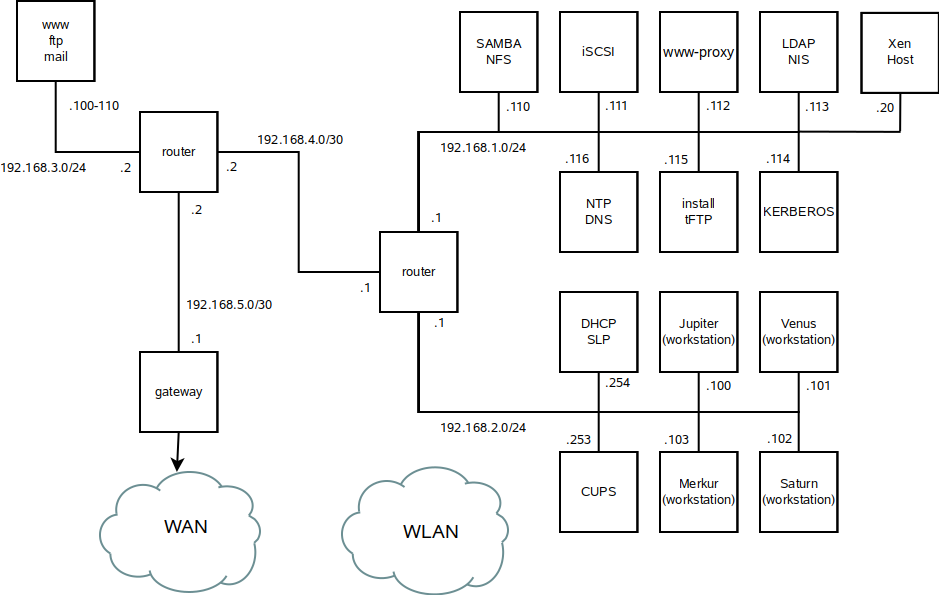


openSUSE Leap 15.2

Reference

- About This Guide

- I Advanced Administration

- 1 YaST in Text Mode

- 2 Managing Software with Command Line Tools

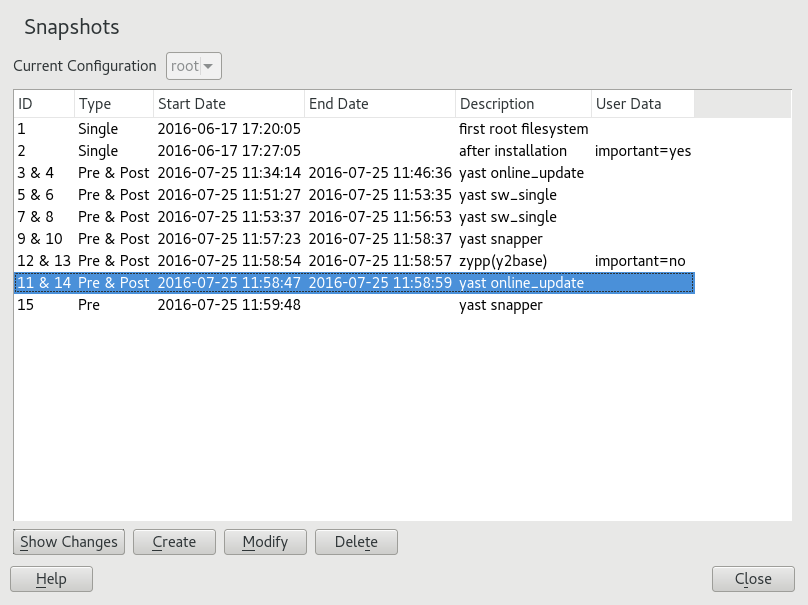

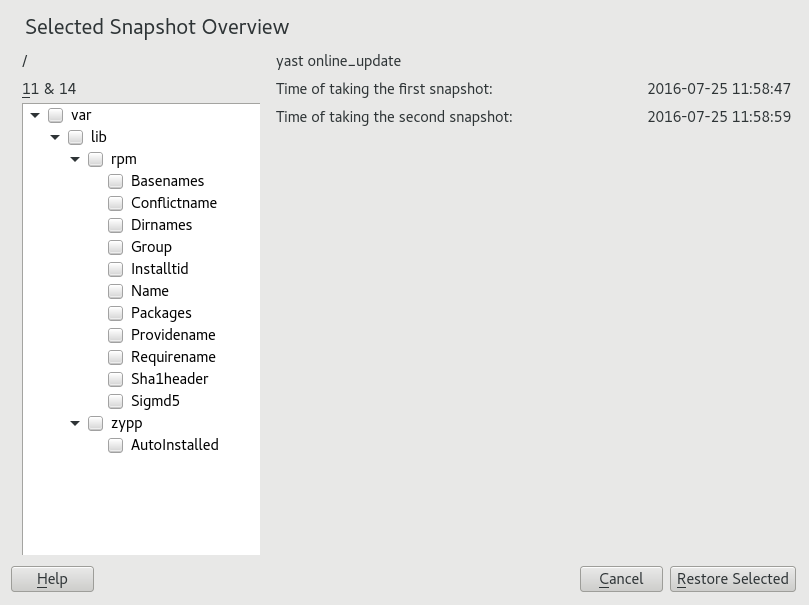

- 3 System Recovery and Snapshot Management with Snapper

- 3.1 Default Setup

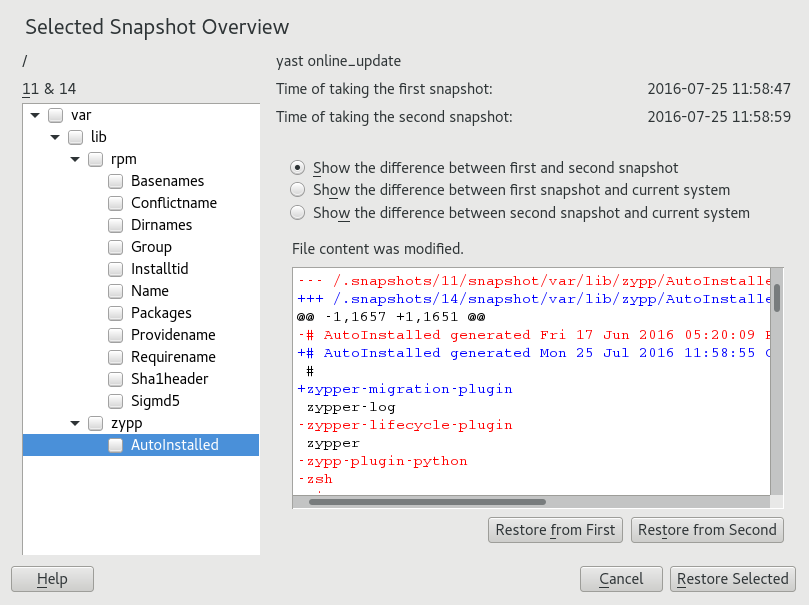

- 3.2 Using Snapper to Undo Changes

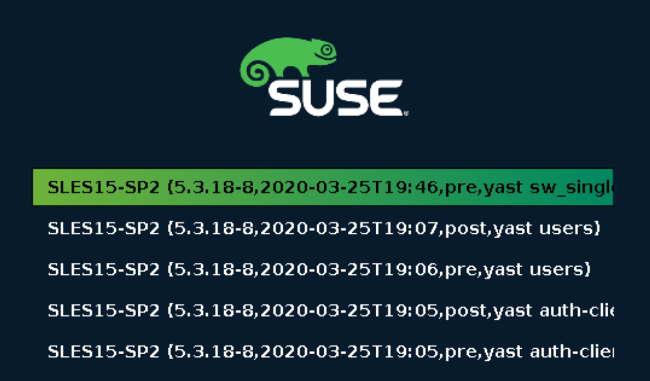

- 3.3 System Rollback by Booting from Snapshots

- 3.4 Enabling Snapper in User Home Directories

- 3.5 Creating and Modifying Snapper Configurations

- 3.6 Manually Creating and Managing Snapshots

- 3.7 Automatic Snapshot Clean-Up

- 3.8 Showing Exclusive Disk Space Used by Snapshots

- 3.9 Frequently Asked Questions

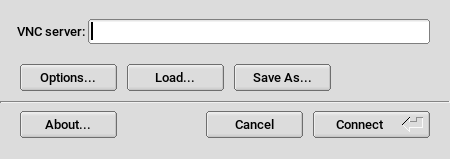

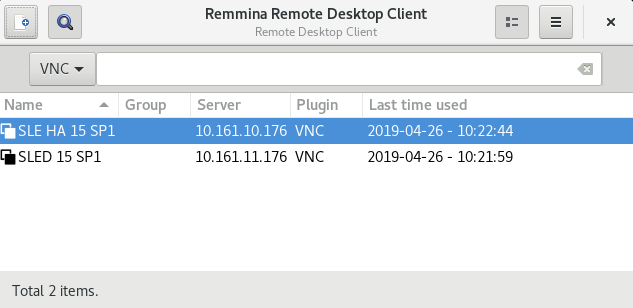

- 4 Remote Graphical Sessions with VNC

- 5 Expert Partitioner

- 6 Installing Multiple Kernel Versions

- 7 Graphical User Interface

- II System

- 8 32-Bit and 64-Bit Applications in a 64-Bit System Environment

- 9 Introduction to the Boot Process

- 10 The systemd Daemon

- 11 journalctl: Query the systemd Journal

- 12 The Boot Loader GRUB 2

- 13 Basic Networking

- 13.1 IP Addresses and Routing

- 13.2 IPv6—The Next Generation Internet

- 13.3 Name Resolution

- 13.4 Configuring a Network Connection with YaST

- 13.5 NetworkManager

- 13.6 Configuring a Network Connection Manually

- 13.7 Basic Router Setup

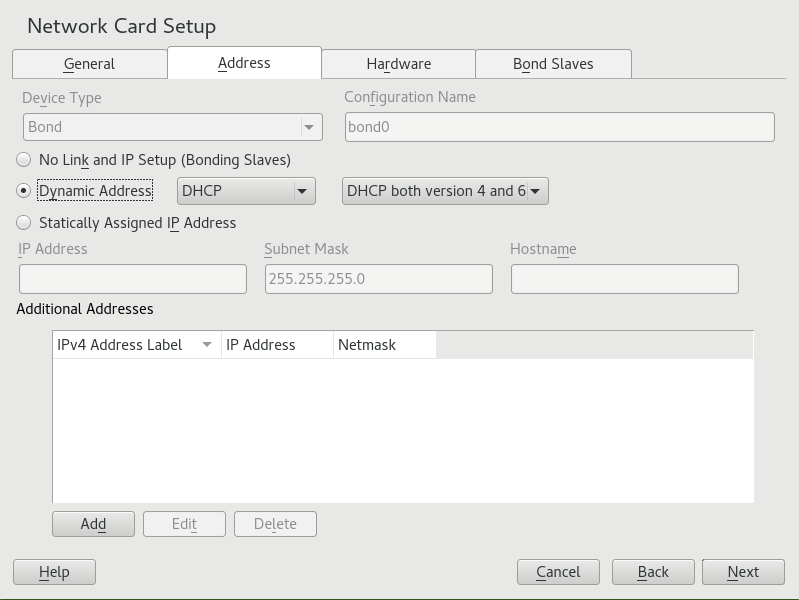

- 13.8 Setting Up Bonding Devices

- 13.9 Setting Up Team Devices for Network Teaming

- 13.10 Software-Defined Networking with Open vSwitch

- 14 UEFI (Unified Extensible Firmware Interface)

- 15 Special System Features

- 16 Dynamic Kernel Device Management with udev

- 16.1 The /dev Directory

- 16.2 Kernel uevents and udev

- 16.3 Drivers, Kernel Modules and Devices

- 16.4 Booting and Initial Device Setup

- 16.5 Monitoring the Running udev Daemon

- 16.6 Influencing Kernel Device Event Handling with udev Rules

- 16.7 Persistent Device Naming

- 16.8 Files used by udev

- 16.9 For More Information

- III Services

- 17 SLP

- 18 Time Synchronization with NTP

- 19 The Domain Name System

- 20 DHCP

- 21 Samba

- 22 Sharing File Systems with NFS

- 23 On-Demand Mounting with Autofs

- 24 The Apache HTTP Server

- 24.1 Quick Start

- 24.2 Configuring Apache

- 24.3 Starting and Stopping Apache

- 24.4 Installing, Activating, and Configuring Modules

- 24.5 Enabling CGI Scripts

- 24.6 Setting Up a Secure Web Server with SSL

- 24.7 Running Multiple Apache Instances on the Same Server

- 24.8 Avoiding Security Problems

- 24.9 Troubleshooting

- 24.10 For More Information

- 25 Setting Up an FTP Server with YaST

- 26 Squid Caching Proxy Server

- 26.1 Some Facts about Proxy Servers

- 26.2 System Requirements

- 26.3 Basic Usage of Squid

- 26.4 The YaST Squid Module

- 26.5 The Squid Configuration File

- 26.6 Configuring a Transparent Proxy

- 26.7 Using the Squid Cache Manager CGI Interface (cachemgr.cgi)

- 26.8 Cache Report Generation with Calamaris

- 26.9 For More Information

- IV Mobile Computers

- A An Example Network

- B GNU Licenses

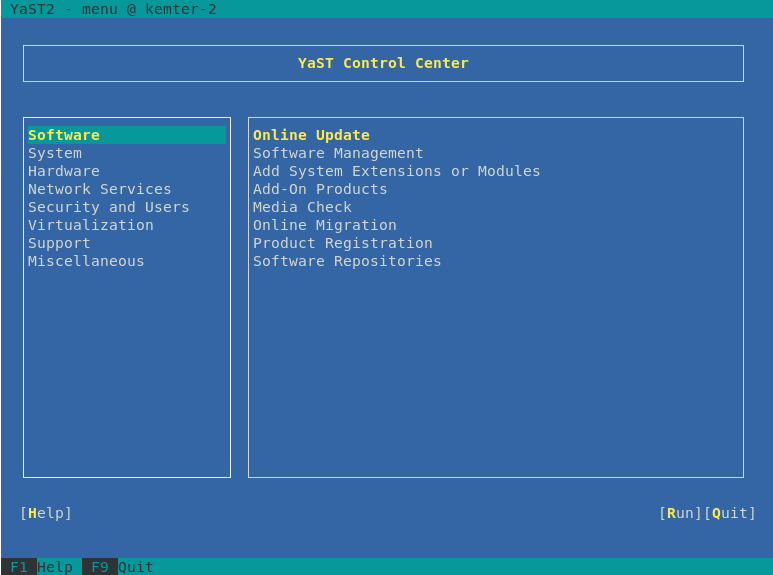

- 1.1 Main Window of YaST in Text Mode

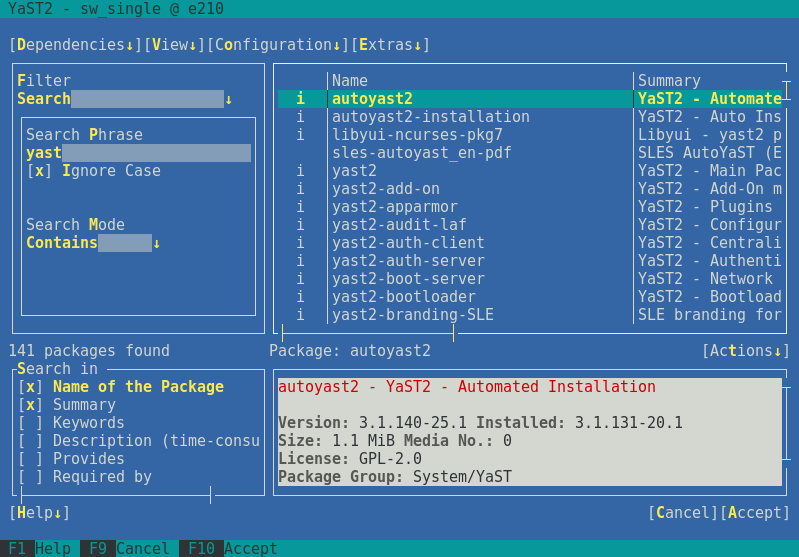

- 1.2 The Software Installation Module

- 3.1 Boot Loader: Snapshots

- 4.1 vncviewer

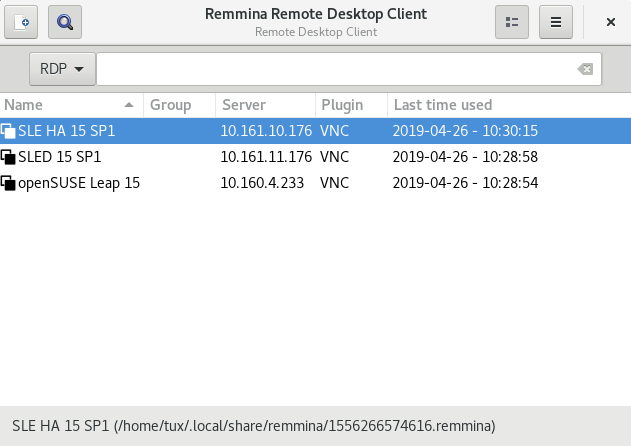

- 4.2 Remmina's Main Window

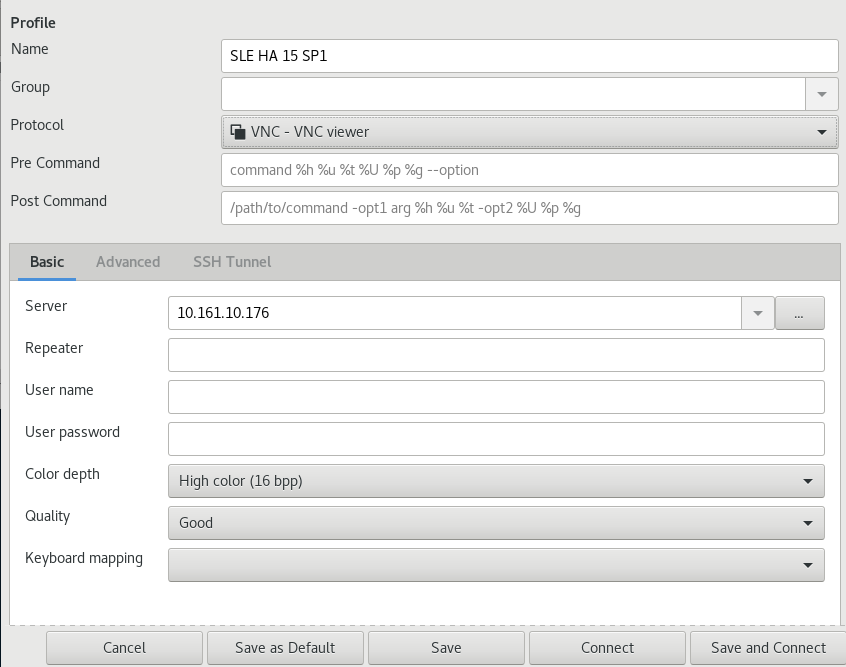

- 4.3 Remote Desktop Preference

- 4.4 Quick-starting

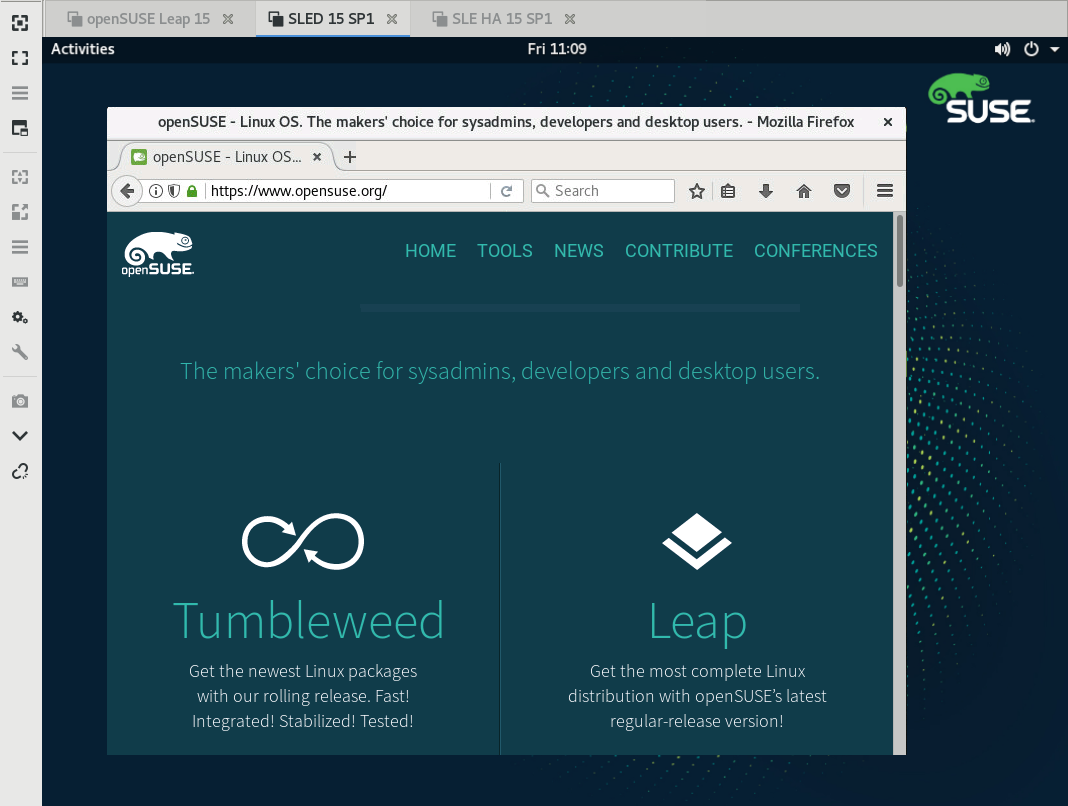

- 4.5 Remmina Viewing Remote Session

- 4.6 Reading Path to the Profile File

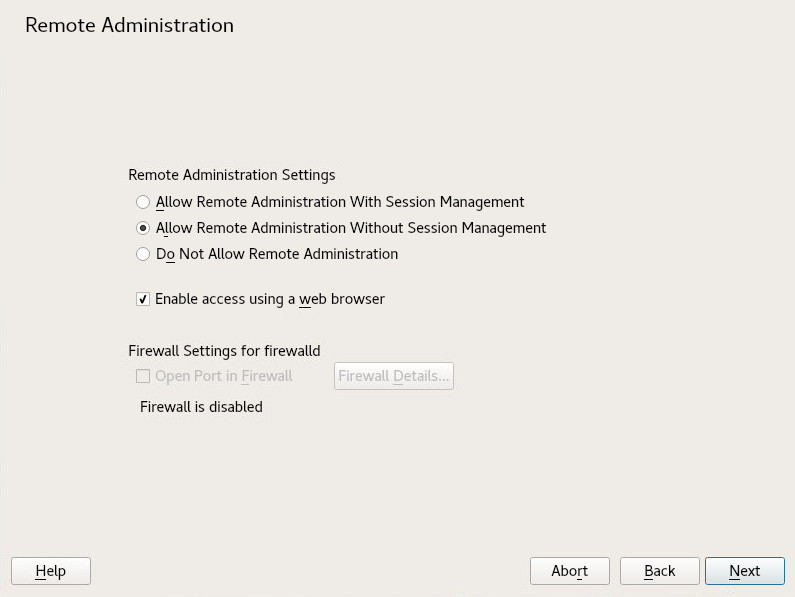

- 4.7 Remote Administration

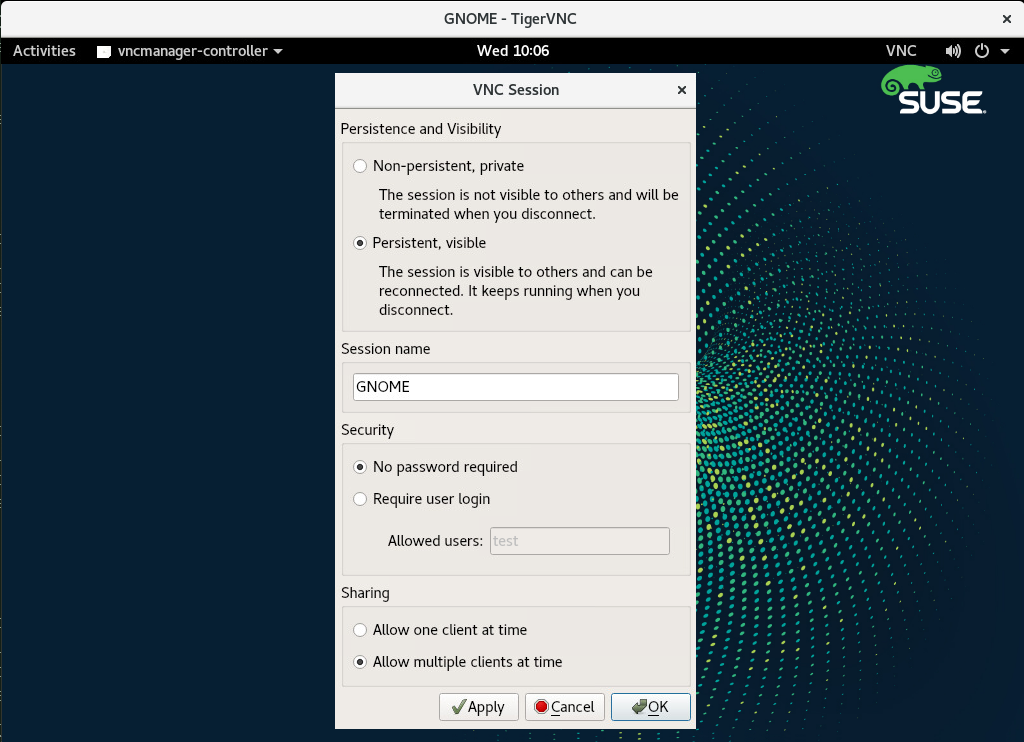

- 4.8 VNC Session Settings

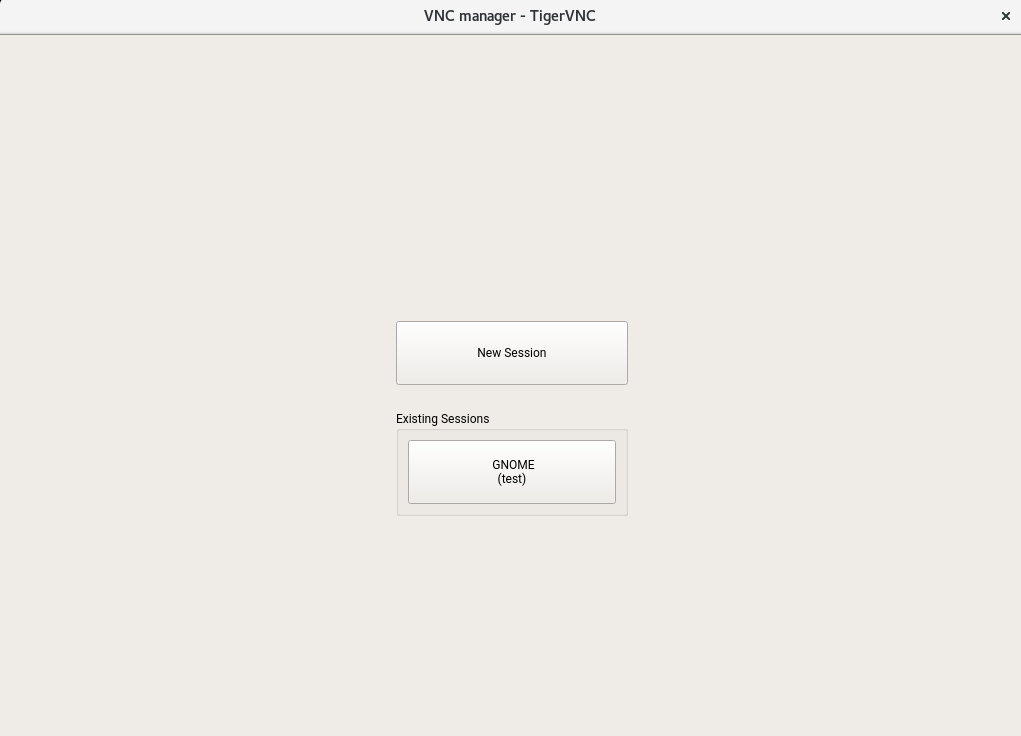

- 4.9 Joining a Persistent VNC Session

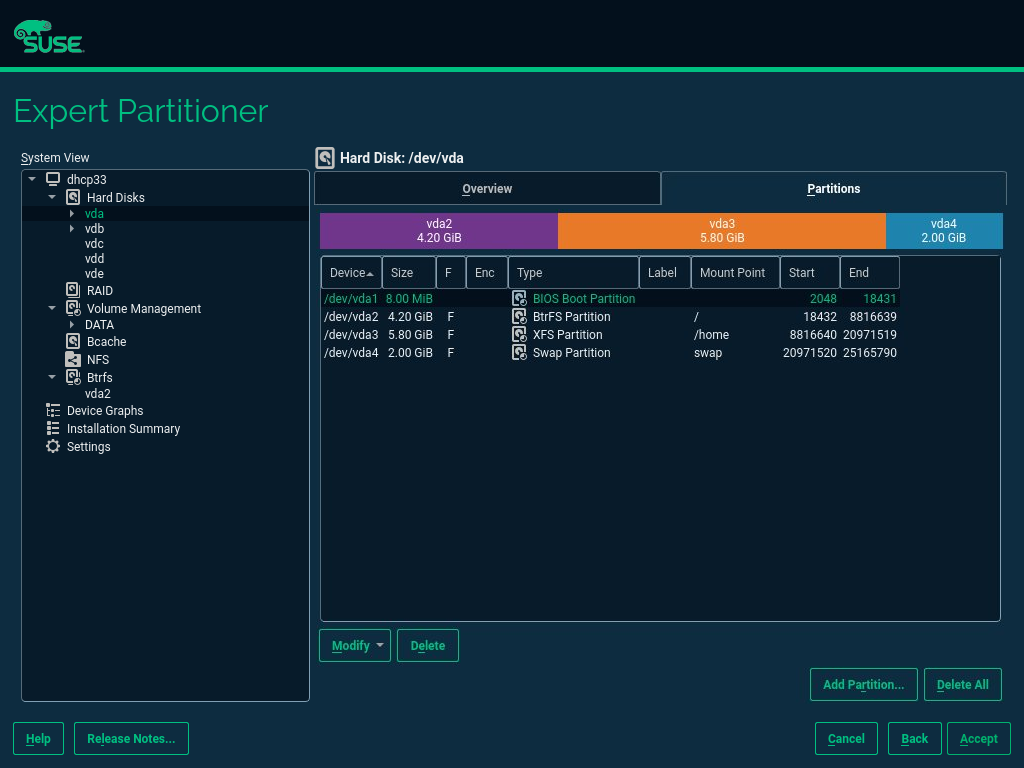

- 5.1 The YaST Partitioner

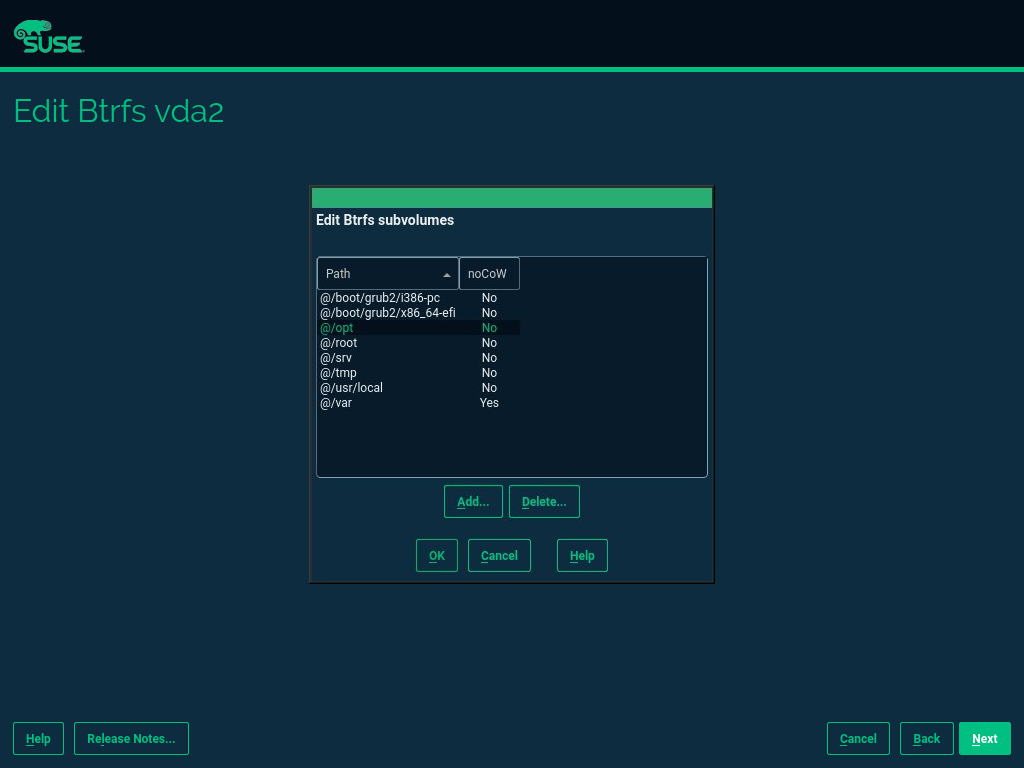

- 5.2 Btrfs Subvolumes in YaST Partitioner

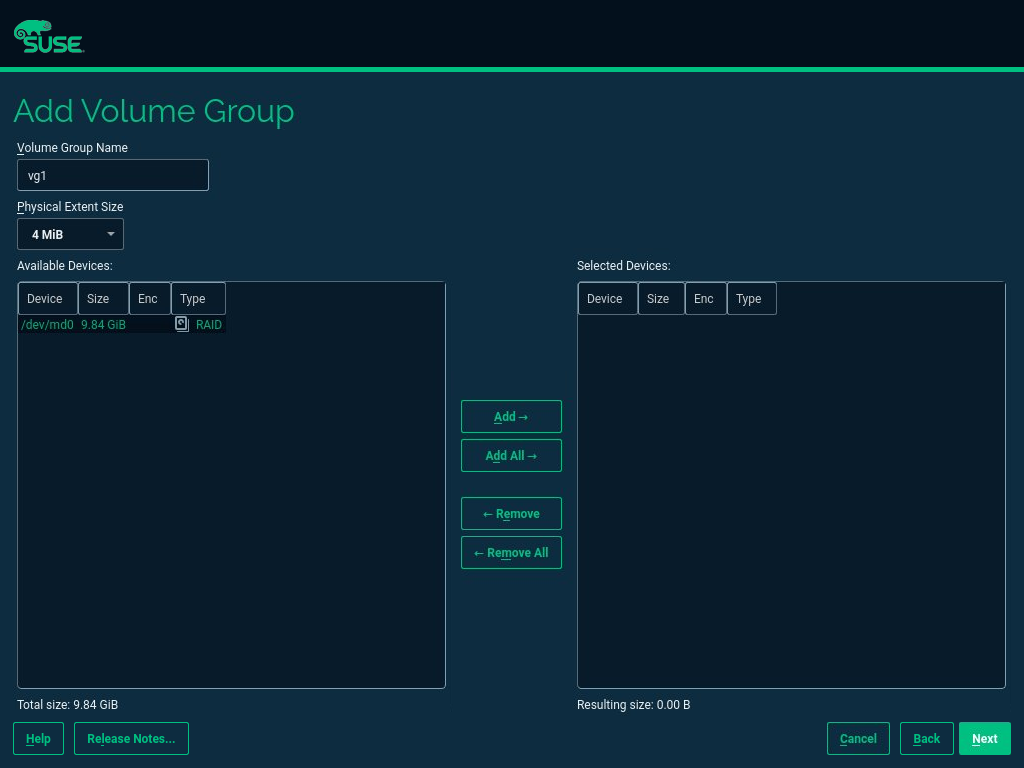

- 5.3 Creating a Volume Group

- 5.4 Logical Volume Management

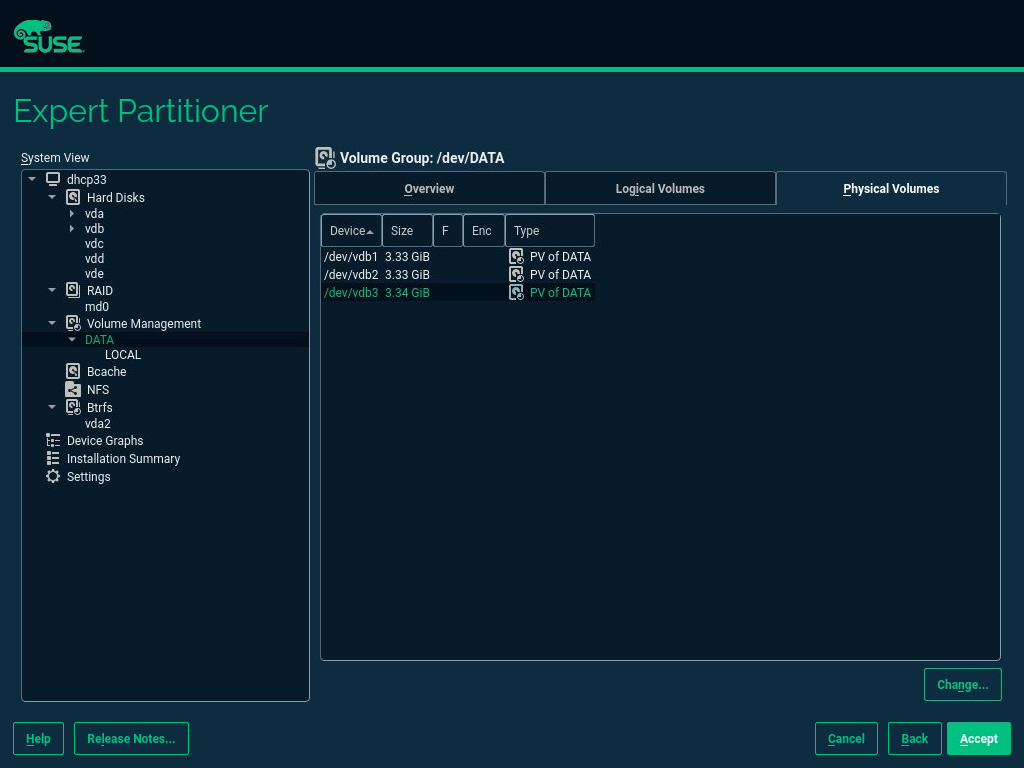

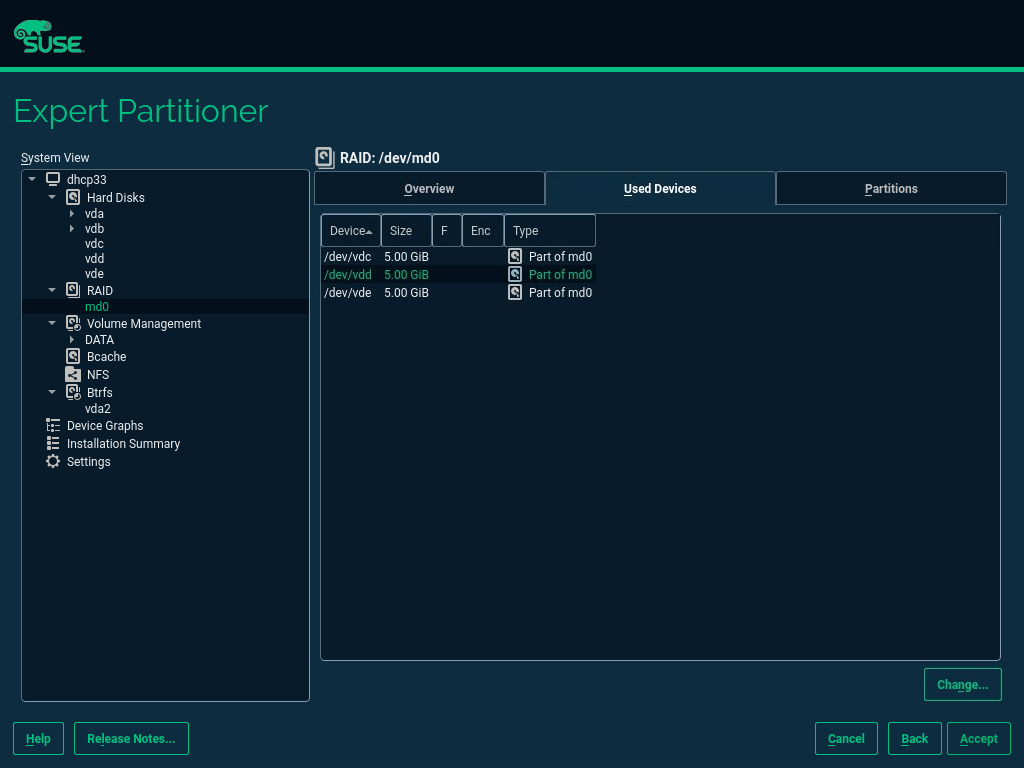

- 5.5 RAID Partitions

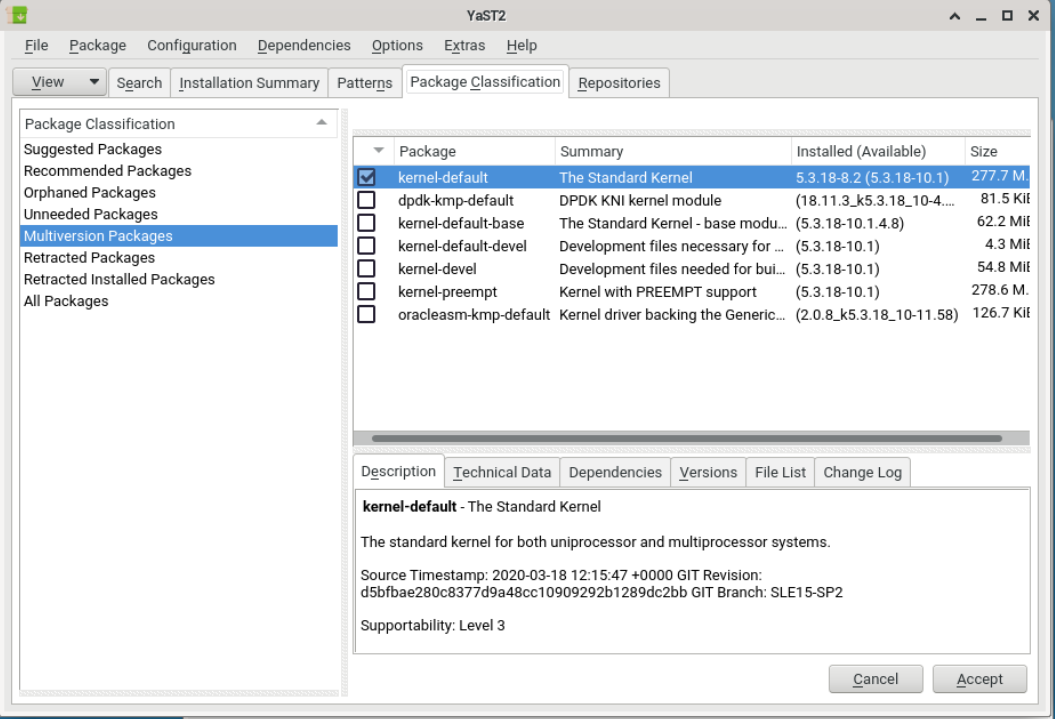

- 6.1 The YaST Software Manager: Multiversion View

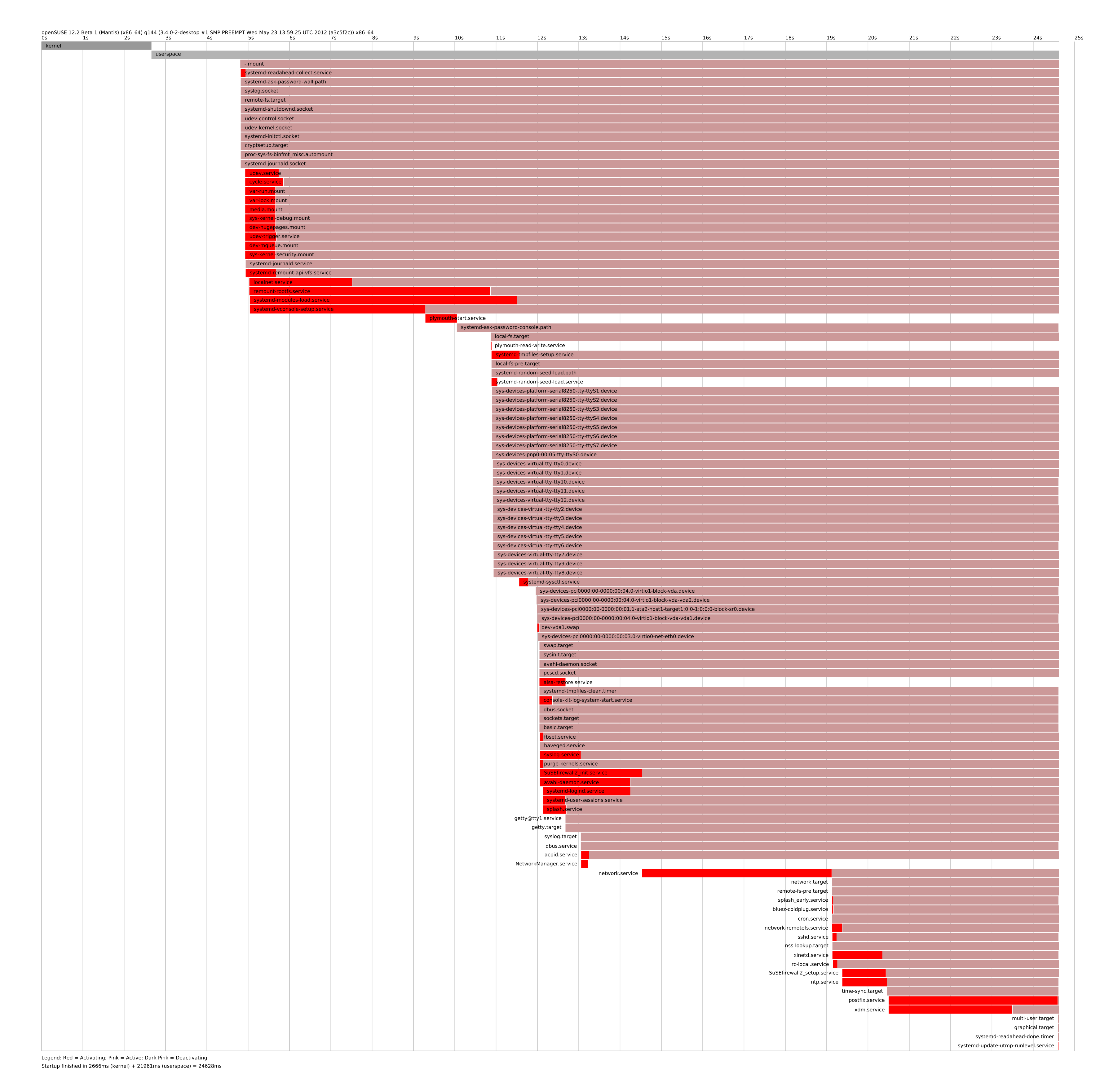

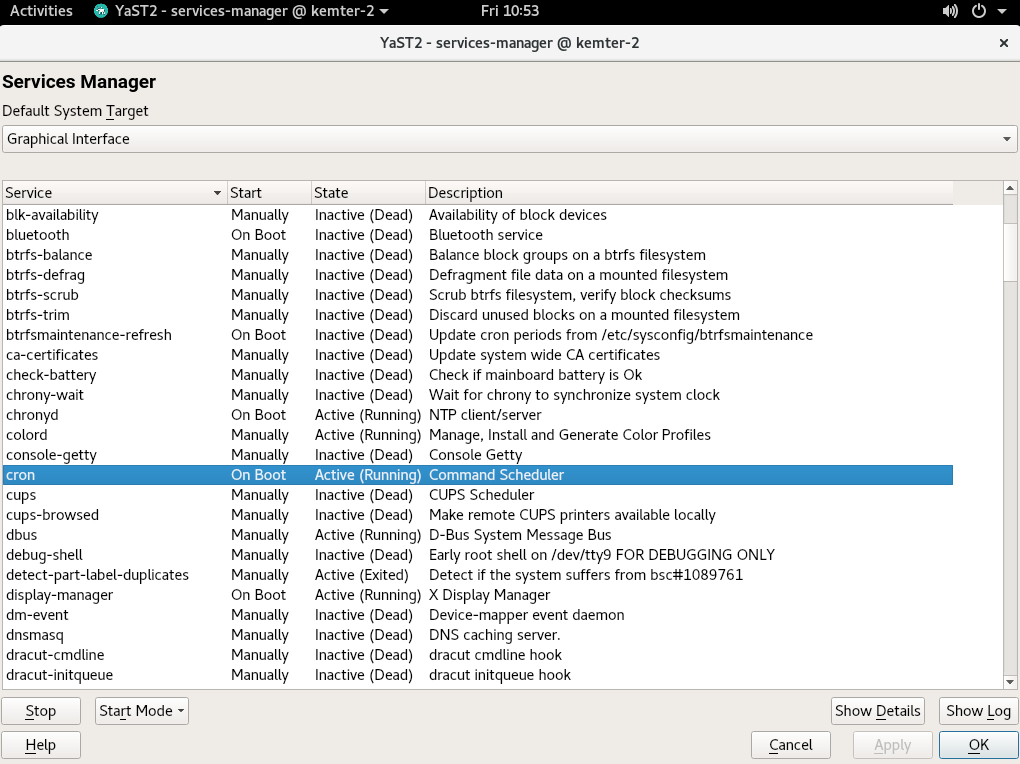

- 10.1 Services Manager

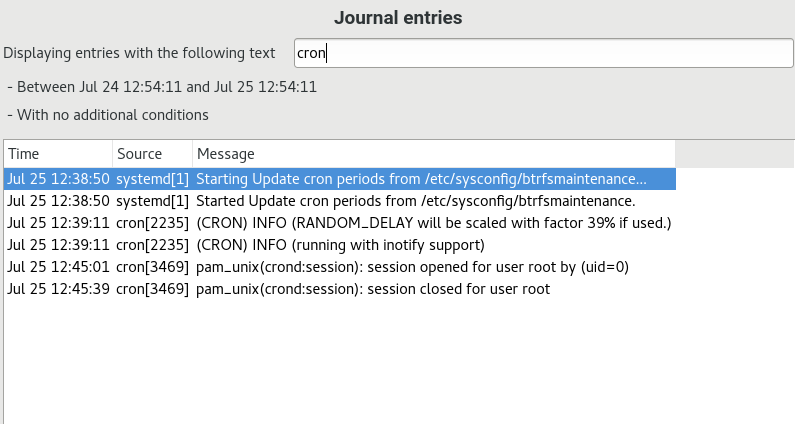

- 11.1 YaST systemd Journal

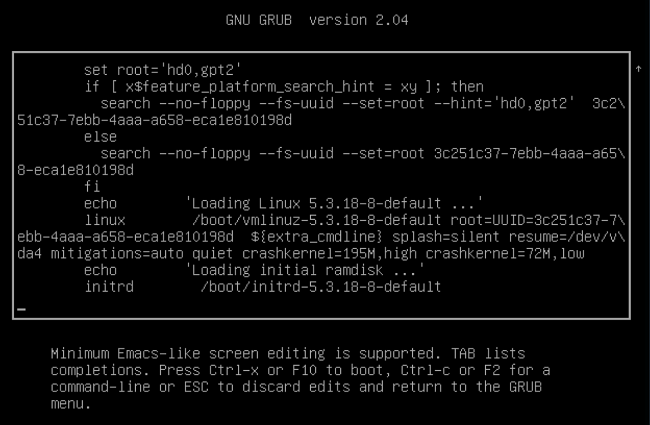

- 12.1 GRUB 2 Boot Editor

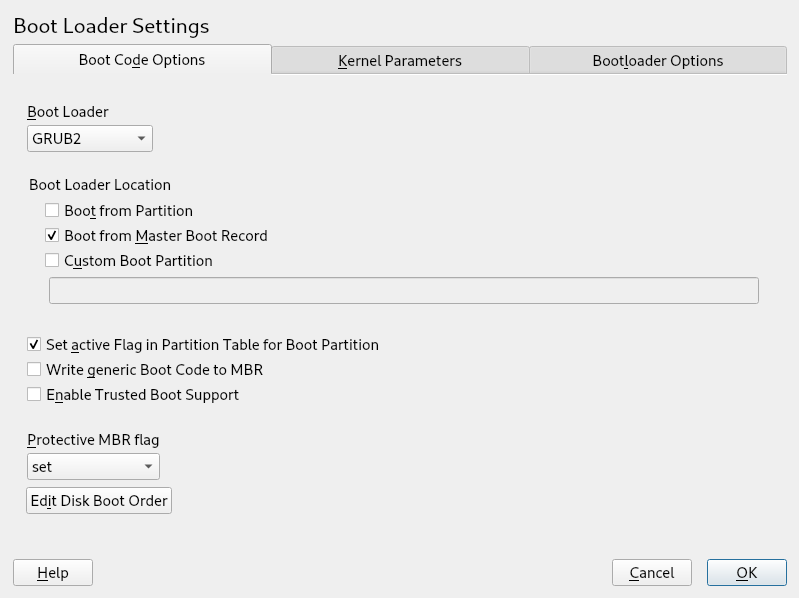

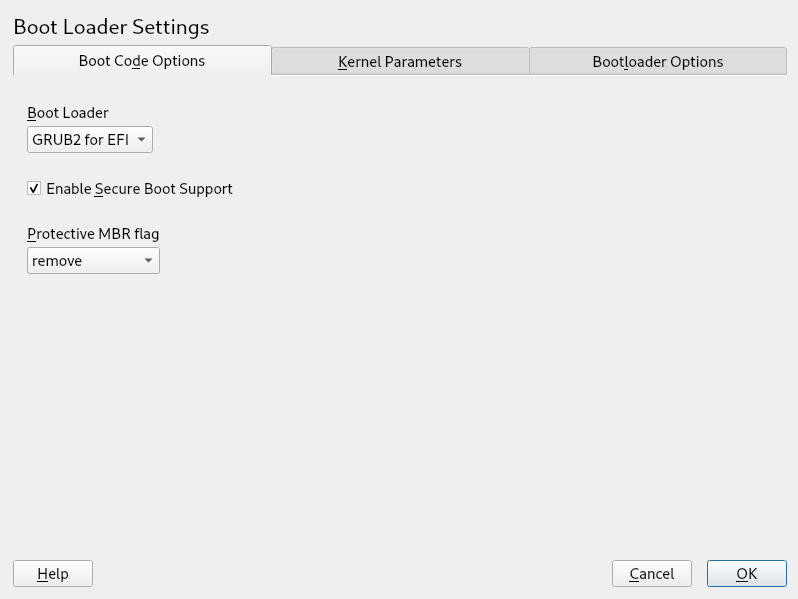

- 12.2 Boot Code Options

- 12.3 Code Options

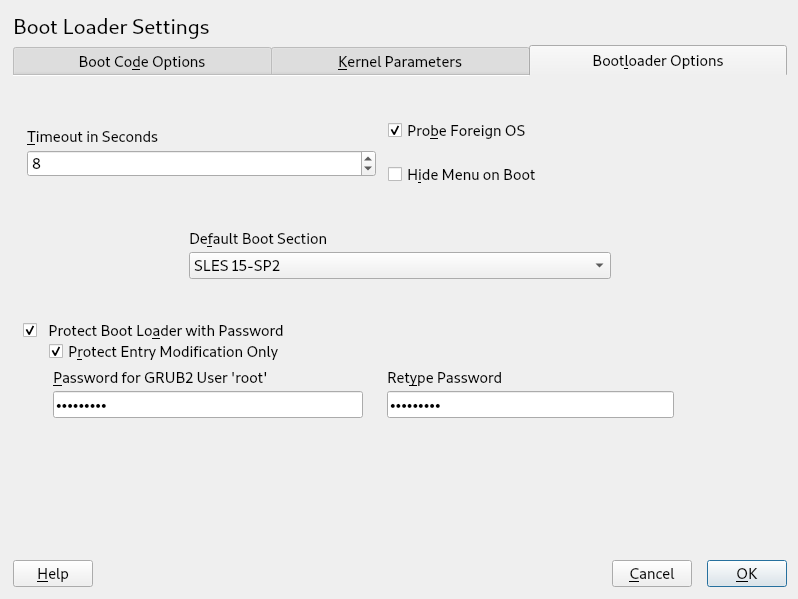

- 12.4 Boot Loader Options

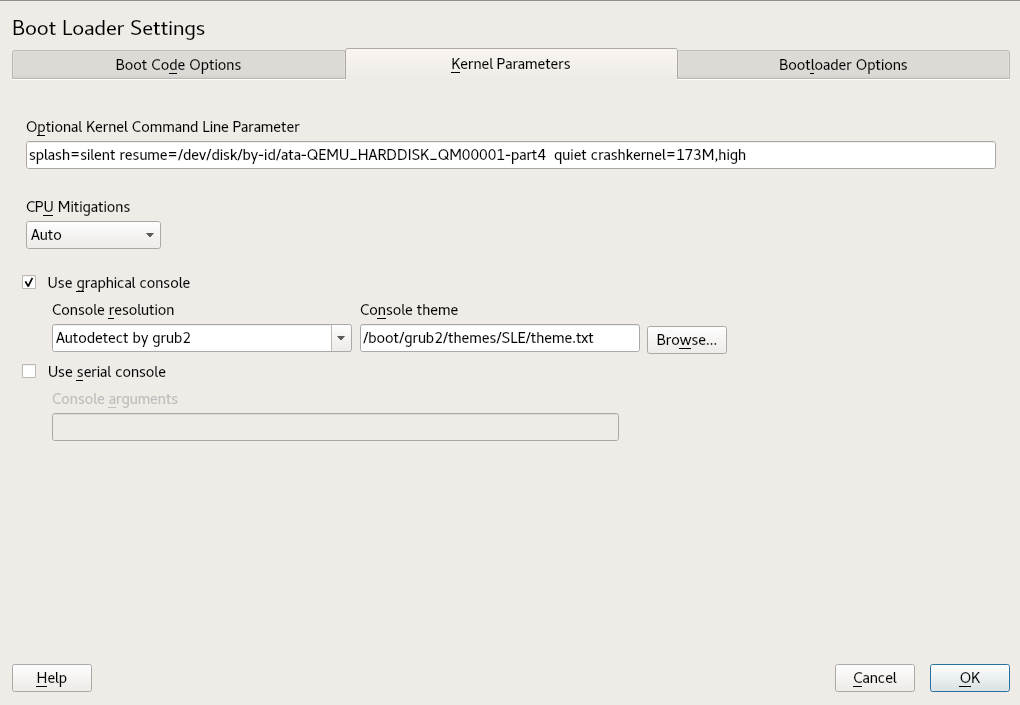

- 12.5 Kernel Parameters

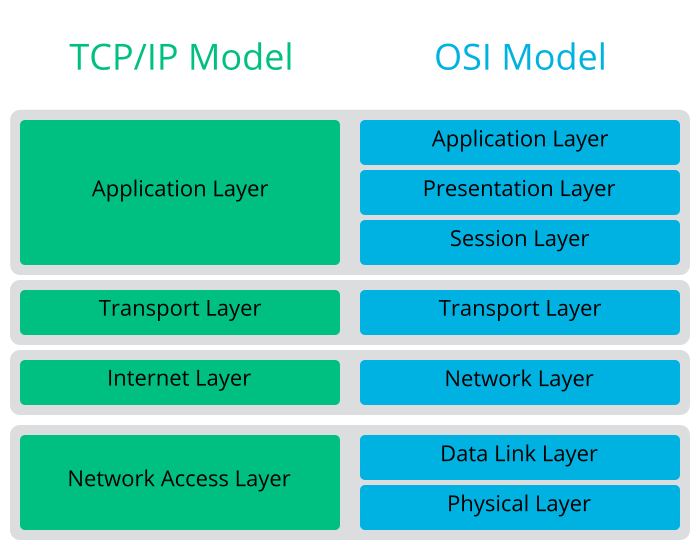

- 13.1 Simplified Layer Model for TCP/IP

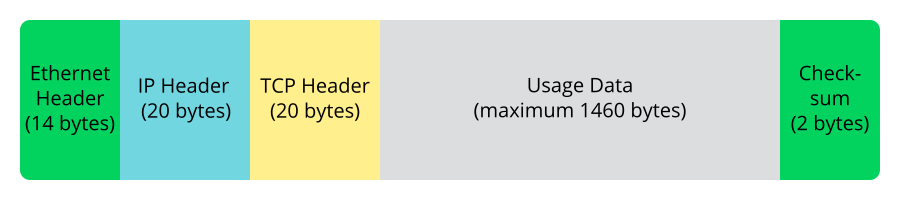

- 13.2 TCP/IP Ethernet Packet

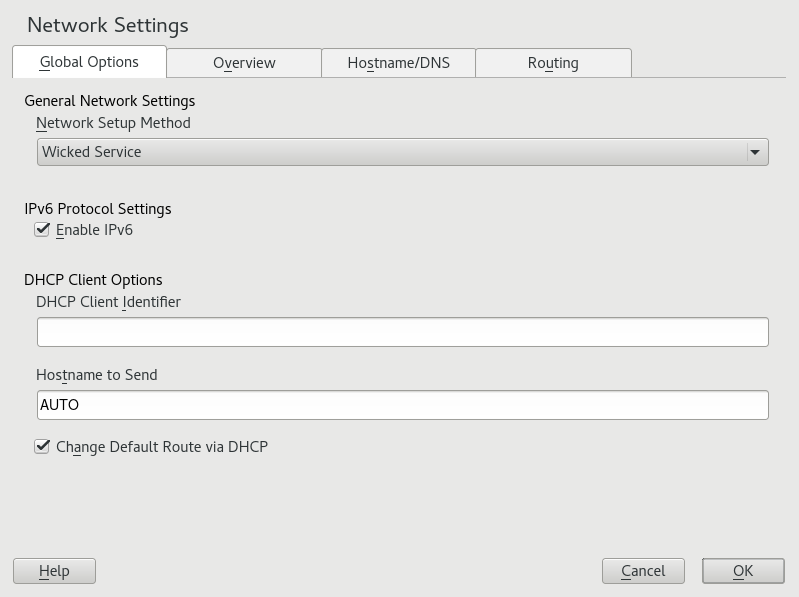

- 13.3 Configuring Network Settings

- 13.4

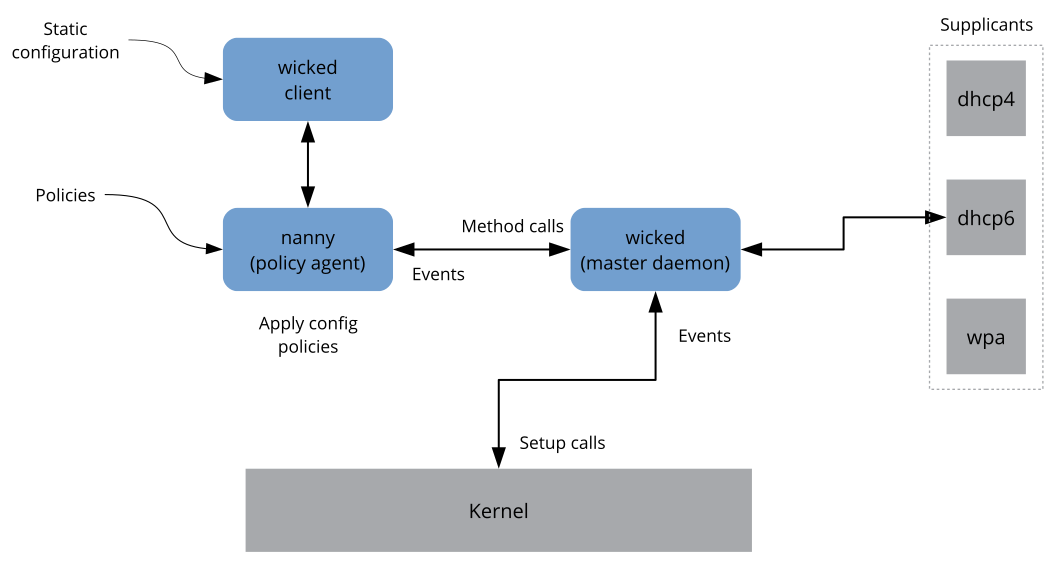

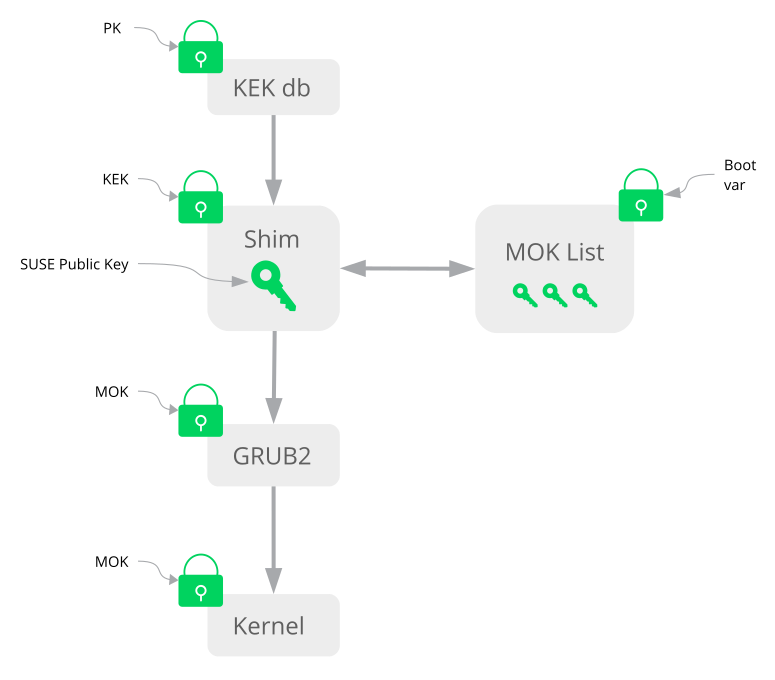

wickedarchitecture - 14.1 Secure Boot Support

- 14.2 UEFI: Secure Boot Process

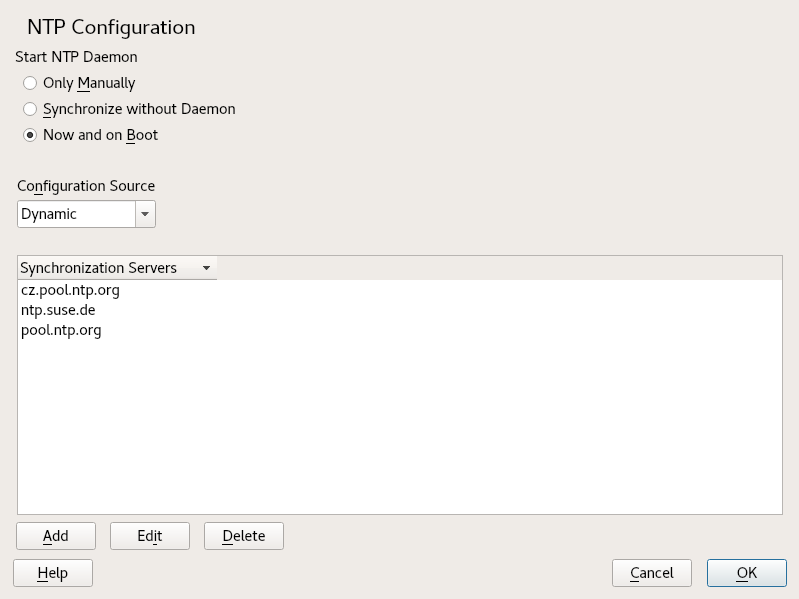

- 18.1 NTP Configuration Window

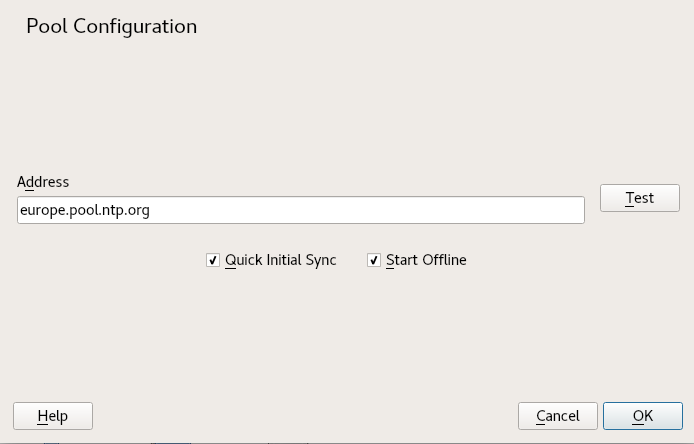

- 18.2 Adding a Time Server

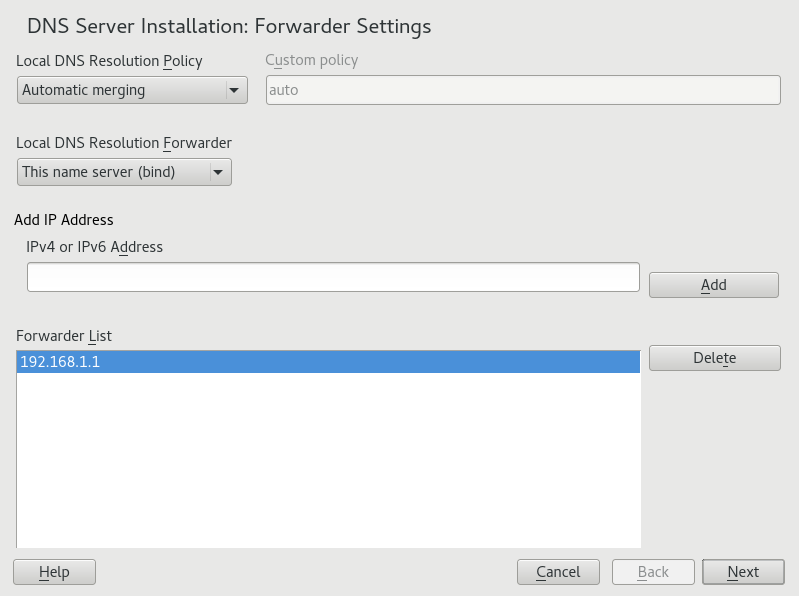

- 19.1 DNS Server Installation: Forwarder Settings

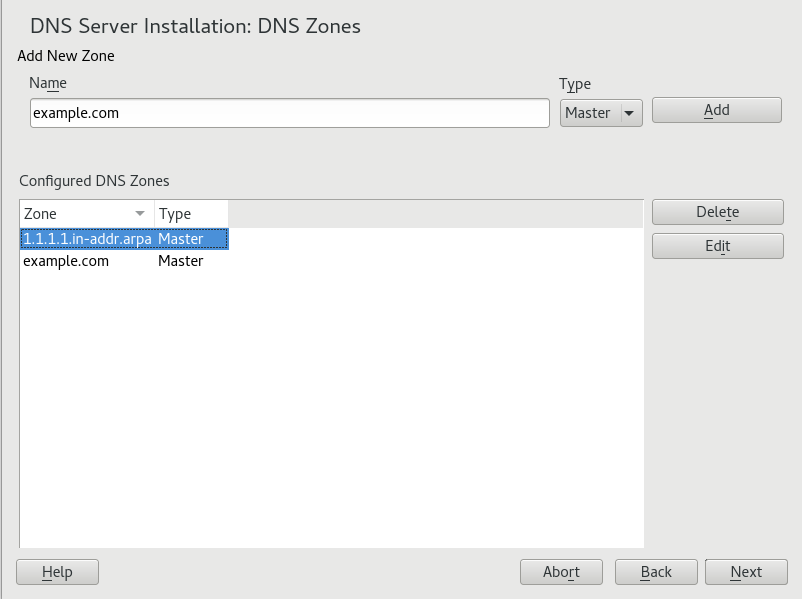

- 19.2 DNS Server Installation: DNS Zones

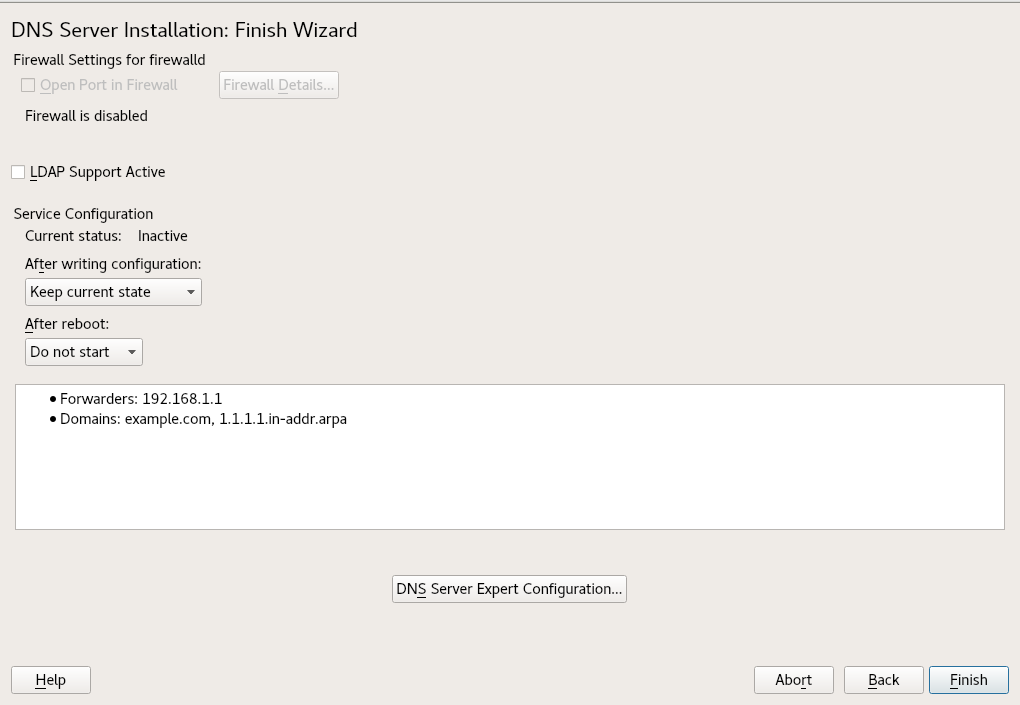

- 19.3 DNS Server Installation: Finish Wizard

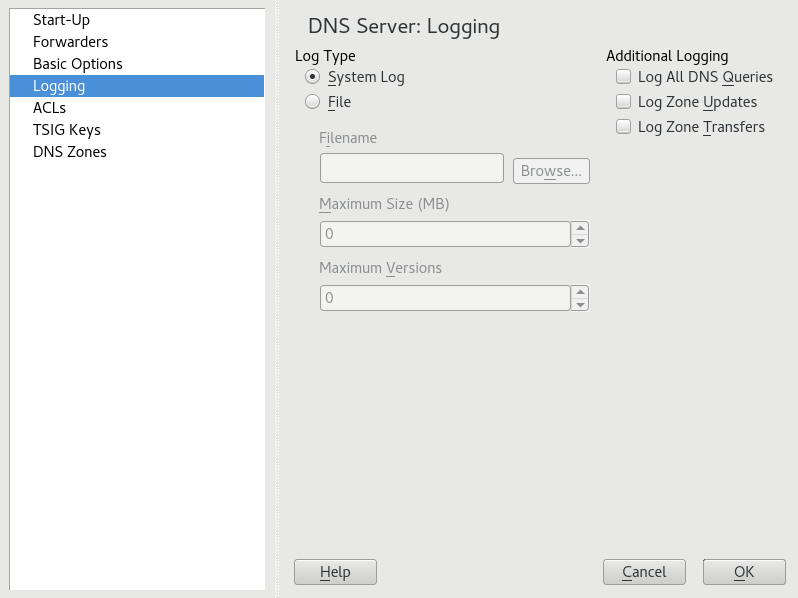

- 19.4 DNS Server: Logging

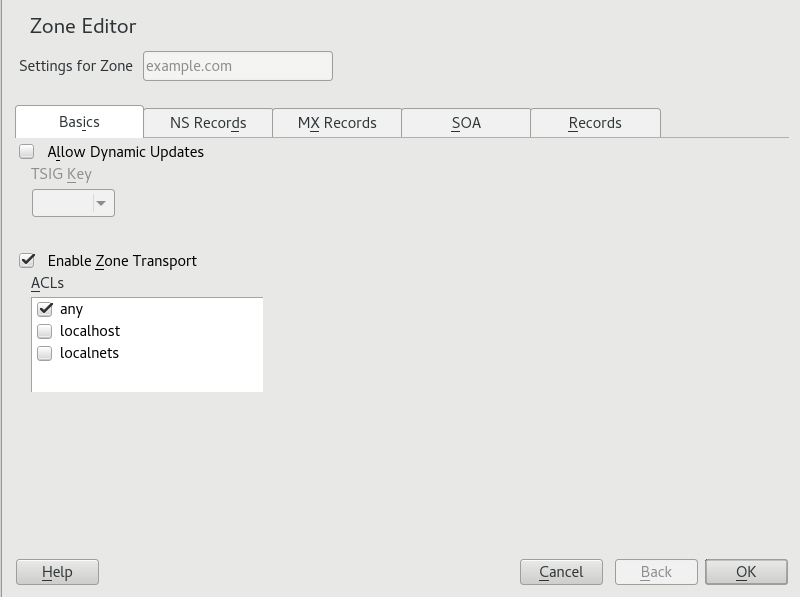

- 19.5 DNS Server: Zone Editor (Basics)

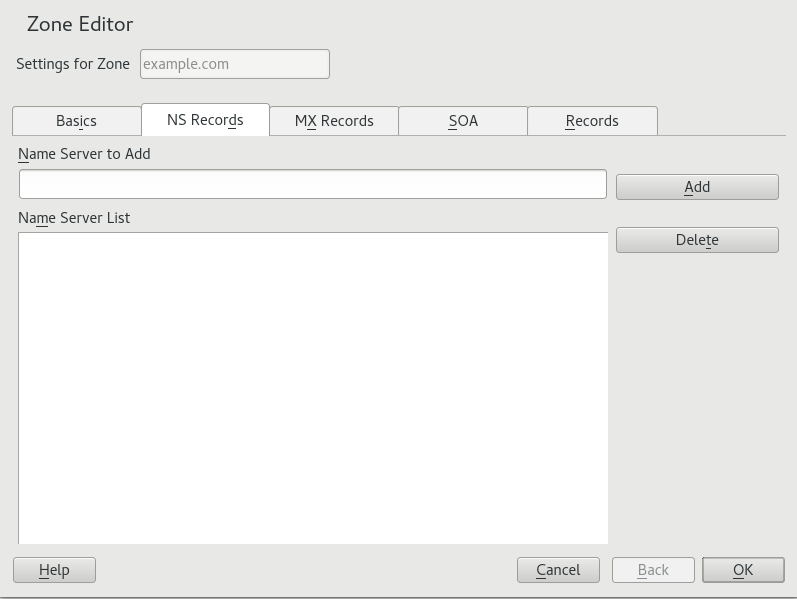

- 19.6 DNS Server: Zone Editor (NS Records)

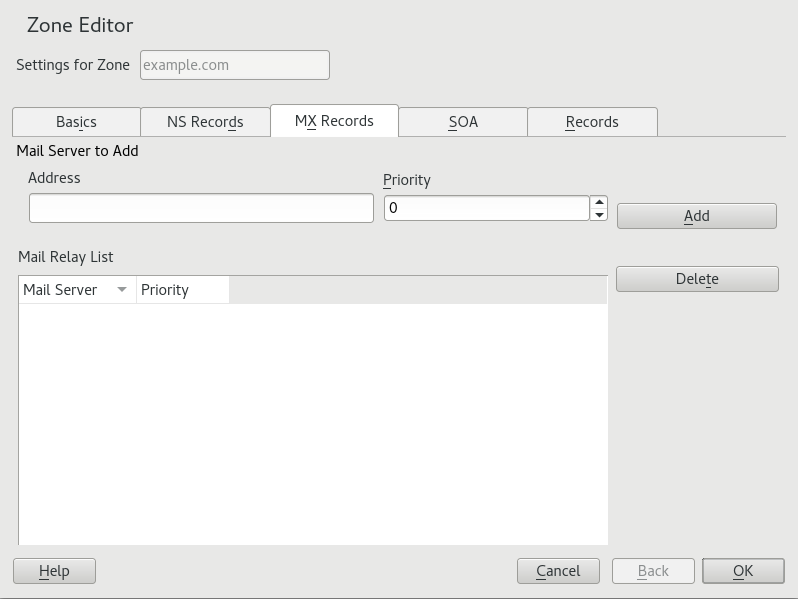

- 19.7 DNS Server: Zone Editor (MX Records)

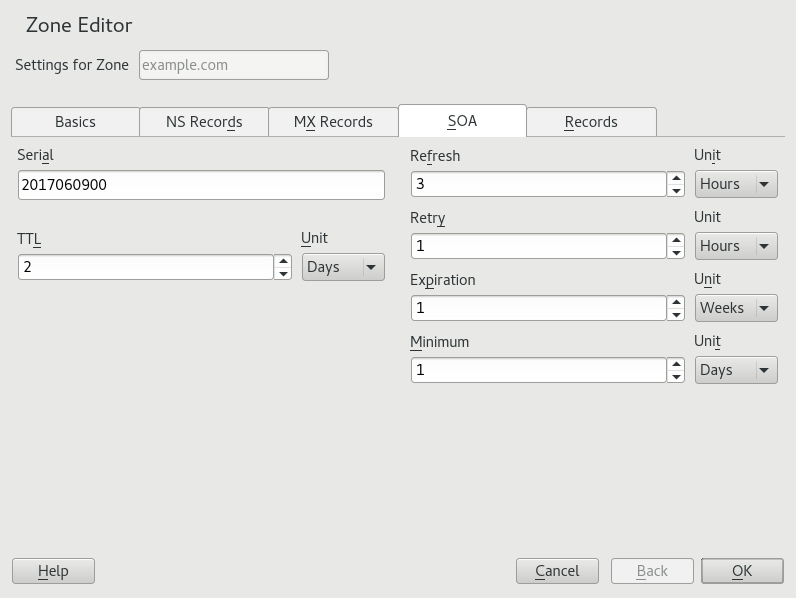

- 19.8 DNS Server: Zone Editor (SOA)

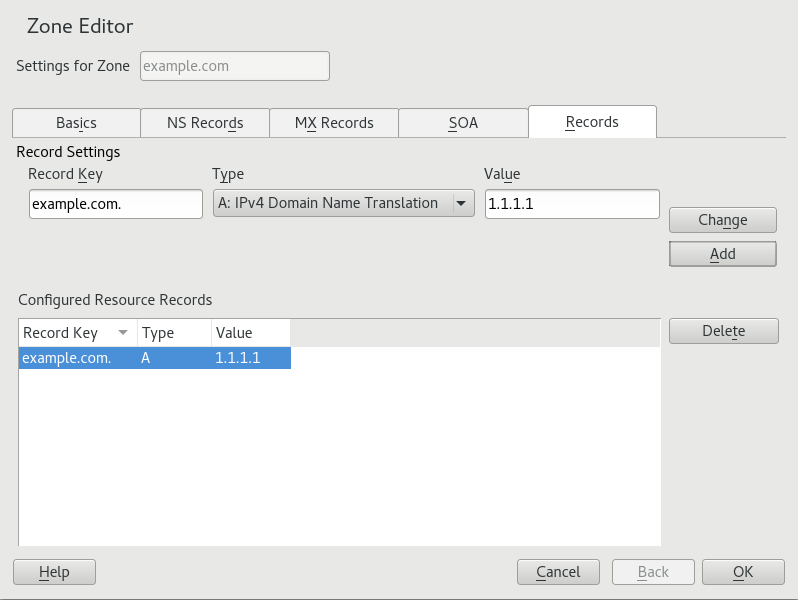

- 19.9 Adding a Record for a Master Zone

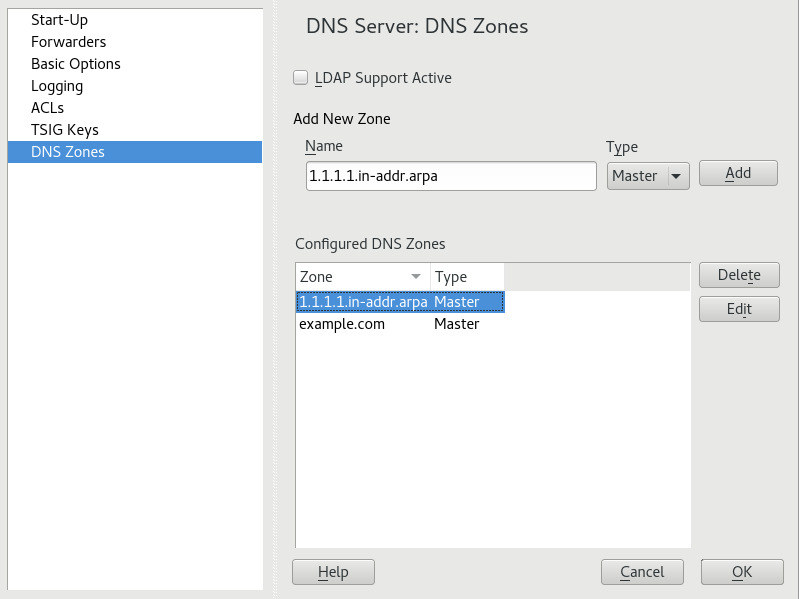

- 19.10 Adding a Reverse Zone

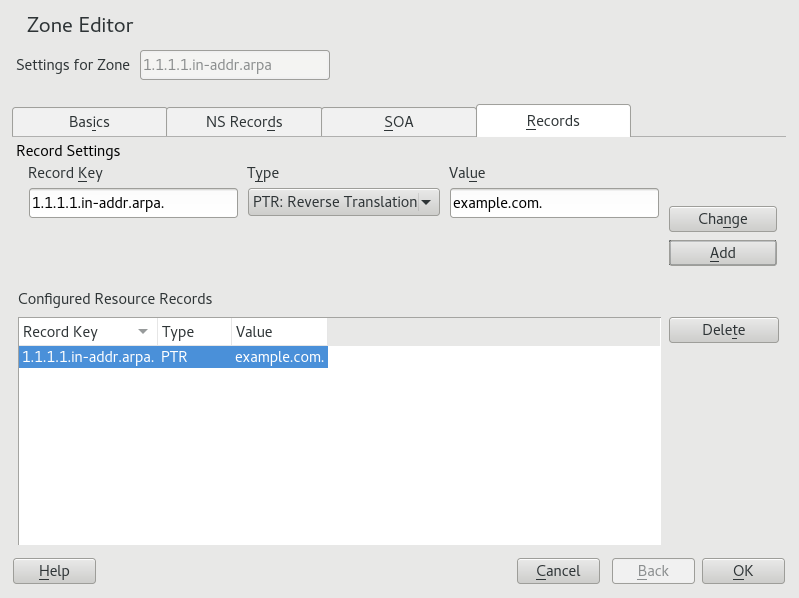

- 19.11 Adding a Reverse Record

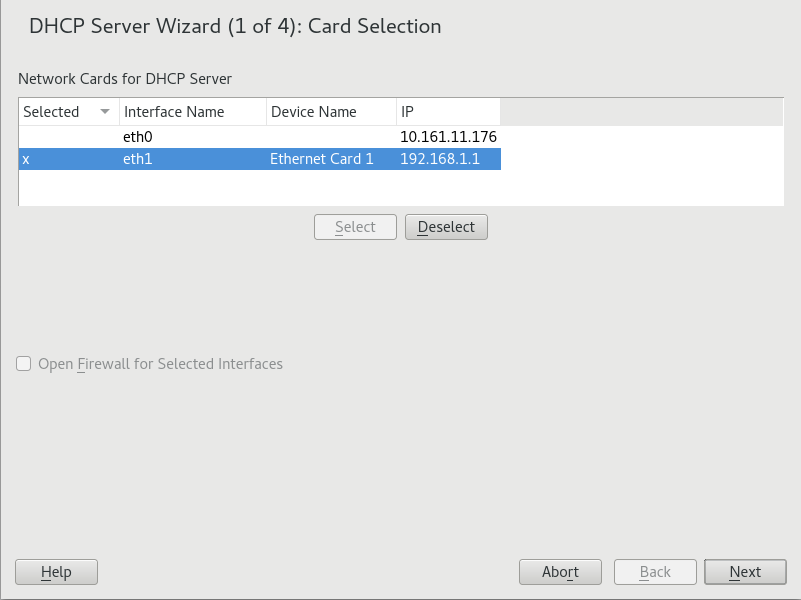

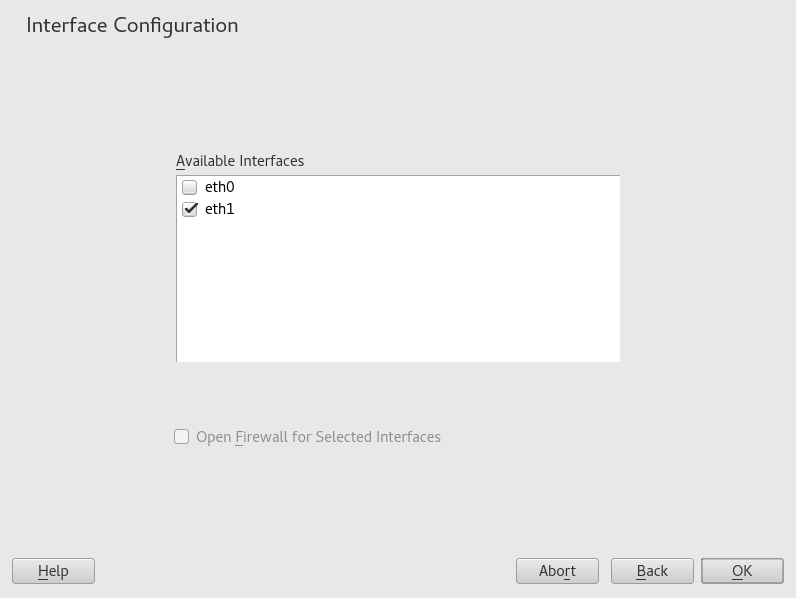

- 20.1 DHCP Server: Card Selection

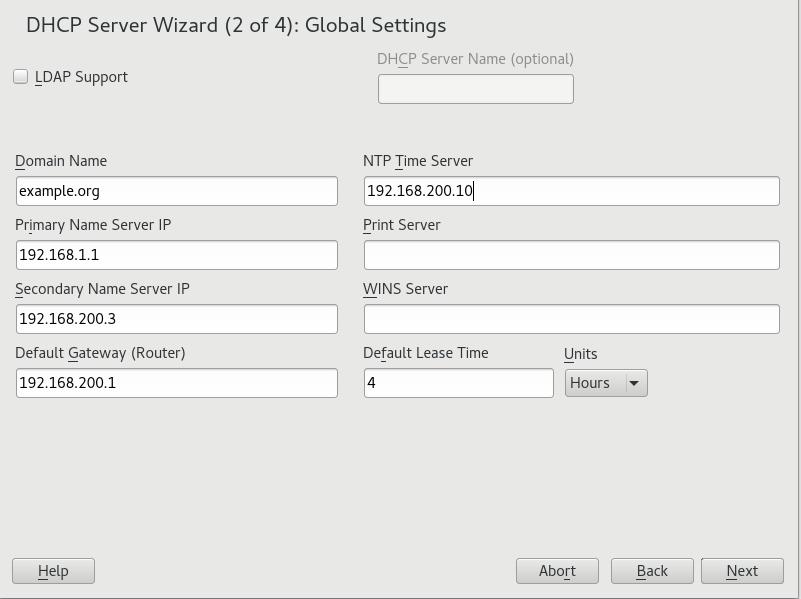

- 20.2 DHCP Server: Global Settings

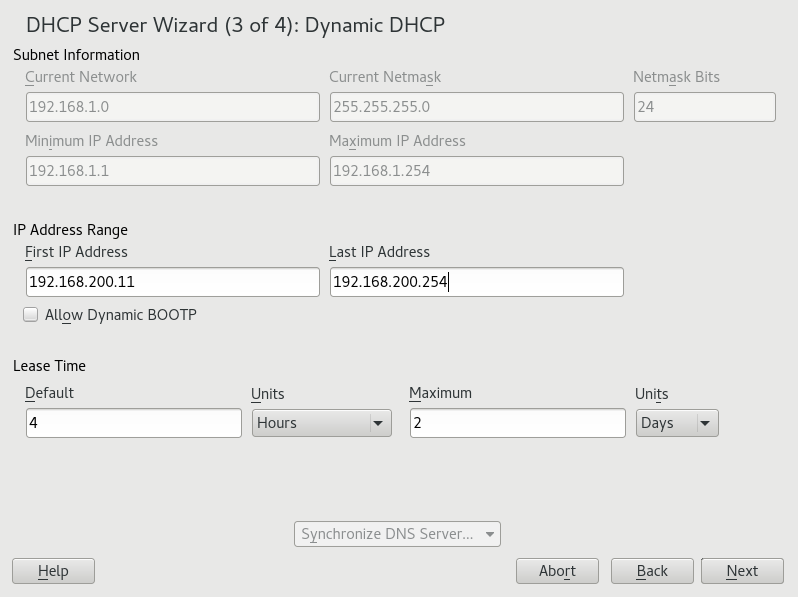

- 20.3 DHCP Server: Dynamic DHCP

- 20.4 DHCP Server: Start-Up

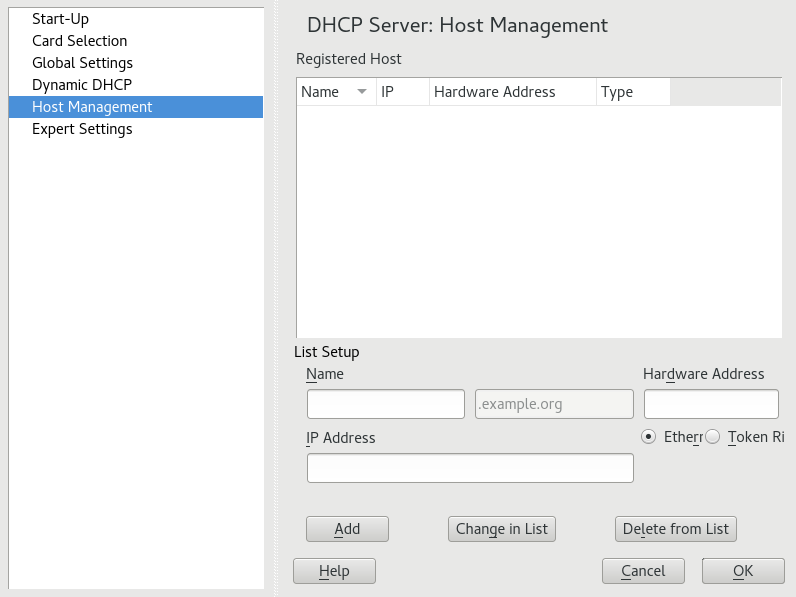

- 20.5 DHCP Server: Host Management

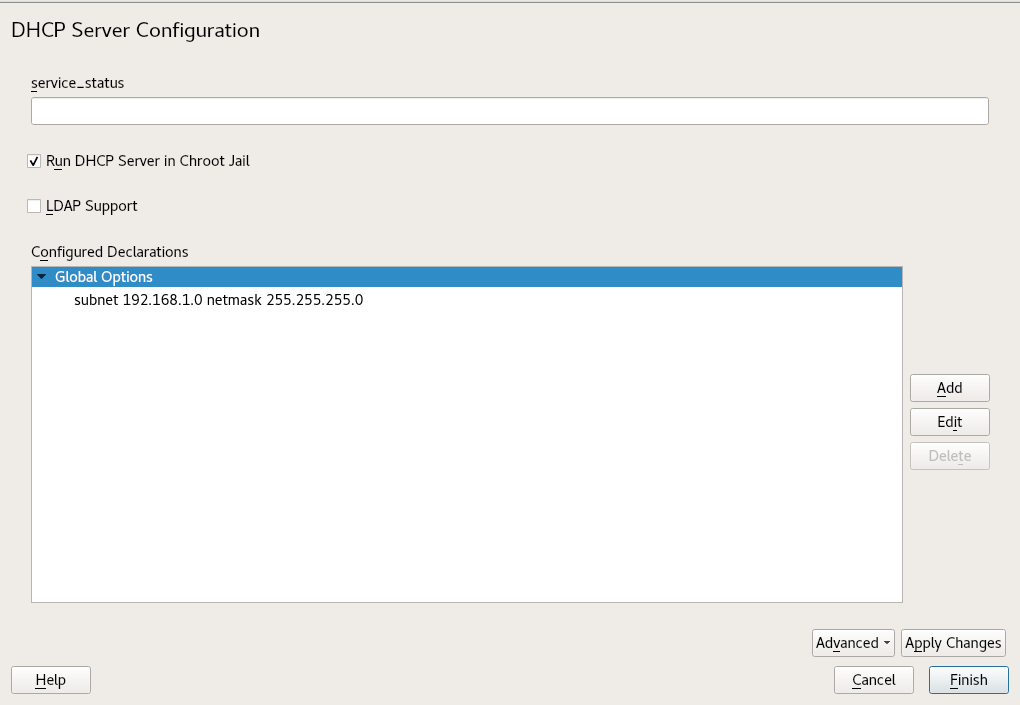

- 20.6 DHCP Server: Chroot Jail and Declarations

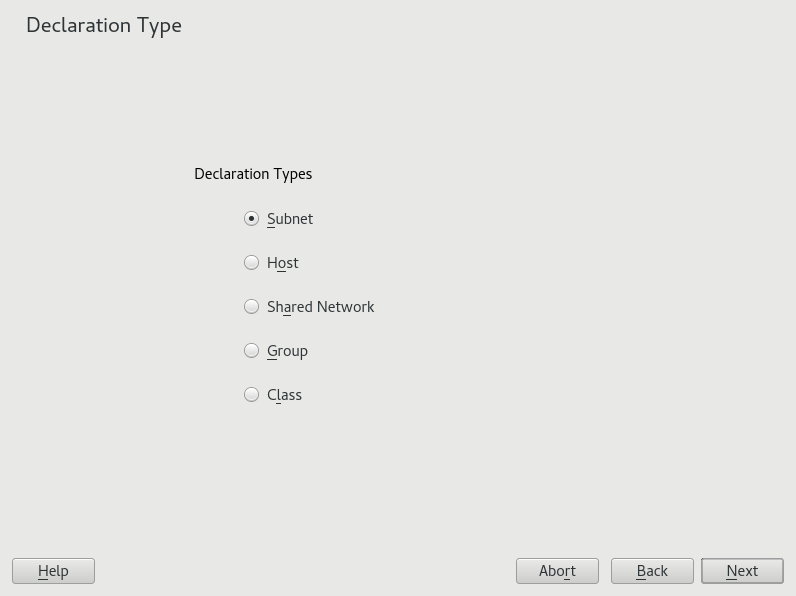

- 20.7 DHCP Server: Selecting a Declaration Type

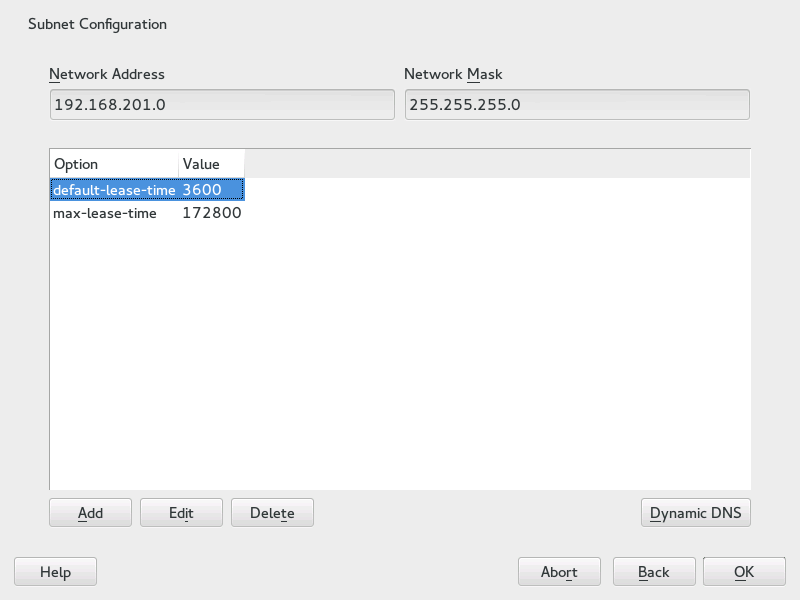

- 20.8 DHCP Server: Configuring Subnets

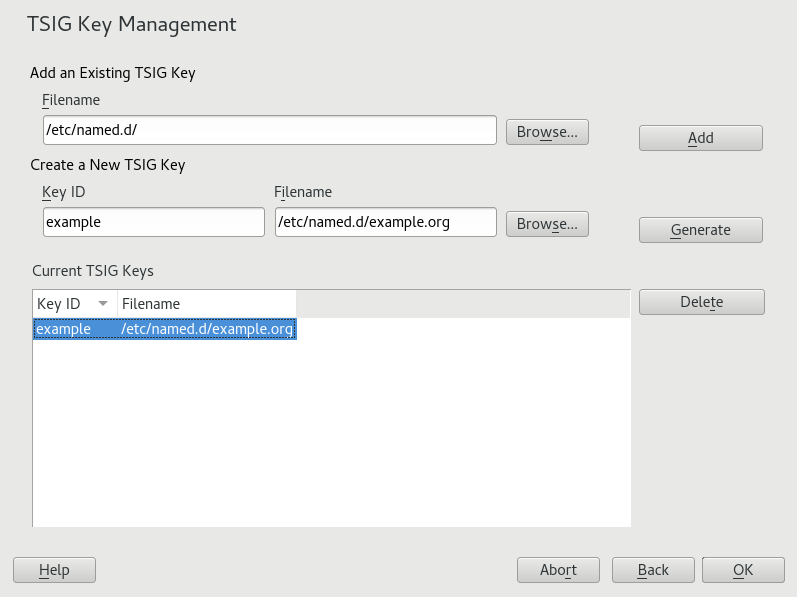

- 20.9 DHCP Server: TSIG Configuration

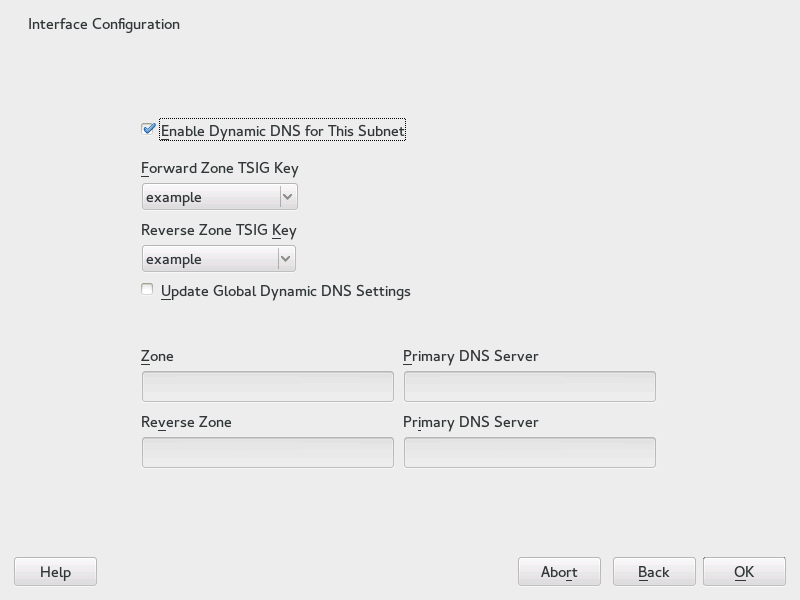

- 20.10 DHCP Server: Interface Configuration for Dynamic DNS

- 20.11 DHCP Server: Network Interface and Firewall

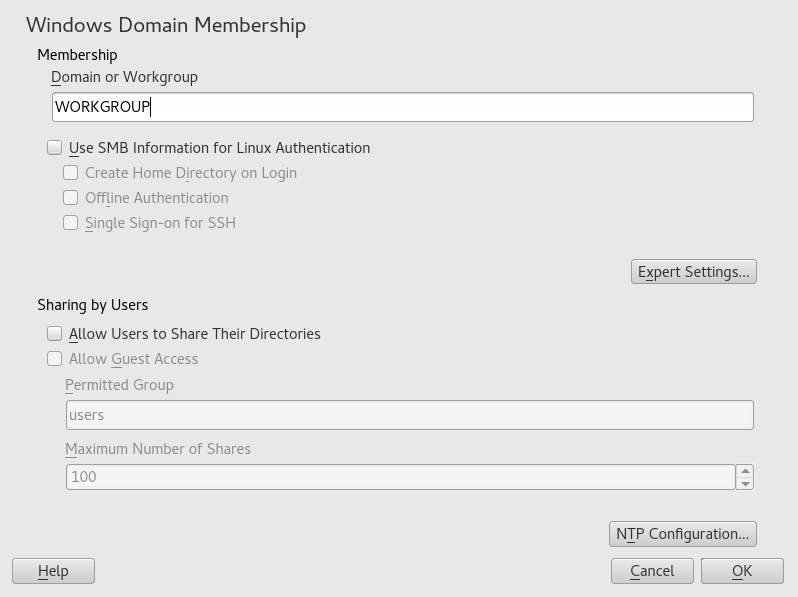

- 21.1 Determining Windows Domain Membership

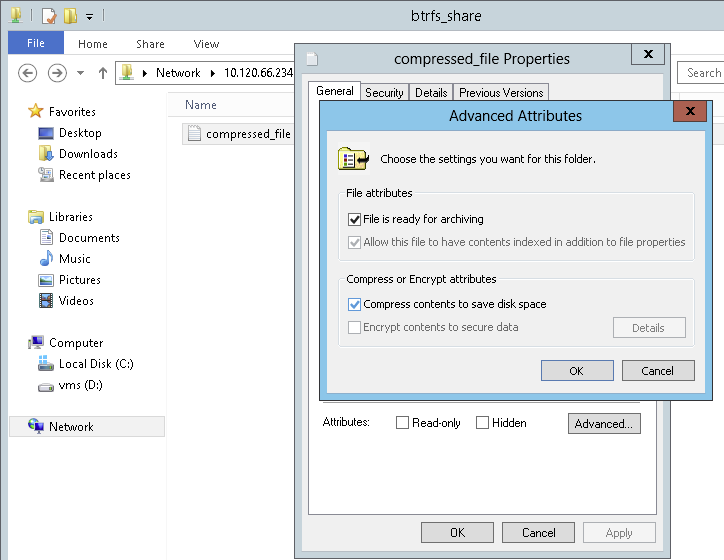

- 21.2 Windows Explorer Dialog

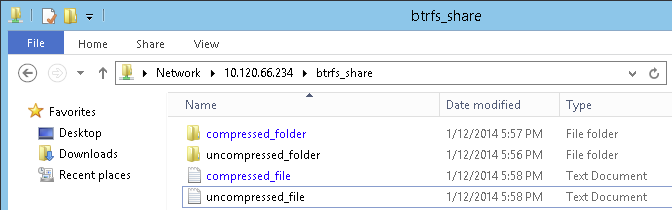

- 21.3 Windows Explorer Directory Listing with Compressed Files

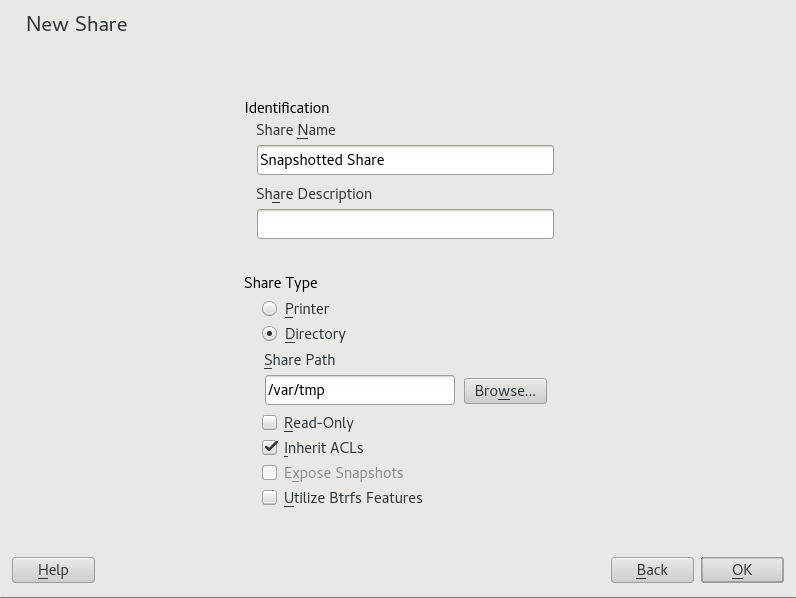

- 21.4 Adding a New Samba Share with Snapshotting Enabled

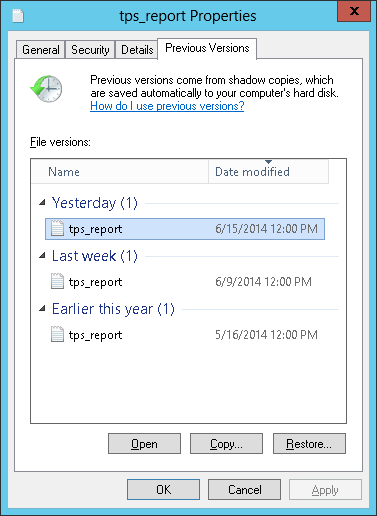

- 21.5 The tab in Windows Explorer

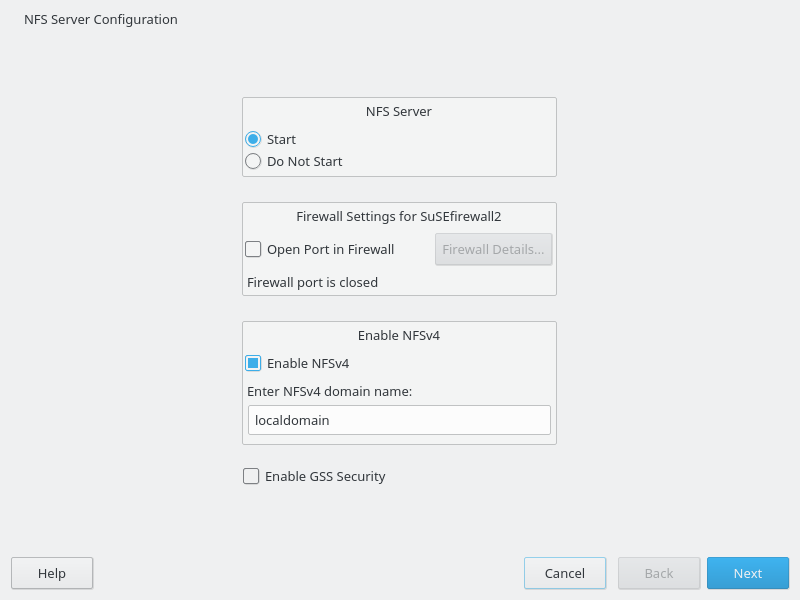

- 22.1 NFS Server Configuration Tool

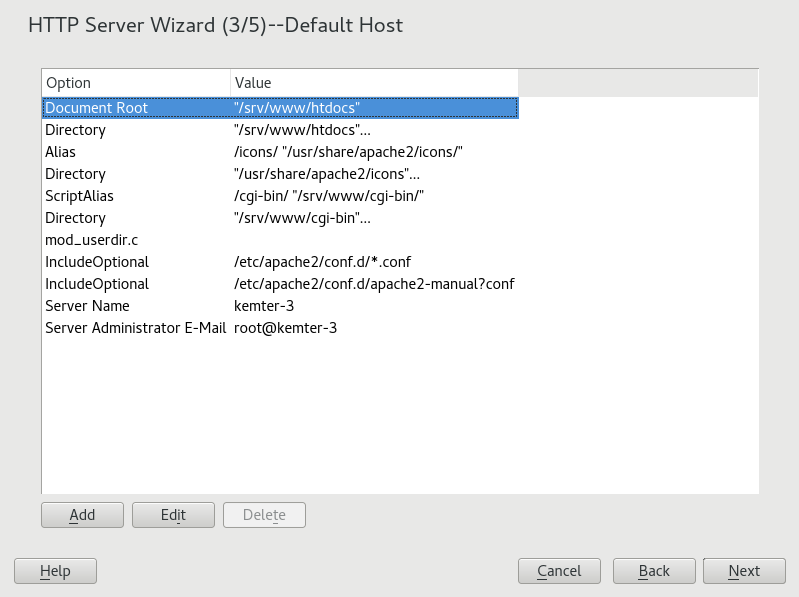

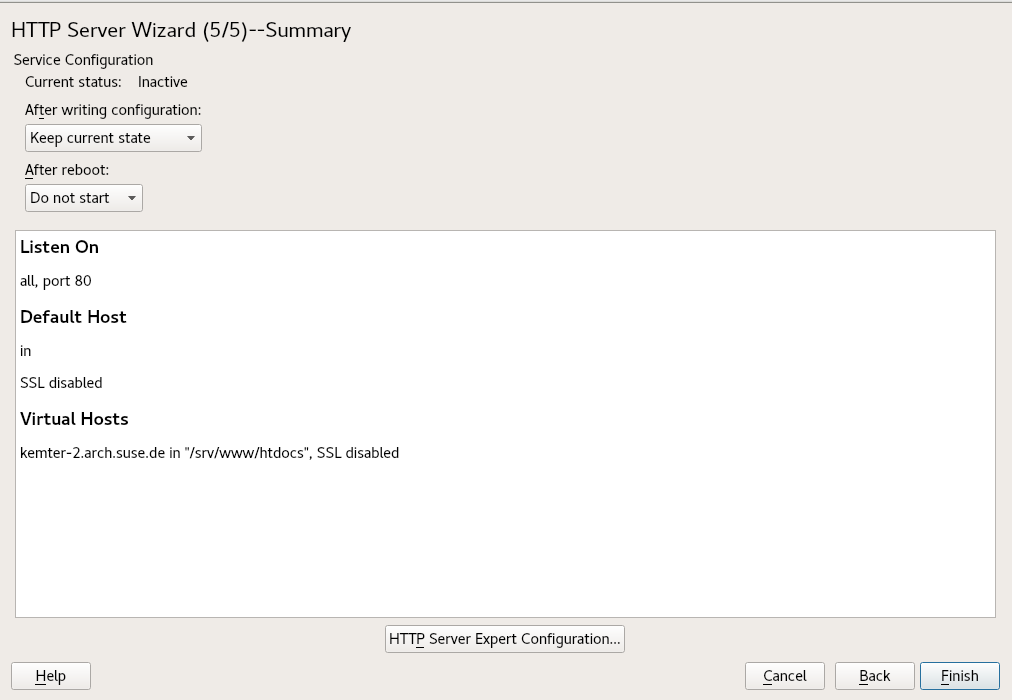

- 24.1 HTTP Server Wizard: Default Host

- 24.2 HTTP Server Wizard: Summary

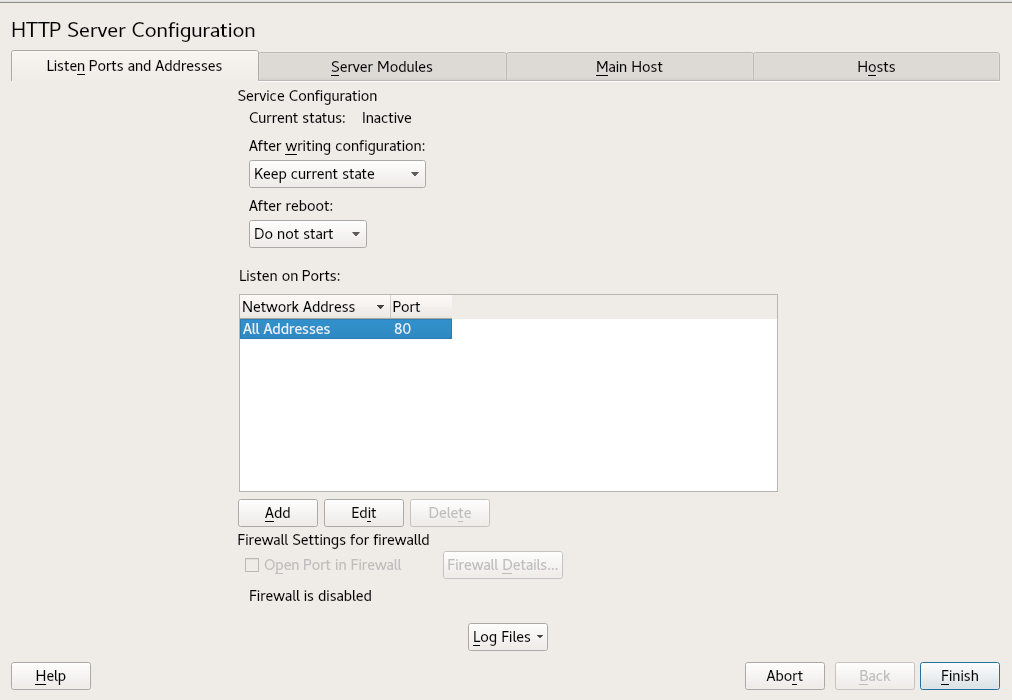

- 24.3 HTTP Server Configuration: Listen Ports and Addresses

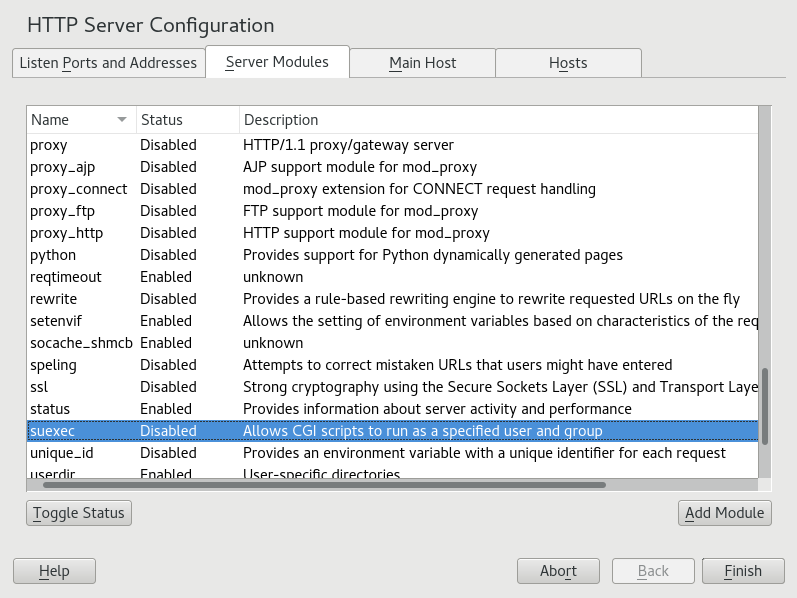

- 24.4 HTTP Server Configuration: Server Modules

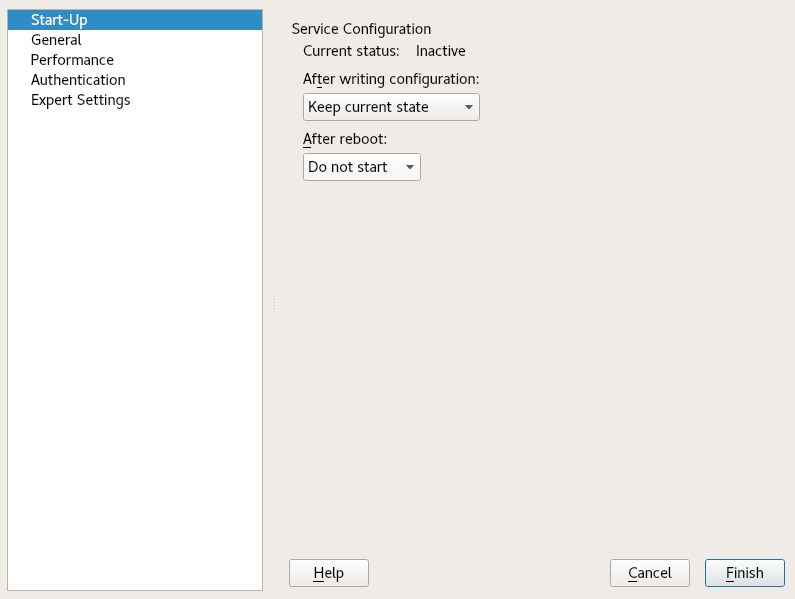

- 25.1 FTP Server Configuration — Start-Up

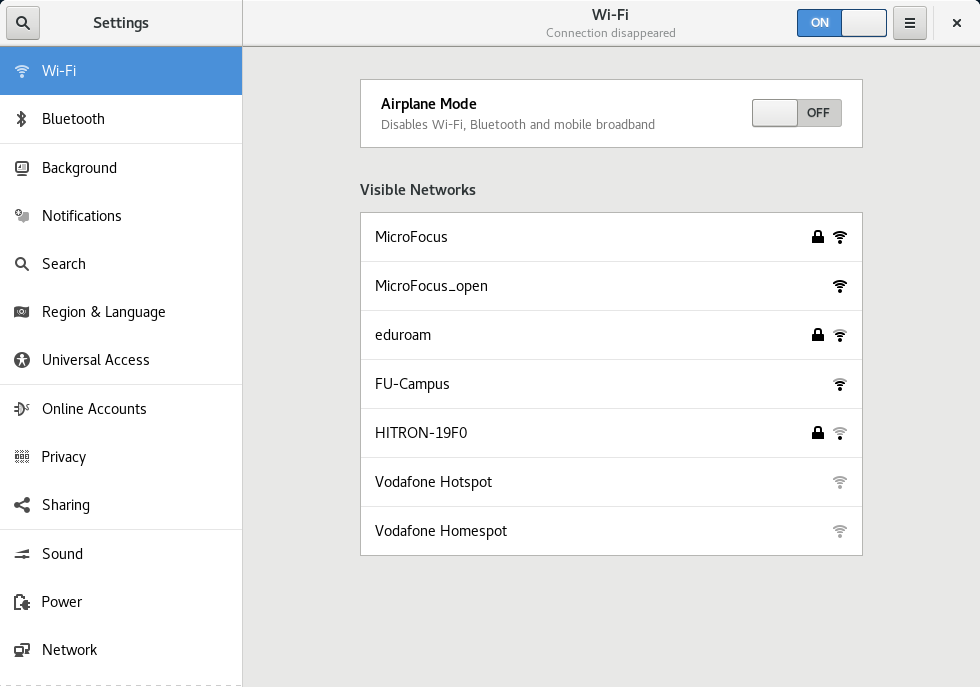

- 27.1 Integrating a Mobile Computer in an Existing Environment

- 28.1 GNOME Network Connections Dialog

- 2.1 The Most Important RPM Query Options

- 2.2 RPM Verify Options

- 7.1 Generating PFL from Fontconfig rules

- 7.2 Results from Generating PFL from Fontconfig Rules with Changed Order

- 7.3 Results from Generating PFL from Fontconfig Rules

- 10.1 Service Management Commands

- 10.2 Commands for Enabling and Disabling Services

- 10.3 System V Runlevels and systemd Target Units

- 13.1 Private IP Address Domains

- 13.2 Parameters for /etc/host.conf

- 13.3 Databases Available via /etc/nsswitch.conf

- 13.4 Configuration Options for NSS “Databases”

- 13.5 Feature Comparison between Bonding and Team

- 15.1 ulimit: Setting Resources for the User

- 27.1 Use Cases for NetworkManager

- 27.2 Overview of Various Wi-Fi Standards

- 2.1 Zypper—List of Known Repositories

- 2.2 rpm -q -i wget

- 2.3 Script to Search for Packages

- 3.1 Example timeline configuration

- 7.1 Specifying Rendering Algorithms

- 7.2 Aliases and Family Name Substitutions

- 7.3 Aliases and Family Name Substitutions

- 7.4 Aliases and Family Names Substitutions

- 10.1 List Active Services

- 10.2 List Failed Services

- 10.3 List all Processes Belonging to a Service

- 12.1 Usage of grub2-mkconfig

- 12.2 Usage of grub2-mkrescue

- 12.3 Usage of grub2-script-check

- 12.4 Usage of grub2-once

- 13.1 Writing IP Addresses

- 13.2 Linking IP Addresses to the Netmask

- 13.3 Sample IPv6 Address

- 13.4 IPv6 Address Specifying the Prefix Length

- 13.5 Common Network Interfaces and Some Static Routes

- 13.6 /var/run/netconfig/resolv.conf

- 13.7 /etc/hosts

- 13.8 /etc/networks

- 13.9 /etc/host.conf

- 13.10 /etc/nsswitch.conf

- 13.11 Output of the Command ping

- 13.12 Configuration for Load Balancing with Network Teaming

- 13.13 Configuration for DHCP Network Teaming Device

- 15.1 Entry in /etc/crontab

- 15.2 /etc/crontab: Remove Time Stamp Files

- 15.3 ulimit: Settings in ~/.bashrc

- 16.1 Example udev Rules

- 19.1 Forwarding Options in named.conf

- 19.2 A Basic /etc/named.conf

- 19.3 Entry to Disable Logging

- 19.4 Zone Entry for example.com

- 19.5 Zone Entry for example.net

- 19.6 The /var/lib/named/example.com.zone File

- 19.7 Reverse Lookup

- 20.1 The Configuration File /etc/dhcpd.conf

- 20.2 Additions to the Configuration File

- 21.1 A CD-ROM Share

- 21.2 [homes] Share

- 21.3 Global Section in smb.conf

- 21.4 Using rpcclient to Request a Windows Server 2012 Share Snapshot

- 24.1 Basic Examples of Name-Based VirtualHost Entries

- 24.2 Name-Based VirtualHost Directives

- 24.3 IP-Based VirtualHost Directives

- 24.4 Basic VirtualHost Configuration

- 24.5 VirtualHost CGI Configuration

- 26.1 A Request With squidclient

- 26.2 Defining ACL Rules

Copyright © 2006–2020 SUSE LLC and contributors. All rights reserved.

Permission is granted to copy, distribute and/or modify this document under the terms of the GNU Free Documentation License, Version 1.2 or (at your option) version 1.3; with the Invariant Section being this copyright notice and license. A copy of the license version 1.2 is included in the section entitled “GNU Free Documentation License”.

For SUSE trademarks, see https://www.suse.com/company/legal/. All other third-party trademarks are the property of their respective owners. Trademark symbols (®, ™ etc.) denote trademarks of SUSE and its affiliates. Asterisks (*) denote third-party trademarks.

All information found in this book has been compiled with utmost attention to detail. However, this does not guarantee complete accuracy. Neither SUSE LLC, its affiliates, the authors nor the translators shall be held liable for possible errors or the consequences thereof.

About This Guide

This manual gives you a general understanding of openSUSE® Leap. It is intended mainly for system administrators and home users with basic system administration knowledge. Check out the various parts of this manual for a selection of applications needed in everyday life and in-depth descriptions of advanced installation and configuration scenarios.

- Advanced Administration

Learn about advanced administration tasks such as using YaST in text mode and managing software from the command line. Find out how to do system rollbacks with Snapper and how to use advanced storage techniques on openSUSE Leap.

- System

Get an introduction to the components of your Linux system and a deeper understanding of their interaction.

- Services

Learn how to configure the various network and file services that come with openSUSE Leap.

- Mobile Computers

Get an introduction to mobile computing with openSUSE Leap and get to know the various options for wireless computing and power management.

1 Available Documentation

Note: Online Documentation and Latest Updates

Documentation for our products is available at http://doc.opensuse.org/, where you can also find the latest updates, and browse or download the documentation in various formats. The latest documentation updates are usually available in the English version of the documentation.

The following documentation is available for this product:

- Book “Start-Up”

This manual will see you through your initial contact with openSUSE® Leap. Check out the various parts of this manual to learn how to install, use and enjoy your system.

- Reference

Covers system administration tasks like maintaining, monitoring and customizing an initially installed system.

- Book “Virtualization Guide”

Describes virtualization technology in general, introduces libvirt, the unified interface to virtualization, and provides detailed information on specific hypervisors.

- Book “AutoYaST Guide”

AutoYaST is a system for unattended mass deployment of openSUSE Leap systems using an AutoYaST profile containing installation and configuration data. The manual guides you through the basic steps of auto-installation: preparation, installation, and configuration.

- Book “Security and Hardening Guide”

Introduces basic concepts of system security, covering both local and network security aspects. Shows how to use the security software included with the product, such as AppArmor, SELinux, or the auditing system that reliably collects information about any security-relevant events. Supports the administrator with security-related choices and decisions in installing and setting up a secure SUSE Linux Enterprise Server and additional processes to further secure and harden that installation.

- Book “System Analysis and Tuning Guide”

An administrator's guide for problem detection, resolution and optimization. Find out how to inspect and optimize your system by means of monitoring tools and how to efficiently manage resources. Also contains an overview of common problems and solutions and of additional help and documentation resources.

- Book “GNOME User Guide”

Introduces the GNOME desktop of openSUSE Leap. It guides you through using and configuring the desktop and helps you perform key tasks. It is intended mainly for end users who want to make efficient use of GNOME as their default desktop.

The release notes for this product are available at https://www.suse.com/releasenotes/.

2 Giving Feedback

Your feedback and contributions to this documentation are welcome! Several channels are available:

- Bug Reports

Report issues with the documentation at https://bugzilla.opensuse.org/. To simplify this process, you can use the links next to headlines in the HTML version of this document. These preselect the right product and category in Bugzilla and add a link to the current section. You can start typing your bug report right away. A Bugzilla account is required.

- Contributions

To contribute to this documentation, use the links next to headlines in the HTML version of this document. They take you to the source code on GitHub, where you can open a pull request. A GitHub account is required.

For more information about the documentation environment used for this documentation, see the repository's README.

Alternatively, you can report errors and send feedback concerning the documentation to <doc-team@suse.com>. Make sure to include the document title, the product version and the publication date of the documentation. Refer to the relevant section number and title (or include the URL) and provide a concise description of the problem.

- Help

If you need further help on openSUSE Leap, see https://en.opensuse.org/Portal:Support.

3 Documentation Conventions

The following notices and typographical conventions are used in this documentation:

/etc/passwd: directory names and file names

PLACEHOLDER: replace PLACEHOLDER with the actual value

PATH: the environment variable PATH

ls, --help: commands, options, and parameters

user: users or groups

package name: name of a package

Alt, Alt–F1: a key to press or a key combination; keys are shown in uppercase as on a keyboard

, › : menu items, buttons

Dancing Penguins (Chapter Penguins, ↑Another Manual): This is a reference to a chapter in another manual.

Commands that must be run with root privileges. Often you can also prefix these commands with the sudo command to run them as a non-privileged user.

root # command

tux > sudo command

Commands that can be run by non-privileged users.

tux > command

Notices

Warning: Warning Notice

Vital information you must be aware of before proceeding. Warns you about security issues, potential loss of data, damage to hardware, or physical hazards.

Important: Important Notice

Important information you should be aware of before proceeding.

Note: Note Notice

Additional information, for example about differences in software versions.

Tip: Tip Notice

Helpful information, like a guideline or a piece of practical advice.

4 Source Code

The source code of openSUSE Leap is publicly available. Refer to http://en.opensuse.org/Source_code for download links and more information.

5 Acknowledgments

With a lot of voluntary commitment, the developers of Linux cooperate on a global scale to promote the development of Linux. We thank them for their efforts—this distribution would not exist without them. Special thanks, of course, goes to Linus Torvalds.

Part I Advanced Administration

- 1 YaST in Text Mode

The ncurses-based pseudo-graphical YaST interface is designed primarily to help system administrators to manage systems without an X server. The interface offers several advantages compared to the conventional GUI. You can navigate the ncurses interface using the keyboard, and there are keyboard sho…

- 2 Managing Software with Command Line Tools

This chapter describes Zypper and RPM, two command line tools for managing software. For a definition of the terminology used in this context (for example, repository, patch, or update), refer to Book “Start-Up”, Chapter 10 “Installing or Removing Software”, Section 10.1 “Definition of Terms”.

- 3 System Recovery and Snapshot Management with Snapper

Snapper allows creating and managing file system snapshots. File system snapshots allow keeping a copy of the state of a file system at a certain point in time. The standard setup of Snapper is designed to allow rolling back system changes. However, you can also use it to create on-disk backups of user data. As the basis for this functionality, Snapper uses the Btrfs file system or thinly-provisioned LVM volumes with an XFS or Ext4 file system.

- 4 Remote Graphical Sessions with VNC

Virtual Network Computing (VNC) enables you to access a remote computer via a graphical desktop, and run remote graphical applications. VNC is platform-independent and lets you access the remote machine from any operating system. This chapter describes how to connect to a VNC server with the desktop clients vncviewer and Remmina, and how to operate a VNC server.

openSUSE Leap supports two different kinds of VNC sessions: One-time sessions that “live” as long as the VNC connection from the client is kept up, and persistent sessions that “live” until they are explicitly terminated.

A VNC server can offer both kinds of sessions simultaneously on different ports, but an open session cannot be converted from one type to the other.

- 5 Expert Partitioner

Sophisticated system configurations require specific disk setups. All common partitioning tasks can be done during the installation. To get persistent device naming with block devices, use the block devices below /dev/disk/by-id or /dev/disk/by-uuid. Logical Volume Management (LVM) is a disk partiti…

- 6 Installing Multiple Kernel Versions

openSUSE Leap supports the parallel installation of multiple kernel versions. When installing a second kernel, a boot entry and an initrd are automatically created, so no further manual configuration is needed. When rebooting the machine, the newly added kernel is available as an additional boot parameter.

Using this functionality, you can safely test kernel updates while being able to always fall back to the proven former kernel. To do this, do not use the update tools (such as the YaST Online Update or the updater applet), but instead follow the process described in this chapter.

- 7 Graphical User Interface

openSUSE Leap includes the X.org server, Wayland and the GNOME desktop. This chapter describes the configuration of the graphical user interface for all users.

1 YaST in Text Mode

The ncurses-based pseudo-graphical YaST interface is designed primarily to help system administrators manage systems without an X server. The interface offers several advantages compared to the conventional GUI. You can navigate the ncurses interface using the keyboard, and there are keyboard shortcuts for practically all interface elements. The ncurses interface is light on resources and runs fast even on modest hardware. You can run the ncurses-based version of YaST via an SSH connection, so you can administer remote systems. Keep in mind that the minimum supported size of the terminal emulator in which to run YaST is 80x25 characters.

Figure 1.1: Main Window of YaST in Text Mode

To launch the ncurses-based version of YaST, open the terminal and run the sudo yast command. Use the Tab or arrow keys to navigate between interface elements like menu items, fields, and buttons. All menu items and buttons in YaST can be accessed using the appropriate function keys or keyboard shortcuts. For example, you can cancel the current operation by pressing F9, while the F10 key can be used to accept the changes. Each menu item and button in YaST's ncurses-based interface has a highlighted letter in its label. This letter is part of the keyboard shortcut assigned to the interface element. For example, if the letter Q is highlighted in a button's label, you can activate that button by pressing Alt–Q.
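Because the minimum supported terminal size is 80x25 characters, a script that starts YaST in text mode could check the terminal size first. The following is a minimal sketch under that assumption; the function names term_size_ok and yast_tui are hypothetical, and the size is queried with the standard ncurses utility tput.

```shell
#!/usr/bin/env bash
# Hypothetical wrapper: start YaST in text mode only if the terminal
# meets the documented minimum size of 80x25 characters.

MIN_COLS=80
MIN_LINES=25

# term_size_ok COLS LINES: succeed if the given size meets the minimum
term_size_ok() {
    [ "$1" -ge "$MIN_COLS" ] && [ "$2" -ge "$MIN_LINES" ]
}

# yast_tui [MODULE...]: query the terminal size with tput, then start YaST
yast_tui() {
    local cols rows
    cols=$(tput cols) || return 1
    rows=$(tput lines) || return 1
    if ! term_size_ok "$cols" "$rows"; then
        echo "Terminal is ${cols}x${rows}; YaST needs at least ${MIN_COLS}x${MIN_LINES}" >&2
        return 1
    fi
    sudo yast "$@"
}
```

For example, yast_tui lan would start the lan module only when the terminal is large enough, and print a message otherwise.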

Tip: Refreshing YaST Dialogs

If a YaST dialog gets corrupted or distorted (for example, while resizing the window), press Ctrl–L to refresh and restore its contents.

1.2 Advanced Key Combinations

The ncurses-based version of YaST offers several advanced key combinations.

- Shift–F1

List advanced hotkeys.

- Shift–F4

Change color schema.

- Ctrl–\

Quit the application.

- Ctrl–L

Refresh screen.

- Ctrl–D F1

List advanced hotkeys.

- Ctrl–D Shift–D

Dump dialog to the log file as a screenshot.

- Ctrl–D Shift–Y

Open YDialogSpy to see the widget hierarchy.

1.3 Restriction of Key Combinations

If your window manager uses global Alt combinations, the Alt combinations in YaST might not work. Keys like Alt or Shift can also be occupied by the settings of the terminal.

- Using Alt instead of Esc

Alt shortcuts can be executed with Esc instead of Alt. For example, Esc–H replaces Alt–H. (Press Esc, then press H.)

- Backward and Forward Navigation with Ctrl–F and Ctrl–B

If the Alt and Shift combinations are taken over by the window manager or the terminal, use the combinations Ctrl–F (forward) and Ctrl–B (backward) instead.

- Restriction of Function Keys

The function keys (F1 to F12) are also used to trigger YaST functions. Certain function keys might be taken over by the terminal and may not be available for YaST. However, the Alt key combinations and function keys should always be fully available on a text-only console.

1.4 YaST Command Line Options

Besides the text mode interface, YaST provides a command line interface. To get a list of YaST command line options, use the following command:

tux > sudo yast -h

1.4.1 Installing Packages from the Command Line

If you know the package name, and the package is provided by an active installation repository, you can use the command line option -i to install the package:

tux > sudo yast -i package_name

or

tux > sudo yast --install package_name

package_name can be a single short package name (for example gvim) installed with dependency checking, or the full path to an RPM package, which is installed without dependency checking.

While YaST offers basic functionality for managing software from the command line, consider using Zypper for more advanced package management tasks. Find more information on using Zypper in Section 2.1, “Using Zypper”.
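When a script needs to install several packages, the -i option can be called once per package name. A minimal sketch, assuming that yast returns a non-zero exit status when an installation fails; install_packages is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Hypothetical helper: install each named package with "yast -i".
# Assumes a non-zero exit status from yast signals a failed installation.
install_packages() {
    local pkg
    for pkg in "$@"; do
        if ! sudo yast -i "$pkg"; then
            echo "Installation of $pkg failed" >&2
            return 1
        fi
    done
}
```

For example, install_packages gvim wget installs both packages one after the other and stops at the first failure.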

1.4.2 Working with Individual Modules

To save time, you can start individual YaST modules using the following command:

tux > sudo yast module_name

View a list of all modules available on your system with yast -l or yast --list.
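In scripts, it can help to check that a module exists before starting it. The sketch below assumes that yast --list prints one module name per line, possibly after a header line; verify this against the output on your system. The function names has_yast_module and start_module are hypothetical.

```shell
#!/usr/bin/env bash
# Assumption: "yast --list" prints one module name per line, possibly after
# a header; check the actual output format on your system.

# has_yast_module MODULE: succeed if MODULE appears as a whole line
has_yast_module() {
    yast --list 2>/dev/null | grep -qxF -- "$1"
}

# start_module MODULE: start MODULE only if it is installed
start_module() {
    if ! has_yast_module "$1"; then
        echo "YaST module '$1' is not available" >&2
        return 1
    fi
    sudo yast "$1"
}
```

The exact-line match (grep -x) keeps a name such as dns from matching dns-server.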

1.4.3 Command Line Parameters of YaST Modules

To use YaST functionality in scripts, YaST provides command line support for individual modules. However, not all modules have command line support. To display the available options of a module, use the following command:

tux > sudo yast module_name help

If a module does not provide command line support, it starts in text mode and displays the following message:

This YaST module does not support the command line interface.

The following sections describe all YaST modules with command line support, along with a brief explanation of all their commands and available options.

1.4.3.1 Common YaST Module Commands

All YaST modules support the following commands:

- help

Lists all the module's supported commands with their description:

tux > sudo yast lan help

- longhelp

Same as help, but adds a detailed list of all commands' options and their descriptions:

tux > sudo yast lan longhelp

- xmlhelp

Same as longhelp, but the output is structured as an XML document and redirected to a file:

tux > sudo yast lan xmlhelp xmlfile=/tmp/yast_lan.xml

- interactive

Enters the interactive mode. This lets you run the module's commands without prefixing them with sudo yast. Use exit to leave the interactive mode.
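The xmlhelp command lends itself to generating reference material for several modules at once. A minimal sketch; the output directory, the yast_MODULE.xml naming scheme, and the function name dump_xmlhelp are illustrative assumptions.

```shell
#!/usr/bin/env bash
# Hypothetical helper: write the XML help of each given YaST module to
# OUTDIR/yast_MODULE.xml using the "xmlhelp xmlfile=..." command.
dump_xmlhelp() {
    local outdir=$1 module
    shift
    mkdir -p "$outdir" || return 1
    for module in "$@"; do
        sudo yast "$module" xmlhelp xmlfile="$outdir/yast_$module.xml" || return 1
    done
}
```

For example, dump_xmlhelp /tmp/yast-help lan dns-server ftp-server creates one XML file per module in /tmp/yast-help.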

1.4.3.2 yast add-on

Adds a new add-on product from the specified path:

tux > sudo yast add-on http://server.name/directory/Lang-AddOn-CD1/

You can use the following protocols to specify the source path: http:// ftp:// nfs:// disk:// cd:// or dvd://.
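A script can reject a source path early if it does not use one of the protocols listed above. A minimal sketch; addon_url_supported and add_addon are hypothetical names, and the accepted prefixes are taken only from the list in this section.

```shell
#!/usr/bin/env bash
# Hypothetical guard: accept only the source path protocols listed for
# "yast add-on" in this section before calling it.

addon_url_supported() {
    case $1 in
        http://*|ftp://*|nfs://*|disk://*|cd://*|dvd://*) return 0 ;;
        *) return 1 ;;
    esac
}

add_addon() {
    if ! addon_url_supported "$1"; then
        echo "Unsupported source path: $1" >&2
        return 1
    fi
    sudo yast add-on "$1"
}
```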

1.4.3.3 yast audit-laf

Displays and configures the Linux Audit Framework. Refer to the Book “Security and Hardening Guide” for more details. yast audit-laf accepts the following commands:

- set

Sets an option:

tux > sudo yast audit-laf set log_file=/tmp/audit.log

For a complete list of options, run yast audit-laf set help.

- show

Displays settings of an option:

tux > sudo yast audit-laf show diskspace
space_left: 75
space_left_action: SYSLOG
admin_space_left: 50
admin_space_left_action: SUSPEND
action_mail_acct: root
disk_full_action: SUSPEND
disk_error_action: SUSPEND

For a complete list of options, run yast audit-laf show help.
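The "key: value" output of the show command is easy to process in scripts, for example to read a single threshold for monitoring. A minimal sketch, assuming each setting is printed on its own line as shown above; audit_value is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# audit_value KEY: read "key: value" lines (as printed by
# "yast audit-laf show diskspace") from stdin and print the value of KEY.
# Exits with status 1 if KEY does not occur.
audit_value() {
    awk -v key="$1" '
        { sub(/^[ \t]+/, "") }                        # ignore leading indentation
        index($0, key ": ") == 1 {                    # line starts with "KEY: "
            print substr($0, length(key) + 3)
            found = 1
        }
        END { exit !found }'
}
```

Usage: sudo yast audit-laf show diskspace | audit_value space_left. Matching the full "KEY: " prefix keeps space_left from also matching space_left_action.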

1.4.3.4 yast dhcp-server

Manages the DHCP server and configures its settings. yast dhcp-server accepts the following commands:

- disable

Disables the DHCP server service.

- enable

Enables the DHCP server service.

- host

Configures settings for individual hosts.

- interface

Specifies which network interface to listen on:

tux > sudo yast dhcp-server interface current
Selected Interfaces: eth0
Other Interfaces: bond0, pbu, eth1

For a complete list of options, run yast dhcp-server interface help.

- options

Manages global DHCP options. For a complete list of options, run yast dhcp-server options help.

- status

Prints the status of the DHCP service.

- subnet

Manages the DHCP subnet options. For a complete list of options, run yast dhcp-server subnet help.
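The enable, status, and interface commands can be chained to turn on the service and confirm the result in one step. A minimal sketch; enable_dhcp_server is a hypothetical name, and it assumes that yast dhcp-server enable returns a non-zero exit status on failure.

```shell
#!/usr/bin/env bash
# Hypothetical helper: enable the DHCP server service, then show its status
# and the selected interfaces. Assumes a non-zero exit status from
# "yast dhcp-server enable" signals a failure.
enable_dhcp_server() {
    sudo yast dhcp-server enable || return 1
    sudo yast dhcp-server status
    sudo yast dhcp-server interface current
}
```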

1.4.3.5 yast dns-server

Manages the DNS server configuration. yast dns-server accepts the following commands:

- acls

Displays access control list settings:

tux > sudo yast dns-server acls show
ACLs:
-----
Name       Type        Value
----------------------------
any        Predefined
localips   Predefined
localnets  Predefined
none       Predefined

- dnsrecord

Configures zone resource records:

tux > sudo yast dns-server dnsrecord add zone=example.org query=office.example.org type=NS value=ns3

For a complete list of options, run yast dns-server dnsrecord help.

- forwarders

Configures DNS forwarders:

tux > sudo yast dns-server forwarders add ip=10.0.0.100
tux > sudo yast dns-server forwarders show
[...]
Forwarder IP
------------
10.0.0.100

For a complete list of options, run yast dns-server forwarders help.

- host

Handles 'A' and its related 'PTR' record at once:

tux > sudo yast dns-server host show zone=example.org

For a complete list of options, run yast dns-server host help.

- logging

Configures logging settings:

tux > sudo yast dns-server logging set updates=no transfers=yes

For a complete list of options, run yast dns-server logging help.

- mailserver

Configures zone mail servers:

tux > sudo yast dns-server mailserver add zone=example.org mx=mx1 priority=100

For a complete list of options, run yast dns-server mailserver help.

- nameserver

Configures zone name servers:

tux > sudo yast dns-server nameserver add zone=example.com ns=ns1

For a complete list of options, run yast dns-server nameserver help.

- soa

Configures the start of authority (SOA) record:

tux > sudo yast dns-server soa set zone=example.org serial=2006081623 ttl=2D3H20S

For a complete list of options, run yast dns-server soa help.

- startup

Manages the DNS server service:

tux > sudo yast dns-server startup atboot

For a complete list of options, run yast dns-server startup help.

- transport

Configures zone transport rules. For a complete list of options, run yast dns-server transport help.

- zones

Manages DNS zones:

tux > sudo yast dns-server zones add name=example.org zonetype=master

For a complete list of options, run yast dns-server zones help.
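The dns-server commands can be combined in a script to set up a new zone in one go. A minimal sketch that uses only the commands shown above; the function name setup_zone, its argument order, and the default MX priority of 100 (taken from the mailserver example) are illustrative choices, not part of YaST.

```shell
#!/usr/bin/env bash
# Hypothetical helper: create a master zone, add its name server and mail
# server, and start the DNS server at boot. Stops at the first failing call.
# Usage: setup_zone ZONE NAMESERVER MAILSERVER [MX_PRIORITY]
setup_zone() {
    local zone=$1 ns=$2 mx=$3 prio=${4:-100}
    sudo yast dns-server zones add name="$zone" zonetype=master &&
    sudo yast dns-server nameserver add zone="$zone" ns="$ns" &&
    sudo yast dns-server mailserver add zone="$zone" mx="$mx" priority="$prio" &&
    sudo yast dns-server startup atboot
}
```

For example, setup_zone example.org ns1 mx1 reproduces the zones, nameserver, and mailserver examples above for a single zone.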

1.4.3.6 yast disk

Prints information about all disks or partitions. The only supported command is list followed by either of the following options:

- disks

Lists all configured disks in the system:

tux > sudo yast disk list disks
Device   | Size       | FS Type | Mount Point | Label | Model
---------+------------+---------+-------------+-------+-------------
/dev/sda | 119.24 GiB |         |             |       | SSD 840
/dev/sdb | 60.84 GiB  |         |             |       | WD1003FBYX-0

- partitions

Lists all partitions in the system:

tux > sudo yast disk list partitions
Device         | Size       | FS Type | Mount Point | Label | Model
---------------+------------+---------+-------------+-------+------
/dev/sda1      | 1.00 GiB   | Ext2    | /boot       |       |
/dev/sdb1      | 1.00 GiB   | Swap    | swap        |       |
/dev/sdc1      | 698.64 GiB | XFS     | /mnt/extra  |       |
/dev/vg00/home | 580.50 GiB | Ext3    | /home       |       |
/dev/vg00/root | 100.00 GiB | Ext3    | /           |       |
[...]
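The "|"-separated table can be post-processed with standard tools, for example to list only partitions that have a mount point. A minimal sketch, assuming the layout shown above (a header line, a separator line, then one row per partition); mounted_partitions is a hypothetical name.

```shell
#!/usr/bin/env bash
# mounted_partitions: read the table printed by "yast disk list partitions"
# from stdin and print "DEVICE MOUNTPOINT" for every row with a mount point.
# Assumes the layout shown above: a header line, a separator line, then one
# "|"-separated row per partition.
mounted_partitions() {
    awk -F'|' '
        NR > 2 && NF >= 4 {                 # skip header, separator and "[...]"
            dev = $1; mnt = $4
            gsub(/^[ \t]+|[ \t]+$/, "", dev)
            gsub(/^[ \t]+|[ \t]+$/, "", mnt)
            if (mnt != "") print dev, mnt
        }'
}
```

Usage: sudo yast disk list partitions | mounted_partitions. If your YaST version prints additional lines before the table, the NR > 2 condition needs adjusting.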

1.4.3.7 yast ftp-server

Configures FTP server settings. yast ftp-server accepts

the following options:

- SSL, TLS

Controls secure connections via SSL and TLS. SSL options are valid for the

vsftpdonly.tux >sudoyast ftp-server SSL enabletux >sudoyast ftp-server TLS disable- access

Configures access permissions:

tux >sudoyast ftp-server access authen_onlyFor a complete list of options, run

yast ftp-server access help.- anon_access

Configures access permissions for anonymous users:

tux >sudoyast ftp-server anon_access can_uploadFor a complete list of options, run

yast ftp-server anon_access help.- anon_dir

Specifies the directory for anonymous users. The directory must already exist on the server:

tux > sudo yast ftp-server anon_dir set_anon_dir=/srv/ftp

For a complete list of options, run yast ftp-server anon_dir help.

- chroot

Controls the change root environment (chroot):

tux > sudo yast ftp-server chroot enable
tux > sudo yast ftp-server chroot disable

- idle-time

Sets the maximum idle time in minutes before the FTP server terminates the current connection:

tux > sudo yast ftp-server idle-time set_idle_time=15

- logging

Determines whether to save the log messages to a log file:

tux > sudo yast ftp-server logging enable
tux > sudo yast ftp-server logging disable

- max_clients

Specifies the maximum number of concurrently connected clients:

tux > sudo yast ftp-server max_clients set_max_clients=1500

- max_clients_ip

Specifies the maximum number of concurrently connected clients per IP address:

tux > sudo yast ftp-server max_clients_ip set_max_clients=20

- max_rate_anon

Specifies the maximum data transfer rate permitted for anonymous clients (KB/s):

tux > sudo yast ftp-server max_rate_anon set_max_rate=10000

- max_rate_authen

Specifies the maximum data transfer rate permitted for locally authenticated users (KB/s):

tux > sudo yast ftp-server max_rate_authen set_max_rate=10000

- port_range

Specifies the port range for passive connection replies:

tux > sudo yast ftp-server port_range set_min_port=20000 set_max_port=30000

For a complete list of options, run yast ftp-server port_range help.

- show

Displays FTP server settings.

- startup

Controls the FTP start-up method:

tux > sudo yast ftp-server startup atboot

For a complete list of options, run yast ftp-server startup help.

- umask

Specifies the file umask for authenticated:anonymous users:

tux > sudo yast ftp-server umask set_umask=177:077

- welcome_message

Specifies the text to display when someone connects to the FTP server:

tux > sudo yast ftp-server welcome_message set_message="hello everybody"
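To see what a value such as set_umask=177:077 from the umask option means for uploaded files, apply each umask to the mode the server requests for new files. This sketch assumes a requested mode of 0666, which is vsftpd's default file_open_mode; the function names are hypothetical and only illustrate the arithmetic.

```python
# Assumed: vsftpd's default file_open_mode, the mode requested for new uploads.
DEFAULT_FILE_MODE = 0o666


def effective_mode(umask, requested=DEFAULT_FILE_MODE):
    """Return the permission bits left after `umask` (an octal string) is applied."""
    return requested & ~int(umask, 8)


def parse_ftp_umask(value):
    """Split 'authenticated:anonymous' as passed to set_umask into two modes."""
    authenticated, anonymous = value.split(":")
    return effective_mode(authenticated), effective_mode(anonymous)


authenticated_mode, anonymous_mode = parse_ftp_umask("177:077")
print(oct(authenticated_mode), oct(anonymous_mode))  # 0o600 0o600
```

Both values yield 0600 for regular files because 0666 carries no execute bits. The two umasks would differ for something created with mode 0777, such as a directory: effective_mode("177", 0o777) gives 0o600, while effective_mode("077", 0o777) gives 0o700.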

1.4.3.8 yast http-server

Configures the HTTP server (Apache2). yast http-server accepts the following commands:

- configure

Configures the HTTP server host settings:

tux > sudo yast http-server configure host=main servername=www.example.com \
serveradmin=admin@example.com

For a complete list of options, run yast http-server configure help.

- hosts

Configures virtual hosts:

tux > sudo yast http-server hosts create servername=www.example.com \
serveradmin=admin@example.com documentroot=/var/www

For a complete list of options, run yast http-server hosts help.

- listen

Specifies the ports and network addresses where the HTTP server should listen:

tux > sudo yast http-server listen add=81
tux > sudo yast http-server listen list
Listen Statements:
==================
:80
:81
tux > sudo yast http-server listen delete=80

For a complete list of options, run yast http-server listen help.

- mode

Enables or disables the wizard mode:

tux > sudo yast http-server mode wizard=on

- modules

Controls the Apache2 server modules:

tux > sudo yast http-server modules enable=php5,rewrite
tux > sudo yast http-server modules disable=ssl
tux > sudo yast http-server modules list
[...]
Enabled rewrite
Disabled ssl
Enabled php5
[...]

1.4.3.9 yast kdump

Configures kdump settings. For more information on kdump, refer to Book “System Analysis and Tuning Guide”, Chapter 17 “Kexec and Kdump”, Section 17.7 “Basic Kdump Configuration”. yast kdump accepts the following commands:

- copykernel

Copies the kernel into the dump directory.

- customkernel

Specifies the kernel_string part of the name of the custom kernel. The naming scheme is /boot/vmlinu[zx]-kernel_string[.gz].

tux > sudo yast kdump customkernel kernel=kdump

For a complete list of options, run yast kdump customkernel help.

- dumpformat

Specifies the (compression) format of the dump image. Available formats are 'none', 'ELF', 'compressed', or 'lzo':

tux > sudo yast kdump dumpformat dump_format=ELF

- dumplevel

Specifies the dump level number in the range from 0 to 31:

tux > sudo yast kdump dumplevel dump_level=24

- dumptarget

Specifies the destination for saving dump images:

tux > sudo yast kdump dumptarget target=ssh server=name_server port=22 \
dir=/var/log/dump user=user_name

For a complete list of options, run yast kdump dumptarget help.

- immediatereboot

Controls whether the system should reboot immediately after saving the core in the kdump kernel:

tux > sudo yast kdump immediatereboot enable
tux > sudo yast kdump immediatereboot disable

- keepolddumps

Specifies how many old dump images are kept. Specify zero to keep them all:

tux > sudo yast kdump keepolddumps no=5

- kernelcommandline

Specifies the command line that is passed to the kdump kernel:

tux > sudo yast kdump kernelcommandline command="ro root=LABEL=/"

- kernelcommandlineappend

Specifies the text to append to the default command line string:

tux > sudo yast kdump kernelcommandlineappend command="ro root=LABEL=/"

- notificationcc

Specifies one or more e-mail addresses, separated by spaces, for sending copies of notification messages:

tux > sudo yast kdump notificationcc email="user1@example.com user2@example.com"

- notificationto

Specifies one or more e-mail addresses, separated by spaces, for sending notification messages:

tux > sudo yast kdump notificationto email="user1@example.com user2@example.com"

- show

Displays kdump settings:

tux > sudo yast kdump show
Kdump is disabled
Dump Level: 31
Dump Format: compressed
Dump Target Settings
target: file
file directory: /var/crash
Kdump immediate reboots: Enabled
Numbers of old dumps: 5

- smtppass

Specifies the file with the plain text SMTP password used for sending notification messages:

tux > sudo yast kdump smtppass pass=/path/to/file

- smtpserver

Specifies the SMTP server host name used for sending notification messages:

tux > sudo yast kdump smtpserver server=smtp.server.com

- smtpuser

Specifies the SMTP user name used for sending notification messages:

tux > sudo yast kdump smtpuser user=smtp_user

- startup

Enables or disables start-up options:

tux > sudo yast kdump startup enable alloc_mem=128,256
tux > sudo yast kdump startup disable
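The dump level accepted by the dumplevel command is a bit mask: each bit tells the dump tool to leave out one class of memory pages. Assuming kdump passes the value unchanged to makedumpfile -d (whose documented bit values are used below), this sketch decodes a level; the function name excluded_pages is hypothetical.

```python
# Bit values of the makedumpfile dump level (makedumpfile -d). Each set bit
# excludes one class of memory pages from the dump.
DUMP_LEVEL_BITS = {
    1: "zero pages",
    2: "cache pages without private flag",
    4: "cache pages with private flag",
    8: "user process data pages",
    16: "free pages",
}


def excluded_pages(dump_level):
    """Return the page classes that a dump level between 0 and 31 leaves out."""
    if not 0 <= dump_level <= 31:
        raise ValueError("dump level must be between 0 and 31")
    return [name for bit, name in DUMP_LEVEL_BITS.items() if dump_level & bit]


# Level 24 from the dumplevel example is 8 + 16:
print(excluded_pages(24))  # ['user process data pages', 'free pages']
```

Level 0 keeps every page (largest dump), and level 31, the value shown in the kdump show output, excludes all five classes (smallest dump).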

1.4.3.10 yast keyboard

Configures the system keyboard for virtual consoles. It does not affect the keyboard settings in graphical desktop environments, such as GNOME or KDE. yast keyboard accepts the following commands:

- list

Lists all available keyboard layouts.

- set

Activates a new keyboard layout:

tux > sudo yast keyboard set layout=czech

- summary

Displays the current keyboard configuration.

1.4.3.11 yast lan

Configures network cards. yast lan accepts the following commands:

- add

Configures a new network card:

tux > sudo yast lan add name=vlan50 ethdevice=eth0 bootproto=dhcp

For a complete list of options, run yast lan add help.

- delete

Deletes an existing network card:

tux > sudo yast lan delete id=0

- edit

Changes the configuration of an existing network card:

tux > sudo yast lan edit id=0 bootproto=dhcp

- list

Displays a summary of network card configuration:

tux > sudo yast lan list
id  name, bootproto
0   Ethernet Card 0, NONE
1   Network Bridge, DHCP

1.4.3.12 yast language

Configures system languages. yast language accepts the following commands:

- list

Lists all available languages.

- set

Specifies the main system language and secondary languages:

tux > sudo yast language set lang=cs_CZ languages=en_US,es_ES no_packages

1.4.3.13 yast mail

Displays the configuration of the mail system:

tux > sudo yast mail summary

1.4.3.14 yast nfs

Controls the NFS client. yast nfs accepts the following commands:

- add

Adds a new NFS mount:

tux > sudo yast nfs add spec=remote_host:/path/to/nfs/share file=/local/mount/point

For a complete list of options, run yast nfs add help.

- delete

Deletes an existing NFS mount:

tux > sudo yast nfs delete spec=remote_host:/path/to/nfs/share file=/local/mount/point

For a complete list of options, run yast nfs delete help.

- edit

Changes an existing NFS mount:

tux > sudo yast nfs edit spec=remote_host:/path/to/nfs/share \
file=/local/mount/point type=nfs4

For a complete list of options, run yast nfs edit help.

- list

Lists existing NFS mounts:

tux > sudo yast nfs list
Server            Remote File System     Mount Point    Options
----------------------------------------------------------------
nfs.example.com   /mnt                   /nfs/mnt       nfs
nfs.example.com   /home/tux/nfs_share    /nfs/tux       nfs

1.4.3.15 yast nfs-server

Configures the NFS server. yast nfs-server accepts the following commands:

- add

Adds a directory to export:

tux > sudo yast nfs-server add mountpoint=/nfs/export hosts=*.allowed_hosts.com

For a complete list of options, run yast nfs-server add help.

- delete

Deletes a directory from the NFS export:

tux > sudo yast nfs-server delete mountpoint=/nfs/export

- set

Specifies additional parameters for the NFS server:

tux > sudo yast nfs-server set enablev4=yes security=yes

For a complete list of options, run yast nfs-server set help.

- start

Starts the NFS server service:

tux > sudo yast nfs-server start

- stop

Stops the NFS server service:

tux > sudo yast nfs-server stop

- summary

Displays a summary of the NFS server configuration:

tux > sudo yast nfs-server summary
NFS server is enabled
NFS Exports
* /mnt
* /home
NFSv4 support is enabled.
The NFSv4 domain for idmapping is localdomain.
NFS Security using GSS is enabled.

1.4.3.16 yast nis

Configures the NIS client. yast nis accepts the following commands:

- configure

Changes global settings of a NIS client:

tux > sudo yast nis configure server=nis.example.com broadcast=yes

For a complete list of options, run yast nis configure help.

- disable

Disables the NIS client:

tux > sudo yast nis disable

- enable

Enables your machine as a NIS client:

tux > sudo yast nis enable server=nis.example.com broadcast=yes automounter=yes

For a complete list of options, run yast nis enable help.

- find

Shows available NIS servers for a given domain:

tux > sudo yast nis find domain=nisdomain.com

- summary

Displays a configuration summary of a NIS client.

1.4.3.17 yast nis-server

Configures a NIS server. yast nis-server accepts the following commands:

- master

Configures a NIS master server:

tux > sudo yast nis-server master domain=nisdomain.com yppasswd=yes

For a complete list of options, run yast nis-server master help.

- slave

Configures a NIS slave server:

tux > sudo yast nis-server slave domain=nisdomain.com master_ip=10.100.51.65

For a complete list of options, run yast nis-server slave help.

- stop

Stops a NIS server:

tux > sudo yast nis-server stop

- summary

Displays a configuration summary of a NIS server:

tux > sudo yast nis-server summary

1.4.3.18 yast proxy

Configures proxy settings. yast proxy accepts the following commands:

- authentication

Specifies the authentication options for the proxy:

tux > sudo yast proxy authentication username=tux password=secret

For a complete list of options, run yast proxy authentication help.

- enable, disable

Enables or disables proxy settings.

- set

Changes the current proxy settings:

tux > sudo yast proxy set https=proxy.example.com

For a complete list of options, run yast proxy set help.

- summary

Displays proxy settings.

1.4.3.19 yast rdp

Controls remote desktop settings. yast rdp accepts the following commands:

- allow

Allows remote access to the server's desktop:

tux > sudo yast rdp allow set=yes

- list

Displays the remote desktop configuration summary.

1.4.3.20 yast samba-client

Configures the Samba client settings. yast samba-client accepts the following commands:

- configure

Changes global settings of Samba:

tux > sudo yast samba-client configure workgroup=FAMILY

- isdomainmember

Checks whether the machine is a member of a domain:

tux > sudo yast samba-client isdomainmember domain=SMB_DOMAIN

- joindomain

Makes the machine a member of a domain:

tux > sudo yast samba-client joindomain domain=SMB_DOMAIN user=username password=pwd

- winbind

Enables or disables Winbind services (the winbindd daemon):

tux > sudo yast samba-client winbind enable
tux > sudo yast samba-client winbind disable

1.4.3.21 yast samba-server

Configures Samba server settings. yast samba-server accepts the following commands:

- backend

Specifies the back-end for storing user information:

tux > sudo yast samba-server backend smbpasswd

For a complete list of options, run yast samba-server backend help.

- configure

Configures global settings of the Samba server:

tux > sudo yast samba-server configure workgroup=FAMILY description='Home server'

For a complete list of options, run yast samba-server configure help.

- list

Displays a list of available shares:

tux > sudo yast samba-server list
Status     Type      Name
==============================
Disabled   Disk      profiles
Enabled    Disk      print$
Enabled    Disk      homes
Disabled   Disk      groups
Enabled    Disk      movies
Enabled    Printer   printers

- role

Specifies the role of the Samba server:

tux > sudo yast samba-server role standalone

For a complete list of options, run yast samba-server role help.

- service

Enables or disables the Samba services (smb and nmb):

tux > sudo yast samba-server service enable
tux > sudo yast samba-server service disable

- share

Manipulates a single Samba share:

tux > sudo yast samba-server share name=movies browseable=yes guest_ok=yes

For a complete list of options, run yast samba-server share help.

1.4.3.22 yast security

Controls the security level of the host. yast security accepts the following commands:

- level

Specifies the security level of the host:

tux > sudo yast security level server

For a complete list of options, run yast security level help.

- set

Sets the value of a specific option:

tux > sudo yast security set passwd=sha512 crack=yes

For a complete list of options, run yast security set help.

- summary

Displays a summary of the current security configuration:

tux > sudo yast security summary

1.4.3.23 yast sound

Configures sound card settings. yast sound accepts the following commands:

- add

Configures a new sound card. Without any parameters, the command adds the first detected card.

tux > sudo yast sound add card=0 volume=75

For a complete list of options, run yast sound add help.

- channels

Lists available volume channels of a sound card:

tux > sudo yast sound channels card=0
Master 75
PCM 100

- modules

Lists all available sound kernel modules:

tux > sudo yast sound modules
snd-atiixp ATI IXP AC97 controller (snd-atiixp)
snd-atiixp-modem ATI IXP MC97 controller (snd-atiixp-modem)
snd-virtuoso Asus Virtuoso driver (snd-virtuoso)
[...]

- playtest

Plays a test sound on a sound card:

tux > sudo yast sound playtest card=0

- remove

Removes a configured sound card:

tux > sudo yast sound remove card=0
tux > sudo yast sound remove all

- set

Specifies new values for a sound card:

tux > sudo yast sound set card=0 volume=80

- show

Displays detailed information about a sound card:

tux > sudo yast sound show card=0
Parameters of card 'ThinkPad X240' (using module snd-hda-intel):
align_buffer_size
  Force buffer and period sizes to be multiple of 128 bytes.
bdl_pos_adj
  BDL position adjustment offset.
beep_mode
  Select HDA Beep registration mode (0=off, 1=on) (default=1).
  Default Value: 0
enable_msi
  Enable Message Signaled Interrupt (MSI)
[...]

- summary

Prints a configuration summary for all sound cards on the system:

tux > sudo yast sound summary

- volume

Specifies the volume level of a sound card:

tux > sudo yast sound volume card=0 play

1.4.3.24 yast sysconfig

Controls the variables in files under /etc/sysconfig. yast sysconfig accepts the following commands:

- clear

Sets a variable to an empty value:

tux > sudo yast sysconfig clear=POSTFIX_LISTEN

Tip: Variable in Multiple Files

If the variable is available in several files, use the VARIABLE_NAME$FILE_NAME syntax:

tux > sudo yast sysconfig clear=CONFIG_TYPE$/etc/sysconfig/mail

- details

Displays detailed information about a variable:

tux > sudo yast sysconfig details variable=POSTFIX_LISTEN
Description:
Value:
File: /etc/sysconfig/postfix
Possible Values: Any value
Default Value:
Configuration Script: postfix
Description: Comma separated list of IP's
NOTE: If not set, LISTEN on all interfaces

- list

Displays a summary of modified variables. Use all to list all variables and their values:

tux > sudo yast sysconfig list all
AOU_AUTO_AGREE_WITH_LICENSES="false"
AOU_ENABLE_CRONJOB="true"
AOU_INCLUDE_RECOMMENDS="false"
[...]

- set

Sets a value for a variable:

tux > sudo yast sysconfig set DISPLAYMANAGER=gdm

Tip: Variable in Multiple Files

If the variable is available in several files, use the VARIABLE_NAME$FILE_NAME syntax:

tux > sudo yast sysconfig set CONFIG_TYPE$/etc/sysconfig/mail=advanced
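To find out whether a variable is defined in more than one file, and therefore needs the VARIABLE_NAME$FILE_NAME form described in the tips above, you can scan /etc/sysconfig yourself. This is a rough sketch that only looks for shell-style assignments such as CONFIG_TYPE="advanced" at the start of a line; it does not reproduce how YaST itself resolves variables, and the helper names are hypothetical.

```python
import os
import re


def files_defining(variable, root="/etc/sysconfig"):
    """Return sorted paths of files under `root` that assign `variable`.

    Only lines of the form VARIABLE=... (optionally indented) count;
    commented-out assignments such as '# VARIABLE=...' are ignored.
    """
    assignment = re.compile(r"^\s*" + re.escape(variable) + r"=", re.MULTILINE)
    found = []
    for dirpath, _dirnames, filenames in os.walk(root):
        for name in filenames:
            path = os.path.join(dirpath, name)
            try:
                with open(path, encoding="utf-8", errors="replace") as handle:
                    if assignment.search(handle.read()):
                        found.append(path)
            except OSError:
                continue  # unreadable file; skip it
    return sorted(found)


def sysconfig_keys(variable, root="/etc/sysconfig"):
    """Keys for yast sysconfig: the plain name if unambiguous, else NAME$FILE forms."""
    files = files_defining(variable, root)
    if len(files) <= 1:
        return [variable]
    return [f"{variable}${path}" for path in files]


print(sysconfig_keys("CONFIG_TYPE"))
```

When you paste such a key into a shell command, quoting it in single quotes (for example 'CONFIG_TYPE$/etc/sysconfig/mail') keeps the shell from ever treating the $ as the start of a variable expansion.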

1.4.3.25 yast tftp-server

Configures a TFTP server. yast tftp-server accepts the following commands:

- directory

Specifies the directory of the TFTP server:

tux > sudo yast tftp-server directory path=/srv/tftp
tux > sudo yast tftp-server directory list
Directory Path: /srv/tftp

- status

Controls the status of the TFTP server service:

tux > sudo yast tftp-server status disable
tux > sudo yast tftp-server status show
Service Status: false
tux > sudo yast tftp-server status enable

1.4.3.26 yast timezone

Configures the time zone. yast timezone accepts the following commands:

- list

Lists all available time zones grouped by region:

tux > sudo yast timezone list
Region: Africa
Africa/Abidjan (Abidjan)
Africa/Accra (Accra)
Africa/Addis_Ababa (Addis Ababa)
[...]

- set

Specifies new values for the time zone configuration:

tux > sudo yast timezone set timezone=Europe/Prague hwclock=local

- summary

Displays the time zone configuration summary:

tux > sudo yast timezone summary
Current Time Zone: Europe/Prague
Hardware Clock Set To: Local time
Current Time and Date: Mon 12. March 2018, 11:36:21 CET

1.4.3.27 yast users

Manages user accounts. yast users accepts the following commands:

- add

Adds a new user:

tux > sudo yast users add username=user1 password=secret home=/home/user1

For a complete list of options, run yast users add help.

- delete

Deletes an existing user account:

tux > sudo yast users delete username=user1 delete_home

For a complete list of options, run yast users delete help.

- edit

Changes an existing user account:

tux > sudo yast users edit username=user1 password=new_secret

For a complete list of options, run yast users edit help.

- list

Lists existing users filtered by user type:

tux > sudo yast users list system

For a complete list of options, run yast users list help.

- show

Displays details about a user:

tux > sudo yast users show username=wwwrun
Full Name: WWW daemon apache
List of Groups: www
Default Group: wwwrun
Home Directory: /var/lib/wwwrun
Login Shell: /sbin/nologin
Login Name: wwwrun
UID: 456

For a complete list of options, run yast users show help.

2 Managing Software with Command Line Tools

This chapter describes Zypper and RPM, two command line tools for managing software. For a definition of the terminology used in this context (for example, repository, patch, or update), refer to Book “Start-Up”, Chapter 10 “Installing or Removing Software”, Section 10.1 “Definition of Terms”.

2.1 Using Zypper

Zypper is a command line package manager for installing, updating and removing packages. It also manages repositories. It is especially useful for accomplishing remote software management tasks or managing software from shell scripts.

2.1.1 General Usage

The general syntax of Zypper is:

zypper [--global-options] COMMAND [--command-options] [arguments]

The components enclosed in brackets are not required. See zypper help for a list of general options and all commands. To get help for a specific command, type zypper help COMMAND.

- Zypper Commands

The simplest way to execute Zypper is to type its name, followed by a command. For example, to apply all needed patches to the system, use:

tux > sudo zypper patch

- Global Options

Additionally, you can choose from one or more global options by typing them immediately before the command:

tux > sudo zypper --non-interactive patch

In the above example, the option --non-interactive means that the command is run without asking anything (automatically applying the default answers).

- Command-Specific Options

To use options that are specific to a particular command, type them immediately after the command:

tux > sudo zypper patch --auto-agree-with-licenses

In the above example, --auto-agree-with-licenses is used to apply all needed patches to a system without you being asked to confirm any licenses. Instead, licenses are accepted automatically.

- Arguments

Some commands require one or more arguments. For example, when using the command install, you need to specify which package or packages you want to install:

tux > sudo zypper install mplayer

Some options also require a single argument. The following command lists all known patterns:

tux > zypper search -t pattern

You can combine all of the above. For example, the following command installs the mc and vim packages from the factory repository while being verbose:

tux > sudo zypper -v install --from factory mc vim

The --from option keeps all repositories enabled (for solving any dependencies) while requesting the package from the specified repository. --repo is an alias for --from, and you may use either one.

Most Zypper commands have a --dry-run option that simulates the given command. It can be used for test purposes.

tux > sudo zypper remove --dry-run MozillaFirefox

Zypper supports the global --userdata STRING option. You can specify a string with this option, which gets written to Zypper's log files and plug-ins (such as the Btrfs plug-in). It can be used to mark and identify transactions in log files.

tux > sudo zypper --userdata STRING patch

2.1.2 Using Zypper Subcommands

Zypper subcommands are executables that are stored in the zypper_execdir, /usr/lib/zypper/commands. If a subcommand is not found in the zypper_execdir, Zypper automatically searches the rest of your $PATH for it. This enables writing your own local extensions and storing them in userspace.

Executing subcommands in the Zypper shell and using global Zypper options with subcommands are not supported.
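The lookup order described above (zypper_execdir first, then the rest of $PATH) can be illustrated with a short sketch. It assumes that a subcommand NAME is provided by an executable called zypper-NAME; that naming is an assumption here, and find_subcommand is a hypothetical helper, not part of Zypper.

```python
import shutil

ZYPPER_EXECDIR = "/usr/lib/zypper/commands"


def find_subcommand(name, execdir=ZYPPER_EXECDIR, search_path=None):
    """Locate the executable behind `zypper NAME`, or return None.

    Assumption: subcommand executables are named 'zypper-NAME'.
    The zypper_execdir is searched first; only if nothing is found there
    are the directories of `search_path` searched.
    """
    executable = "zypper-" + name
    found = shutil.which(executable, path=execdir)
    if found is None:
        # search_path=None makes shutil.which fall back to the PATH variable.
        found = shutil.which(executable, path=search_path)
    return found


print(find_subcommand("migration"))
```

Because the execdir is checked first, a local extension on $PATH cannot shadow a subcommand of the same name that is shipped in /usr/lib/zypper/commands.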

List your available subcommands:

tux > zypper help subcommand
[...]
Available zypper subcommands in '/usr/lib/zypper/commands'

appstream-cache
lifecycle
migration
search-packages

Zypper subcommands available from elsewhere on your $PATH

<none>

View the help screen for a subcommand:

tux > zypper help appstream-cache

2.1.3 Installing and Removing Software with Zypper

To install or remove packages, use the following commands:

tux > sudo zypper install PACKAGE_NAME
tux > sudo zypper remove PACKAGE_NAME

Warning: Do Not Remove Mandatory System Packages

Do not remove mandatory system packages such as glibc, zypper, or kernel. If they are removed, the system can become unstable or stop working altogether.

2.1.3.1 Selecting Which Packages to Install or Remove

There are various ways to address packages with the commands zypper install and zypper remove.

- By Exact Package Name

tux > sudo zypper install MozillaFirefox

- By Exact Package Name and Version Number

tux > sudo zypper install MozillaFirefox-52.2

- By Repository Alias and Package Name

tux > sudo zypper install mozilla:MozillaFirefox

Where mozilla is the alias of the repository from which to install.

- By Package Name Using Wild Cards

You can select all packages that have names starting or ending with a certain string. Use wild cards with care, especially when removing packages. The following command will install all packages starting with “Moz”:

tux > sudo zypper install 'Moz*'

Tip: Removing all -debuginfo Packages

When debugging a problem, you sometimes need to temporarily install many -debuginfo packages, which give you more information about running processes. After your debugging session, clean up the environment by running the following:

tux > sudo zypper remove '*-debuginfo'

- By Capability

Capabilities come in handy when you want to install a package without knowing its name. For example, the following command installs the package MozillaFirefox:

tux > sudo zypper install firefox

- By Capability, Hardware Architecture, or Version

Together with a capability, you can specify a hardware architecture and a version:

The name of the desired hardware architecture is appended to the capability after a full stop. For example, to specify the AMD64/Intel 64 architectures (which Zypper names x86_64), use:

tux > sudo zypper install 'firefox.x86_64'

Versions must be appended to the end of the string and must be preceded by an operator: < (less than), <= (less than or equal), = (equal), >= (greater than or equal), > (greater than).

tux > sudo zypper install 'firefox>=74.2'

You can also combine a hardware architecture and version requirement:

tux > sudo zypper install 'firefox.x86_64>=74.2'

- By Path to the RPM file

You can also specify a local or remote path to a package:

tux > sudo zypper install /tmp/install/MozillaFirefox.rpm
tux > sudo zypper install http://download.example.com/MozillaFirefox.rpm
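To illustrate how a version operator such as >= decides whether a candidate satisfies a requirement like 'firefox>=74.2', here is a simplified sketch of RPM-style version comparison. It is not Zypper's implementation (Zypper relies on libsolv, which also handles epochs, releases, and the special ~ and ^ characters); the function names are hypothetical. The key point is that versions are compared segment by segment, numerically where possible, so 74.10 is newer than 74.2.

```python
import re


def _segments(version):
    """Split a version into numeric and alphabetic segments; separators are dropped."""
    return re.findall(r"\d+|[A-Za-z]+", version)


def vercmp(a, b):
    """Simplified RPM-style comparison of two version strings: -1, 0, or 1.

    Ignores epoch and release as well as the special '~' and '^' characters.
    """
    sa, sb = _segments(a), _segments(b)
    for x, y in zip(sa, sb):
        x_num, y_num = x.isdigit(), y.isdigit()
        if x_num != y_num:
            return 1 if x_num else -1  # a numeric segment beats an alphabetic one
        if x_num:
            x, y = int(x), int(y)  # numeric segments compare as numbers
        if x != y:
            return 1 if x > y else -1
    # All shared segments are equal: the version with more segments is newer.
    return (len(sa) > len(sb)) - (len(sa) < len(sb))


OPERATORS = {
    "<": lambda c: c < 0,
    "<=": lambda c: c <= 0,
    "=": lambda c: c == 0,
    ">=": lambda c: c >= 0,
    ">": lambda c: c > 0,
}


def satisfies(candidate, operator, required):
    """True if `candidate` meets the requirement `operator required`."""
    return OPERATORS[operator](vercmp(candidate, required))


print(satisfies("74.10", ">=", "74.2"))  # True
```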

2.1.3.2 Combining Installation and Removal of Packages

To install and remove packages simultaneously, use the +/- modifiers. To install emacs and simultaneously remove vim, use:

tux > sudo zypper install emacs -vim

To remove emacs and simultaneously install vim, use:

tux > sudo zypper remove emacs +vim

To prevent a package name starting with - from being interpreted as a command option, always use it as the second argument. If this is not possible, precede it with --:

tux > sudo zypper install -emacs +vim       # Wrong
tux > sudo zypper install vim -emacs        # Correct
tux > sudo zypper install -- -emacs +vim    # Correct
tux > sudo zypper remove emacs +vim         # Correct

2.1.3.3 Cleaning Up Dependencies of Removed Packages

To automatically remove any packages that become unneeded after removing the specified package, use the --clean-deps option:

tux > sudo zypper rm --clean-deps PACKAGE_NAME

2.1.3.4 Using Zypper in Scripts

By default, Zypper asks for a confirmation before installing or removing a selected package, or when a problem occurs. You can override this behavior using the --non-interactive option. This option must be given before the actual command (install, remove, and patch), as can be seen in the following:

tux > sudo zypper --non-interactive install PACKAGE_NAME

This option allows the use of Zypper in scripts and cron jobs.
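A script should also check how Zypper exited instead of assuming success. The following sketch wraps a non-interactive call and raises an error on unexpected exit codes. Which non-zero codes are acceptable depends on the command; see the EXIT CODES section of zypper(8). The wrapper name run_zypper and its allowed_codes parameter are hypothetical, and the runner parameter exists only so the function can be exercised without a real zypper binary.

```python
import subprocess


def run_zypper(command, *arguments, allowed_codes=(0,), runner=subprocess.run):
    """Run a Zypper command without prompts; fail loudly on unexpected exit codes.

    --non-interactive is placed before the command, as Zypper requires.
    """
    argv = ["zypper", "--non-interactive", command, *arguments]
    result = runner(argv, capture_output=True, text=True)
    if result.returncode not in allowed_codes:
        raise RuntimeError(
            f"{' '.join(argv)} exited with {result.returncode}: {result.stderr.strip()}"
        )
    return result


# Example (as root on a system with Zypper):
# run_zypper("install", "vim")
```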

2.1.3.5 Installing or Downloading Source Packages

To install the corresponding source package of a package, use:

tux > zypper source-install PACKAGE_NAME

When executed as root, the default location to install source packages is /usr/src/packages/; when run as a user, it is ~/rpmbuild. These values can be changed in your local rpm configuration.

This command also installs the build dependencies of the specified package. If you do not want this, add the switch -D:

tux > sudo zypper source-install -D PACKAGE_NAME

To install only the build dependencies, use -d:

tux > sudo zypper source-install -d PACKAGE_NAME

Of course, this will only work if you have the repository with the source packages enabled in your repository list (it is added by default, but not enabled). See Section 2.1.6, “Managing Repositories with Zypper” for details on repository management.

A list of all source packages available in your repositories can be obtained with:

tux > zypper search -t srcpackage

You can also download source packages for all installed packages to a local directory. To download source packages, use:

tux > zypper source-download

The default download directory is /var/cache/zypper/source-download. You can change it using the --directory option. To only show missing or extraneous packages without downloading or deleting anything, use the --status option. To delete extraneous source packages, use the --delete option. To disable deleting, use the --no-delete option.

2.1.3.6 Installing Packages from Disabled Repositories

Normally you can only install or refresh packages from enabled repositories. The --plus-content TAG option helps you specify repositories to be refreshed, temporarily enabled during the current Zypper session, and disabled after it completes.

For example, to enable repositories that may provide additional -debuginfo or -debugsource packages, use --plus-content debug. You can specify this option multiple times.

To temporarily enable such 'debug' repositories to install a specific -debuginfo package, use the option as follows:

tux > sudo zypper --plus-content debug \
install "debuginfo(build-id)=eb844a5c20c70a59fc693cd1061f851fb7d046f4"

The build-id string is reported by gdb for missing debuginfo packages.

Note: Disabled Installation Media

Repositories from the openSUSE Leap installation media are still configured but disabled after successful installation. You can use the --plus-content option to install packages from the installation media instead of the online repositories. Before calling zypper, ensure the media is available, for example by inserting the DVD into the computer's drive.

2.1.3.7 Utilities

To verify whether all dependencies are still fulfilled and to repair missing dependencies, use:

tux > zypper verify

In addition to dependencies that must be fulfilled, some packages “recommend” other packages. These recommended packages are only installed if actually available and installable. If recommended packages were made available after the recommending package has been installed (by adding additional packages or hardware), use the following command:

tux > sudo zypper install-new-recommends

This command is very useful after plugging in a Web cam or Wi-Fi device. It will install drivers for the device and related software, if available. Drivers and related software are only installable if certain hardware dependencies are fulfilled.

2.1.4 Updating Software with Zypper

There are three different ways to update software using Zypper: by installing patches, by installing a new version of a package, or by updating the entire distribution. The latter is achieved with zypper dist-upgrade. Upgrading openSUSE Leap is discussed in Book “Start-Up”, Chapter 13 “Upgrading the System and System Changes”.

2.1.4.1 Installing All Needed Patches

To install all officially released patches that apply to your system, run:

tux > sudo zypper patch

All patches available from repositories configured on your computer are checked for their relevance to your installation. If they are relevant (and not classified as optional or feature), they are installed immediately.

If a patch that is about to be installed includes changes that require a system reboot, you are warned beforehand.

The plain zypper patch command does not apply patches from third-party repositories. To also update the third-party repositories, use the --with-update command option as follows:

tux > sudo zypper patch --with-update

To also install optional patches, use:

tux > sudo zypper patch --with-optional

To install all patches relating to a specific Bugzilla issue, use:

tux > sudo zypper patch --bugzilla=NUMBER

To install all patches relating to a specific CVE database entry, use:

tux > sudo zypper patch --cve=NUMBER

For example, to install a security patch with the CVE number CVE-2010-2713, execute:

tux > sudo zypper patch --cve=CVE-2010-2713

To install only patches that affect Zypper and the package management itself, use:

tux > sudo zypper patch --updatestack-only

Bear in mind that other command options that would also update other repositories are dropped if you use the --updatestack-only command option.

2.1.4.2 Listing Patches

To find out whether patches are available, Zypper allows viewing the following information:

- Number of Needed Patches

To list the number of needed patches (patches that apply to your system but are not yet installed), use patch-check:

tux > zypper patch-check
Loading repository data...
Reading installed packages...
5 patches needed (1 security patch)

This command can be combined with the --updatestack-only option to list only the patches that affect Zypper and the package management itself.

- List of Needed Patches

To list all needed patches (patches that apply to your system but are not yet installed), use

list-patches:tux >zypper list-patches Repository | Name | Category | Severity | Interactive | Status | S> -----------+-------------------+----------+----------+-------------+--------+--> Update | openSUSE-2019-828 | security | moderate | --- | needed | S> Found 1 applicable patch: 1 patch needed (1 security patch)Note the new

Sincecolumn. From Zypper 1.14.36, this shows when a patch was installed.- List of All Patches

To list all patches available for openSUSE Leap, regardless of whether they are already installed or apply to your installation, use

zypper patches.
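Because patch-check also reports its result through the exit status, it is convenient for monitoring scripts. The function below is a sketch that assumes the exit codes documented in zypper(8) for patch-check: 0 when no patches are needed, 100 when patches are needed and 101 when security patches are needed. The status texts and the ZYPPER variable are hypothetical choices for illustration.

```shell
#!/bin/sh
# Hypothetical override hook; patch-check does not need root privileges.
ZYPPER=${ZYPPER:-zypper}

# Sketch: translate the exit status of "zypper patch-check" into a
# monitoring-style status line.
# Assumed codes: 0 = nothing needed, 100 = patches, 101 = security patches.
patch_status() {
    $ZYPPER patch-check >/dev/null 2>&1
    case $? in
        0)   echo "OK: no patches needed" ;;
        100) echo "WARNING: patches needed" ;;
        101) echo "CRITICAL: security patches needed" ;;
        *)   echo "UNKNOWN: zypper patch-check failed"; return 3 ;;
    esac
}
```

Running patch_status on a system with one pending security patch would print the CRITICAL line; any unexpected status (for example, a locked package manager) is reported as UNKNOWN instead of being mistaken for "no patches".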

It is also possible to list and install patches relevant to specific issues. To list specific patches, use the zypper list-patches command with the following options:

- By Bugzilla Issues

To list all needed patches that relate to Bugzilla issues, use the option --bugzilla.

To list patches for a specific bug, you can also specify a bug number: --bugzilla=NUMBER. To search for patches relating to multiple Bugzilla issues, add commas between the bug numbers, for example:

tux > zypper list-patches --bugzilla=972197,956917

- By CVE Number

To list all needed patches that relate to an entry in the CVE database (Common Vulnerabilities and Exposures), use the option --cve.

To list patches for a specific CVE database entry, you can also specify a CVE number: --cve=NUMBER. To search for patches relating to multiple CVE database entries, add commas between the CVE numbers, for example:

tux > zypper list-patches --cve=CVE-2016-2315,CVE-2016-2324
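When the CVE numbers come from another tool (for example, a security advisory feed), a small wrapper can build the comma-separated argument for you. The function below is a hypothetical helper, not part of Zypper; it only relies on the --cve=ID1,ID2 form described above. ZYPPER is an assumed override variable used for testing.

```shell
#!/bin/sh
# Hypothetical override hook; list-patches does not need root privileges.
ZYPPER=${ZYPPER:-zypper}

# Sketch: join any number of CVE IDs with commas, as required by the
# --cve=ID1,ID2 form of "zypper list-patches".
list_cve_patches() {
    [ "$#" -gt 0 ] || { echo "usage: list_cve_patches CVE-ID..." >&2; return 2; }
    ids=$(printf '%s,' "$@")   # "A,B," (note the trailing comma)
    ids=${ids%,}               # strip the trailing comma -> "A,B"
    $ZYPPER list-patches --cve="$ids"
}
```

For example, list_cve_patches CVE-2016-2315 CVE-2016-2324 lists the patches for both CVE entries from the example above.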

To list all patches regardless of whether they are needed, use the --all option in addition. For example, to list all patches with a CVE number assigned, use:

tux > zypper list-patches --all --cve
Issue | No.           | Patch             | Category    | Severity | Status
------+---------------+-------------------+-------------+----------+-----------
cve   | CVE-2019-0287 | SUSE-SLE-Module.. | recommended | moderate | needed
cve   | CVE-2019-3566 | SUSE-SLE-SERVER.. | recommended | moderate | not needed
[...]

2.1.4.3 Installing New Package Versions

If a repository contains only new packages, but does not provide patches, zypper patch does not show any effect. To update all installed packages with newer available versions (while maintaining system integrity), use:

tux > sudo zypper update

To update individual packages, specify the package with either the update or install command:

tux > sudo zypper update PACKAGE_NAME
tux > sudo zypper install PACKAGE_NAME

A list of all new installable packages can be obtained with the command:

tux > zypper list-updates

Note that this command only lists packages that match the following criteria:

- has the same vendor as the already installed package,
- is provided by repositories with at least the same priority as the already installed package,
- is installable (all dependencies are satisfied).

A list of all new available packages (regardless of whether they are installable) can be obtained with:

tux > sudo zypper list-updates --all

To find out why a new package cannot be installed, use the zypper install or zypper update command as described above.
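Before running zypper update on an important machine, it can help to preview the transaction first. The sketch below assumes the --dry-run option of zypper update (documented in zypper(8)), which resolves dependencies and shows what would happen without changing the system, and the global --non-interactive option so the preview does not stop at a prompt. The function name and the ZYPPER variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical override hook for the zypper command line.
ZYPPER=${ZYPPER:-"sudo zypper"}

# Sketch: show what "zypper update" would do, then ask before applying it.
# Any arguments (for example package names) are passed through unchanged.
preview_then_update() {
    # Dry run: resolve and display the transaction, change nothing.
    $ZYPPER --non-interactive update --dry-run "$@" || return $?
    printf 'Apply these updates? [y/N] '
    read -r answer
    case $answer in
        [yY]|[yY][eE][sS]) $ZYPPER update "$@" ;;   # real update
        *) echo "Aborted; nothing was changed."; return 1 ;;
    esac
}
```

With no arguments the function previews a full zypper update; with PACKAGE_NAME it previews only that package, mirroring the two forms shown above.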

2.1.4.4 Identifying Orphaned Packages

Whenever you remove a repository from Zypper or upgrade your system, some packages can end up in an “orphaned” state. These orphaned packages no longer belong to any active repository. The following command gives you a list of them:

tux > sudo zypper packages --orphaned

With this list, you can decide if a package is still needed or can be removed safely.
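To feed that list into other tools, you may want only the package names. The awk filter below is a sketch that assumes zypper packages prints a |-separated table whose third column is Name (S | Repository | Name | Version | Arch); check the header on your system before relying on it. The function name is hypothetical.

```shell
#!/bin/sh
# Sketch: print only the package names from "zypper packages --orphaned".
# Assumption: the table columns are S | Repository | Name | Version | Arch.
# Lines without enough |-separated fields (progress messages, the ---+---
# separator) are skipped, as is the "Name" header itself.
orphan_names() {
    awk -F'|' 'NF >= 5 {
        name = $3
        gsub(/^[ \t]+|[ \t]+$/, "", name)   # trim surrounding blanks
        if (name != "Name" && name != "") print name
    }'
}
```

Typical use: sudo zypper packages --orphaned | orphan_names. Review each name before removing anything; being orphaned only means no active repository provides the package.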

2.1.5 Identifying Processes and Services Using Deleted Files

When patching, updating or removing packages, there may be running processes on the system which continue to use files that have been deleted by the update or removal. Use zypper ps to list processes using deleted files. If a process belongs to a known service, the service name is listed, making it easy to restart the service. By default, zypper ps shows a table:

tux > zypper ps
PID | PPID | UID | User  | Command      | Service      | Files
----+------+-----+-------+--------------+--------------+-------------------
814 | 1    | 481 | avahi | avahi-daemon | avahi-daemon | /lib64/ld-2.19.s->
    |      |     |       |              |              | /lib64/libdl-2.1->
    |      |     |       |              |              | /lib64/libpthrea->
    |      |     |       |              |              | /lib64/libc-2.19->
[...]

- PID: ID of the process
- PPID: ID of the parent process
- UID: ID of the user running the process
- User: Login name of the user running the process
- Command: Command used to execute the process
- Service: Service name (only if command is associated with a system service)
- Files: The list of the deleted files

The output format of zypper ps can be controlled as follows:

- zypper ps -s

Create a short table not showing the deleted files.

tux > zypper ps -s
PID  | PPID | UID  | User    | Command      | Service
-----+------+------+---------+--------------+--------------
814  | 1    | 481  | avahi   | avahi-daemon | avahi-daemon
817  | 1    | 0    | root    | irqbalance   | irqbalance
1567 | 1    | 0    | root    | sshd         | sshd
1761 | 1    | 0    | root    | master       | postfix
1764 | 1761 | 51   | postfix | pickup       | postfix
1765 | 1761 | 51   | postfix | qmgr         | postfix
2031 | 2027 | 1000 | tux     | bash         |

- zypper ps -ss

Show only processes associated with a system service.

PID  | PPID | UID  | User    | Command      | Service
-----+------+------+---------+--------------+--------------
814  | 1    | 481  | avahi   | avahi-daemon | avahi-daemon
817  | 1    | 0    | root    | irqbalance   | irqbalance
1567 | 1    | 0    | root    | sshd         | sshd
1761 | 1    | 0    | root    | master       | postfix
1764 | 1761 | 51   | postfix | pickup       | postfix
1765 | 1761 | 51   | postfix | qmgr         | postfix

- zypper ps -sss

Only show system services using deleted files.

avahi-daemon
irqbalance
postfix
sshd

- zypper ps --print "systemctl status %s"

Show the commands to retrieve status information for services which might need a restart.

systemctl status avahi-daemon
systemctl status irqbalance
systemctl status postfix
systemctl status sshd

For more information about service handling, refer to Chapter 10, The systemd Daemon.
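Because zypper ps -sss prints one service name per line, it is easy to script. The function below is a cautious sketch, not a recommended procedure: by default it only prints the systemctl restart commands it would run, and it restarts services only when RESTART=yes is set, because restarting services such as sshd on a remote machine can interrupt your session. RESTART, ZYPPER and SYSTEMCTL are hypothetical variables used for illustration and testing.

```shell
#!/bin/sh
# Hypothetical override hooks for the commands used below.
ZYPPER=${ZYPPER:-zypper}
SYSTEMCTL=${SYSTEMCTL:-"sudo systemctl"}

# Sketch: restart (or, by default, only list) services that still use
# files deleted by an update, as reported by "zypper ps -sss".
restart_stale_services() {
    services=$($ZYPPER ps -sss) || return $?
    for svc in $services; do
        if [ "${RESTART:-no}" = yes ]; then
            $SYSTEMCTL restart "$svc" || echo "failed to restart $svc" >&2
        else
            echo "would run: systemctl restart $svc"
        fi
    done
}
```

Run it once without RESTART to review the list, then again as RESTART=yes restart_stale_services if the list looks safe.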

2.1.6 Managing Repositories with Zypper

All installation or patch commands of Zypper rely on a list of known repositories. To list all repositories known to the system, use the command:

tux > zypper repos

The result will look similar to the following output:

Example 2.1: Zypper—List of Known Repositories

tux > zypper repos
# | Alias                 | Name             | Enabled | GPG Check | Refresh
--+-----------------------+------------------+---------+-----------+--------
1 | Leap-15.1-Main        | Main (OSS)       | Yes     | (r ) Yes  | Yes
2 | Leap-15.1-Update      | Update (OSS)     | Yes     | (r ) Yes  | Yes
3 | Leap-15.1-NOSS        | Main (NON-OSS)   | Yes     | (r ) Yes  | Yes
4 | Leap-15.1-Update-NOSS | Update (NON-OSS) | Yes     | (r ) Yes  | Yes
[...]

When specifying repositories in various commands, an alias, URI or repository number from the zypper repos command output can be used. A repository alias is a short version of the repository name for use in repository handling commands. Note that the repository numbers can change after modifying the list of repositories. The alias will never change by itself.

By default, details such as the URI or the priority of the repository are not displayed. Use the following command to list all details:

tux > zypper repos -d

2.1.6.1 Adding Repositories

To add a repository, run:

tux > sudo zypper addrepo URI ALIAS

URI can be an Internet repository, a network resource, a directory, or a CD or DVD (see http://en.opensuse.org/openSUSE:Libzypp_URIs for details). ALIAS is a short, unique identifier for the repository. You can choose it freely, as long as it is unique. Zypper will issue a warning if you specify an alias that is already in use.
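In provisioning scripts it is often useful to add a repository only if its alias is not configured yet, so the script can be run repeatedly. The sketch below assumes the zypper repos table layout shown in Example 2.1, where the alias is the second |-separated column. The function names and the ZYPPER variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical override hook for the zypper command line.
ZYPPER=${ZYPPER:-"sudo zypper"}

# Sketch: succeed if ALIAS appears in the Alias column of "zypper repos"
# (the second |-separated column, as in Example 2.1).
repo_alias_exists() {
    $ZYPPER repos | awk -F'|' -v want="$1" '
        NF >= 3 { a = $2; gsub(/^[ \t]+|[ \t]+$/, "", a); if (a == want) found = 1 }
        END { exit (found ? 0 : 1) }'
}

# Sketch: add URI under ALIAS only if that alias is not configured yet.
add_repo_once() {
    [ "$#" -eq 2 ] || { echo "usage: add_repo_once URI ALIAS" >&2; return 2; }
    if repo_alias_exists "$2"; then
        echo "Repository '$2' is already configured; skipping."
        return 0
    fi
    $ZYPPER addrepo "$1" "$2"
}
```

For example, add_repo_once URI ALIAS behaves like zypper addrepo URI ALIAS on the first run and does nothing on later runs.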

2.1.6.2 Refreshing Repositories

zypper enables you to fetch changes in packages from configured repositories. To fetch the changes, run:

tux > sudo zypper refresh

Note: Default Behavior of zypper

By default, some commands perform refresh automatically, so you do not need to run the command explicitly.

The refresh command also enables you to view changes in disabled repositories, by using the --plus-content option:

tux > sudo zypper --plus-content refresh

This option fetches changes in repositories, but keeps the disabled repositories in the same state—disabled.

2.1.6.3 Removing Repositories

To remove a repository from the list, use the command zypper removerepo together with the alias or number of the repository you want to delete. For example, to remove the repository Leap-15.1-NOSS from Example 2.1, “Zypper—List of Known Repositories”, use one of the following commands:

tux > sudo zypper removerepo 3
tux > sudo zypper removerepo "Leap-15.1-NOSS"

2.1.6.4 Modifying Repositories

Enable or disable repositories with zypper modifyrepo. You can also alter the repository's properties (such as refreshing behavior, name or priority) with this command. The following command will enable the repository named updates, turn on auto-refresh and set its priority to 20:

tux > sudo zypper modifyrepo -er -p 20 'updates'

Modifying repositories is not limited to a single repository—you can also operate on groups:

- -a: all repositories
- -l: local repositories
- -t: remote repositories
- -m TYPE: repositories of a certain type (where TYPE can be one of the following: http, https, ftp, cd, dvd, dir, file, cifs, smb, nfs, hd, iso)

To rename a repository alias, use the renamerepo command. The following example changes the alias from Mozilla Firefox to firefox:

tux > sudo zypper renamerepo 'Mozilla Firefox' firefox
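The group options can be combined with the enable and disable switches of modifyrepo. For example, on a machine that temporarily has no network access, you might disable all remote repositories and re-enable them later. This sketch assumes the -d (disable) and -e (enable) options of zypper modifyrepo together with the -t group option listed above; the function name and the ZYPPER variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical override hook for the zypper command line.
ZYPPER=${ZYPPER:-"sudo zypper"}

# Sketch: disable or re-enable every remote repository (-t) at once.
set_offline_mode() {
    case $1 in
        on)  $ZYPPER modifyrepo -t -d ;;   # remote repos off, local ones untouched
        off) $ZYPPER modifyrepo -t -e ;;   # remote repos back on
        *)   echo "usage: set_offline_mode on|off" >&2; return 2 ;;
    esac
}
```

Note that set_offline_mode off re-enables every remote repository, including any that were disabled deliberately before.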

2.1.7 Querying Repositories and Packages with Zypper

Zypper offers various methods to query repositories or packages. To get lists of all products, patterns, packages or patches available, use the following commands:

tux > zypper products
tux > zypper patterns
tux > zypper packages
tux > zypper patches

To query all repositories for certain packages, use search. To get information regarding particular packages, use the info command.

2.1.7.1 Searching for Software

The zypper search command works on package names or, optionally, on package summaries and descriptions. Strings wrapped in / are interpreted as regular expressions. By default, the search is not case-sensitive.

- Simple search for a package name containing fire

tux > zypper search "fire"

- Simple search for the exact package MozillaFirefox

tux > zypper search --match-exact "MozillaFirefox"

- Also search in package descriptions and summaries

tux > zypper search -d fire

- Only display packages not already installed

tux > zypper search -u fire

- Display packages containing the string fir not followed by e

tux > zypper se "/fir[^e]/"
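In scripts, zypper search can also serve as a yes/no test. The function below is a sketch that assumes zypper search exits with status 0 when it finds a match and with a non-zero status (104, ZYPPER_EXIT_INF_CAP_NOT_FOUND, according to zypper(8)) when it finds none; --installed-only restricts the search to installed packages. The function name and the ZYPPER variable are hypothetical.

```shell
#!/bin/sh
# Hypothetical override hook; searching does not need root privileges.
ZYPPER=${ZYPPER:-zypper}

# Sketch: succeed only if a package with exactly this name is installed.
# Relies on the exit status of "zypper search"; all output is discarded.
is_installed() {
    $ZYPPER search --installed-only --match-exact "$1" >/dev/null 2>&1
}
```

Usage example: is_installed MozillaFirefox || sudo zypper install MozillaFirefox installs the package only when it is missing.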

2.1.7.2 Searching for Specific Capability

To search for packages which provide a special capability, use the command what-provides. For example, if you want to know which package provides the Perl module SVN::Core, use the following command:

tux > zypper what-provides 'perl(SVN::Core)'

The what-provides PACKAGE_NAME command is similar to rpm -q --whatprovides PACKAGE_NAME, but RPM is only able to query the RPM database (that is, the database of all installed packages). Zypper, on the other hand, tells you about providers of the capability from any repository, not only those that are installed.
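The difference between the two commands suggests a simple lookup order: ask the local RPM database first and fall back to Zypper only if nothing installed provides the capability. The function below is a sketch of that idea; it assumes rpm -q --whatprovides exits non-zero when no installed package matches. The function name and the RPM and ZYPPER variables are hypothetical.

```shell
#!/bin/sh
# Hypothetical override hooks for the commands used below.
RPM=${RPM:-rpm}
ZYPPER=${ZYPPER:-zypper}

# Sketch: check the local RPM database first (installed packages only);
# if nothing installed provides the capability, ask Zypper, which also
# looks at all configured repositories.
find_provider() {
    if $RPM -q --whatprovides "$1"; then
        return 0
    fi
    echo "Not provided by an installed package; querying repositories..." >&2
    $ZYPPER what-provides "$1"
}
```

For example, find_provider 'perl(SVN::Core)' prints the installed provider if there is one and otherwise shows the candidates from all repositories. Quote the capability so the shell does not interpret the parentheses.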

2.1.7.3 Showing Package Information