Virtualization Guide


9 Basic VM Guest Management

Most management tasks, such as starting or stopping a VM Guest, can

either be done using the graphical application Virtual Machine Manager or on the command

line using virsh. Connecting to the graphical console

via VNC is only possible from a graphical user interface.

Note: Managing VM Guests on a Remote VM Host Server

If started on a VM Host Server, the libvirt tools Virtual Machine Manager,

virsh, and virt-viewer can be used to

manage VM Guests on the host. However, it is also possible to manage

VM Guests on a remote VM Host Server. This requires configuring remote

access for libvirt on the host. For instructions, see

Chapter 10, Connecting and Authorizing.

To connect to such a remote host with Virtual Machine Manager, you need to set

up a connection as explained in

Section 10.2.2, “Managing Connections with Virtual Machine Manager”. If connecting to a

remote host using virsh or

virt-viewer, you need to specify a connection URI with

the parameter -c (for example, virsh -c

qemu+tls://saturn.example.com/system or virsh -c

xen+ssh://). The form of connection URI depends on the

connection type and the hypervisor—see

Section 10.2, “Connecting to a VM Host Server” for details.

Examples in this chapter are all listed without a connection URI.

9.1 Listing VM Guests #

The VM Guest listing shows all VM Guests managed by libvirt

on a VM Host Server.

9.1.1 Listing VM Guests with Virtual Machine Manager #

The main window of the Virtual Machine Manager lists all VM Guests for each VM Host Server it is connected to. Each VM Guest entry contains the machine's name, its status (for example, running, paused, or shut off) displayed both as an icon and as text, and a CPU usage bar.

9.1.2 Listing VM Guests with virsh #

Use the command virsh list to get a

list of VM Guests:

- List all running guests

virsh list

- List all running and inactive guests

virsh list --all

For more information and further options, see virsh help

list or man 1 virsh.
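For scripting, the tabular output of virsh list --all can be filtered by state. The following is a minimal sketch and not part of virsh itself: the helper name guests_in_state is hypothetical, and it assumes guest names without spaces and the three-column layout (Id, Name, State) preceded by two header lines.

```shell
# Print the names of guests in a given state.
# Reads the output of `virsh list --all` from standard input.
# Assumption: names contain no spaces; the state may be two words ("shut off").
guests_in_state() {
    awk -v want="$1" 'NR > 2 && NF >= 3 {
        s = $3
        for (i = 4; i <= NF; i++) s = s " " $i
        if (s == want) print $2
    }'
}

# Usage:
#   virsh list --all | guests_in_state "shut off"
```

Parsing the table keeps the sketch independent of the virsh version; it only relies on the column layout described above.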

9.2 Accessing the VM Guest via Console #

VM Guests can be accessed via a VNC connection (graphical console) or, if supported by the guest operating system, via a serial console.

9.2.1 Opening a Graphical Console #

Opening a graphical console to a VM Guest lets you interact with the machine like a physical host via a VNC connection. If accessing the VNC server requires authentication, you are prompted to enter a user name (if applicable) and a password.

When you click into the VNC console, the cursor is “grabbed” and cannot be used outside the console anymore. To release it, press Alt–Ctrl.

Tip: Seamless (Absolute) Cursor Movement

To prevent the console from grabbing the cursor and to enable seamless cursor movement, add a tablet input device to the VM Guest. See Section 13.5, “Enabling Seamless and Synchronized Mouse Pointer Movement” for more information.

Certain key combinations such as Ctrl–Alt–Del are interpreted by the host system and are not passed to the VM Guest. To pass such key combinations to a VM Guest, open the Send Key menu from the VNC window and choose the desired key combination entry. The Send Key menu is only available when using Virtual Machine Manager and virt-viewer. With Virtual Machine Manager, you can alternatively use the “sticky key” feature as explained in Tip: Passing Key Combinations to Virtual Machines.

Note: Supported VNC Viewers

In principle, all VNC viewers can connect to the console of a

VM Guest. However, if you are using SASL authentication and/or TLS/SSL

connection to access the guest, the options are limited. Common VNC

viewers such as tightvnc or

tigervnc support neither SASL authentication nor

TLS/SSL. The only supported alternative to Virtual Machine Manager and

virt-viewer is vinagre.

9.2.1.1 Opening a Graphical Console with Virtual Machine Manager #

In the Virtual Machine Manager, right-click a VM Guest entry.

Choose the entry for opening the guest from the pop-up menu.

9.2.1.2 Opening a Graphical Console with virt-viewer #

virt-viewer is a simple VNC viewer with added

functionality for displaying VM Guest consoles. For example, it can be

started in “wait” mode, where it waits for a VM Guest to

start before it connects. It also supports automatically reconnecting to

a VM Guest that is rebooted.

virt-viewer addresses VM Guests by name, by ID or by

UUID. Use virsh list --all to get

this data.

To connect to a guest that is running or paused, use either the ID, UUID, or name. VM Guests that are shut off do not have an ID—you can only connect to them by UUID or name.

- Connect to the guest with the ID 8

virt-viewer 8

- Connect to the inactive guest named sles12; the connection window opens once the guest starts

virt-viewer --wait sles12

With the --wait option, the connection is kept open even if the VM Guest is not running at the moment. When the guest starts, the viewer is launched.

For more information, see virt-viewer

--help or man 1 virt-viewer.

Note: Password Input on Remote connections with SSH

When using virt-viewer to open a connection to a

remote host via SSH, the SSH password needs to be entered twice. The

first time for authenticating with libvirt, the second time for

authenticating with the VNC server. The second password needs to be

provided on the command line where virt-viewer was started.

9.2.2 Opening a Serial Console #

Accessing the graphical console of a virtual machine requires a graphical

environment on the client accessing the VM Guest.

As an alternative, virtual machines

managed with libvirt can also be accessed from the shell via the serial

console and virsh. To open a serial console to a

VM Guest named “sles12”, run the following command:

virsh console sles12

virsh console takes two optional flags:

--safe ensures exclusive access to the console,

--force disconnects any existing sessions before

connecting. Both features need to be supported by the guest operating

system.

Connecting to a VM Guest via serial console requires that the guest operating system supports serial console access and is properly configured. Refer to the guest operating system manual for more information.

Tip: Enabling Serial Console Access for SUSE Linux Enterprise and openSUSE Guests

Serial console access in SUSE Linux Enterprise and openSUSE is disabled by default. To enable it, proceed as follows:

- SLES 12 / openSUSE

Launch the YaST Boot Loader module and switch to the tab with the kernel parameters. Add console=ttyS0 to the field containing the kernel command line parameters.

- SLES 11

Launch the YaST Boot Loader module and select the boot entry for which to activate serial console access. Edit the entry and add console=ttyS0 to the field containing the kernel command line parameters. Additionally, edit /etc/inittab and uncomment the line with the following content:

#S0:12345:respawn:/sbin/agetty -L 9600 ttyS0 vt102

9.3 Changing a VM Guest's State: Start, Stop, Pause #

Starting, stopping or pausing a VM Guest can be done with either

Virtual Machine Manager or virsh. You can also configure a

VM Guest to be automatically started when booting the VM Host Server.

When shutting down a VM Guest, you may either shut it down gracefully, or force the shutdown. The latter is equivalent to pulling the power plug on a physical host and is only recommended if there are no alternatives. Forcing a shutdown may cause file system corruption and loss of data on the VM Guest.

Tip: Graceful Shutdown

To be able to perform a graceful shutdown, the VM Guest must be configured to support ACPI. If you have created the guest with the Virtual Machine Manager, ACPI should be available in the VM Guest.

Depending on the guest operating system, availability of ACPI may not be sufficient to perform a graceful shutdown. It is strongly recommended to test shutting down and rebooting a guest before using it in production. openSUSE or SUSE Linux Enterprise Desktop, for example, can require PolKit authorization for shutdown and reboot. Make sure this policy is turned off on all VM Guests.

If ACPI was not enabled during a Windows XP/Windows Server 2003 guest installation, turning it on in the VM Guest configuration only is not sufficient. Refer to the Microsoft documentation for more information.

Regardless of the VM Guest's configuration, a graceful shutdown is always possible from within the guest operating system.

9.3.1 Changing a VM Guest's State with Virtual Machine Manager #

Changing a VM Guest's state can be done either from Virtual Machine Manager's main window, or from a VNC window.

Procedure 9.1: State Change from the Virtual Machine Manager Window #

Right-click a VM Guest entry.

Choose the entry to run or pause the guest, or one of the shutdown options, from the pop-up menu.

Procedure 9.2: State change from the VNC Window #

Open a VNC Window as described in Section 9.2.1.1, “Opening a Graphical Console with Virtual Machine Manager”.

Choose the entry to run or pause the guest, or one of the shutdown options, either from the toolbar or from the menu.

9.3.1.1 Automatically Starting a VM Guest #

You can automatically start a guest when the VM Host Server boots. This feature is not enabled by default and needs to be enabled for each VM Guest individually. There is no way to activate it globally.

Double-click the VM Guest entry in Virtual Machine Manager to open its console.

Open the VM Guest configuration (details) view from the menu.

In the boot options, activate the setting that starts the virtual machine when the host boots.

Apply the new configuration to save it.

9.3.2 Changing a VM Guest's State with virsh #

In the following examples, the state of a VM Guest named “sles12” is changed.

- Start

virsh start sles12

- Pause

virsh suspend sles12

- Resume (a Suspended VM Guest)

virsh resume sles12

- Reboot

virsh reboot sles12

- Graceful shutdown

virsh shutdown sles12

- Force shutdown

virsh destroy sles12

- Turn on automatic start

virsh autostart sles12

- Turn off automatic start

virsh autostart --disable sles12
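The graceful and forced shutdown commands above can be combined so that a guest is only forced off when it does not react in time. This is a minimal sketch under stated assumptions: the function name shutdown_guest, the VIRSH override variable, and the default timeout of 60 seconds are illustrative and not part of libvirt. It relies on virsh domstate printing "shut off" for a stopped guest.

```shell
# Assumption: VIRSH can be overridden, for example for dry runs.
VIRSH="${VIRSH:-virsh}"

# Try a graceful shutdown first; force the shutdown only if the guest
# is still not shut off after the timeout (in seconds, default 60).
shutdown_guest() {
    guest="$1"
    timeout="${2:-60}"
    $VIRSH shutdown "$guest" || return 1
    waited=0
    while [ "$waited" -lt "$timeout" ]; do
        state=$($VIRSH domstate "$guest")
        if [ "$state" = "shut off" ]; then
            return 0
        fi
        sleep 1
        waited=$((waited + 1))
    done
    echo "Graceful shutdown timed out; forcing shutdown of $guest" >&2
    $VIRSH destroy "$guest"
}

# Usage:
#   shutdown_guest sles12 120
```

Because a forced shutdown can corrupt the guest's file system, choose the timeout generously for guests that take long to stop their services.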

9.4 Saving and Restoring the State of a VM Guest #

Saving a VM Guest preserves the exact state of the guest’s memory. The operation is similar to hibernating a computer. A saved VM Guest can be quickly restored to its previously saved running condition.

When saved, the VM Guest is paused, its current memory state is saved to disk, and then the guest is stopped. The operation does not make a copy of any portion of the VM Guest’s virtual disk. The amount of time taken to save the virtual machine depends on the amount of memory allocated. When saved, a VM Guest’s memory is returned to the pool of memory available on the VM Host Server.

The restore operation loads a VM Guest’s previously saved memory state file and starts it. The guest is not booted but instead resumed at the point where it was previously saved. The operation is similar to coming out of hibernation.

The VM Guest is saved to a state file. Make sure there is enough space on the partition you are going to save to. For an estimation of the file size in megabytes to be expected, issue the following command on the guest:

free -mh | awk '/^Mem:/ {print $3}'

Warning: Always Restore Saved Guests

After using the save operation, do not boot or start the saved VM Guest. Doing so would cause the machine's virtual disk and the saved memory state to get out of synchronization. This can result in critical errors when restoring the guest.

To be able to work with a saved VM Guest again, use the restore operation.

If you used virsh to save a VM Guest, you cannot

restore it using Virtual Machine Manager. In this case, make sure to restore using

virsh.

Important: Only for VM Guests with Disk Types raw, qcow2, qed

Saving and restoring VM Guests is only possible if the

VM Guest is using a virtual disk of the type

raw (.img),

qcow2, or qed.

9.4.1 Saving/Restoring with Virtual Machine Manager #

Procedure 9.3: Saving a VM Guest #

Open a VNC connection window to a VM Guest. Make sure the guest is running.

Choose › › .

Procedure 9.4: Restoring a VM Guest #

Open a VNC connection window to a VM Guest. Make sure the guest is not running.

Choose › .

If the VM Guest was previously saved using Virtual Machine Manager, you will not be offered an option to start the guest, only to restore it. However, note the caveats on machines saved with virsh outlined in Warning: Always Restore Saved Guests.

9.4.2 Saving and Restoring with virsh #

Save a running VM Guest with the command virsh

save and specify the file which it is saved to.

- Save the guest named opensuse13

virsh save opensuse13 /virtual/saves/opensuse13.vmsave

- Save the guest with the ID 37

virsh save 37 /virtual/saves/opensuse13.vmsave

To restore a VM Guest, use virsh restore:

virsh restore /virtual/saves/opensuse13.vmsave
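Before saving, it can help to compare the guest's memory with the free space in the target directory. The following sketch is only a rough estimate under stated assumptions: the helper names are hypothetical, the "Used memory" line of virsh dominfo is taken as an approximation of the state file size, and df -Pk is used for a portable free-space value.

```shell
# Extract the "Used memory" value in KiB from `virsh dominfo GUEST` output on stdin.
used_memory_kib() {
    awk -F: '/^Used memory:/ { gsub(/[^0-9]/, "", $2); print $2 }'
}

# Print the available space in KiB on the file system that holds directory $1.
avail_kib() {
    df -Pk "$1" | awk 'NR == 2 { print $4 }'
}

# Succeed if directory $2 has room for a state file of roughly $1 KiB.
enough_space() {
    [ "$(avail_kib "$2")" -gt "$1" ]
}

# Usage (guest name and path taken from the examples in this section):
#   mem=$(virsh dominfo opensuse13 | used_memory_kib)
#   enough_space "$mem" /virtual/saves &&
#       virsh save opensuse13 /virtual/saves/opensuse13.vmsave
```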

9.5 Creating and Managing Snapshots #

VM Guest snapshots are snapshots of the complete virtual machine including the state of CPU, RAM, and the content of all writable disks. To use virtual machine snapshots, you must have at least one non-removable and writable block device using the qcow2 disk image format.

Note

Snapshots are supported on KVM VM Host Servers only.

Snapshots let you restore the state of the machine at a particular point in time. This is for example useful to undo a faulty configuration or the installation of a lot of packages. It is also helpful for testing purposes, as it allows you to go back to a defined state at any time.

Snapshots can be taken either from running guests or from a guest currently not running. Taking a snapshot from a guest that is shut down ensures data integrity. If you create a snapshot from a running system, be aware that the snapshot only captures the state of the disk(s), not the state of the memory. Therefore you need to ensure that:

All running programs have written their data to the disk. If you are unsure, terminate the application and/or stop the respective service.

Buffers have been written to disk. This can be achieved by running the command sync on the VM Guest.

Starting a snapshot reverts the machine back to the state it was in when the snapshot was taken. Any changes written to the disk after that point in time will be lost when starting the snapshot.

Starting a snapshot will restore the machine to the state (shut off or running) it was in when the snapshot was taken. After starting a snapshot that was created while the VM Guest was shut off, you will need to boot it.

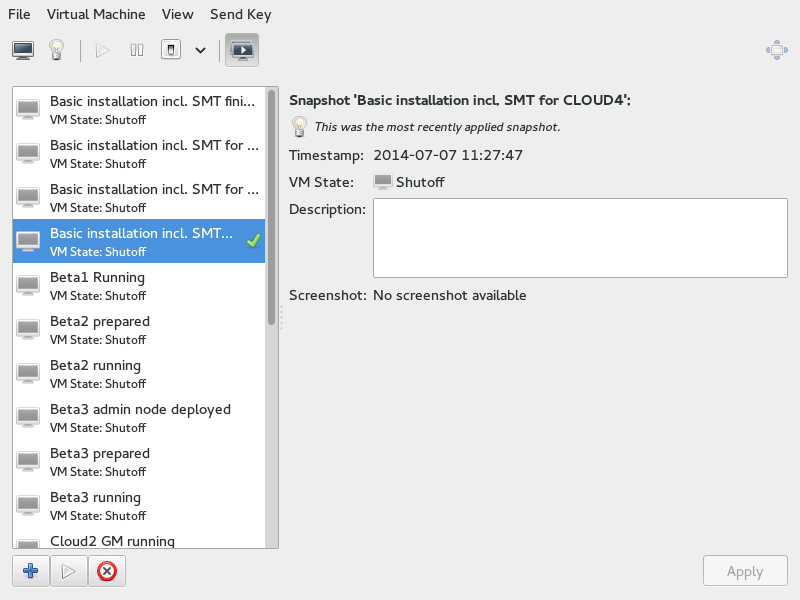

9.5.1 Creating and Managing Snapshots with Virtual Machine Manager #

To open the snapshot management view in Virtual Machine Manager, open the VNC window as described in Section 9.2.1.1, “Opening a Graphical Console with Virtual Machine Manager”. Then switch to the snapshot view either from the menu or from the toolbar.

The list of existing snapshots for the chosen VM Guest is displayed in the left-hand part of the window. The snapshot that was last started is marked with a green tick. The right-hand part of the window shows details of the snapshot currently marked in the list. These details include the snapshot's title and time stamp, the state of the VM Guest at the time the snapshot was taken, and a description. Snapshots of running guests also include a screenshot. The description can be changed directly from this view. Other snapshot data cannot be changed.

9.5.1.1 Creating a Snapshot #

To take a new snapshot of a VM Guest, proceed as follows:

Shut down the VM Guest if you want to create a snapshot from a guest that is not running.

Click the button for adding a snapshot in the bottom left corner of the VNC window.

The snapshot creation window opens.

Provide a name and, optionally, a description. The name cannot be changed after the snapshot has been taken. To identify the snapshot easily later, use a descriptive (“speaking”) name.

Confirm to create the snapshot.

9.5.1.2 Deleting a Snapshot #

To delete a snapshot of a VM Guest, proceed as follows:

Select the snapshot and click the button for deleting it in the bottom left corner of the VNC window.

Confirm the deletion.

9.5.1.3 Starting a Snapshot #

To start a snapshot, proceed as follows:

Select the snapshot and click the button for starting it in the bottom left corner of the VNC window.

Confirm the start.

9.5.2 Creating and Managing Snapshots with virsh #

To list all existing snapshots for a domain (admin_server in the following), run the snapshot-list command:

tux > virsh snapshot-list admin_server
Name                                        Creation Time               State
-----------------------------------------------------------------------------
Basic installation incl. SMT finished       2013-09-18 09:45:29 +0200   shutoff
Basic installation incl. SMT for CLOUD3     2013-12-11 15:11:05 +0100   shutoff
Basic installation incl. SMT for CLOUD3-HA  2014-03-24 13:44:03 +0100   shutoff
Basic installation incl. SMT for CLOUD4     2014-07-07 11:27:47 +0200   shutoff
Beta1 Running                               2013-07-12 12:27:28 +0200   shutoff
Beta2 prepared                              2013-07-12 17:00:44 +0200   shutoff
Beta2 running                               2013-07-29 12:14:11 +0200   shutoff
Beta3 admin node deployed                   2013-07-30 16:50:40 +0200   shutoff
Beta3 prepared                              2013-07-30 17:07:35 +0200   shutoff
Beta3 running                               2013-09-02 16:13:25 +0200   shutoff
Cloud2 GM running                           2013-12-10 15:44:58 +0100   shutoff
CLOUD3 RC prepared                          2013-12-20 15:30:19 +0100   shutoff
CLOUD3-HA Build 680 prepared                2014-03-24 14:20:37 +0100   shutoff
CLOUD3-HA Build 796 installed (zypper up)   2014-04-14 16:45:18 +0200   shutoff
GMC2 post Cloud install                     2013-09-18 10:53:03 +0200   shutoff
GMC2 pre Cloud install                      2013-09-18 10:31:17 +0200   shutoff
GMC2 prepared (incl. Add-On Installation)   2013-09-17 16:22:37 +0200   shutoff
GMC_pre prepared                            2013-09-03 13:30:38 +0200   shutoff
OS + SMT + eth[01]                          2013-06-14 16:17:24 +0200   shutoff
OS + SMT + Mirror + eth[01]                 2013-07-30 15:50:16 +0200   shutoff

The snapshot that was last started is shown with the

snapshot-current command:

tux > virsh snapshot-current --name admin_server

Basic installation incl. SMT for CLOUD4

Details about a particular snapshot can be obtained by running the

snapshot-info command:

tux > virsh snapshot-info admin_server "Basic installation incl. SMT for CLOUD4"

Name: Basic installation incl. SMT for CLOUD4

Domain: admin_server

Current: yes

State: shutoff

Location: internal

Parent: Basic installation incl. SMT for CLOUD3-HA

Children: 0

Descendants: 0

Metadata: yes

9.5.2.1 Creating a Snapshot #

To take a new snapshot of a VM Guest currently not running, use the

snapshot-create-as command as follows:

virsh snapshot-create-as --domain admin_server --name "Snapshot 1" \
    --description "First snapshot"

--domain

Domain name. Mandatory.

--name

Name of the snapshot. It is recommended to use a descriptive (“speaking”) name, since that makes it easier to identify the snapshot. Mandatory.

--description

Description for the snapshot. Optional.

To take a snapshot of a running VM Guest, you need to specify the

--live parameter:

virsh snapshot-create-as --domain admin_server --name "Snapshot 2" \
    --description "First live snapshot" --live

Refer to the SNAPSHOT COMMANDS section in

man 1 virsh for more details.

9.5.2.2 Deleting a Snapshot #

To delete a snapshot of a VM Guest, use the

snapshot-delete command:

virsh snapshot-delete --domain admin_server --snapshotname "Snapshot 2"

9.5.2.3 Starting a Snapshot #

To start a snapshot, use the snapshot-revert

command:

virsh snapshot-revert --domain admin_server --snapshotname "Snapshot 1"

To start the current snapshot (the one the VM Guest was started from), it is sufficient to use --current rather than specifying the snapshot name:

virsh snapshot-revert --domain admin_server --current
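The create and revert commands can be combined into a simple safety net around a risky change. The sketch below is illustrative only: the function name with_snapshot and the VIRSH override variable are assumptions, and the command passed to the function runs on the machine where the script is started, not inside the guest.

```shell
# Assumption: VIRSH can be overridden, for example for dry runs.
VIRSH="${VIRSH:-virsh}"

# Take a snapshot, run the given command, and revert to the snapshot
# if the command fails. Returns 0 only if the command succeeded.
with_snapshot() {
    domain="$1"
    name="$2"
    shift 2
    $VIRSH snapshot-create-as --domain "$domain" --name "$name" || return 1
    if "$@"; then
        return 0
    fi
    $VIRSH snapshot-revert --domain "$domain" --snapshotname "$name"
    return 1
}

# Usage (the script name is hypothetical):
#   with_snapshot admin_server "Before update" ./run-update.sh
```

Remember that reverting discards everything written to the disk after the snapshot was taken.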

9.6 Deleting a VM Guest #

By default, deleting a VM Guest using virsh removes only its XML configuration. Since attached storage is not deleted by default, you can reuse it with another VM Guest. With Virtual Machine Manager, you can also delete a guest's storage files, which completely erases the guest.

9.6.1 Deleting a VM Guest with Virtual Machine Manager #

In the Virtual Machine Manager, right-click a VM Guest entry.

From the context menu, choose the entry for deleting the guest.

A confirmation window opens. Confirming the deletion permanently erases the VM Guest. The deletion is not recoverable.

You can also permanently delete the guest's virtual disk by activating the option to delete the associated storage files. This deletion is not recoverable either.

9.6.2 Deleting a VM Guest with virsh #

To delete a VM Guest, it needs to be shut down first. It is not possible to delete a running guest. For information on shutting down, see Section 9.3, “Changing a VM Guest's State: Start, Stop, Pause”.

To delete a VM Guest with virsh, run

virsh undefine

VM_NAME.

virsh undefine sles12

There is no option to automatically delete the attached storage files. If they are managed by libvirt, delete them as described in Section 11.2.4, “Deleting Volumes from a Storage Pool”.

9.7 Migrating VM Guests #

One of the major advantages of virtualization is that VM Guests are portable. When a VM Host Server needs to go down for maintenance, or when the host gets overloaded, the guests can easily be moved to another VM Host Server. KVM and Xen even support “live” migrations during which the VM Guest is constantly available.

9.7.1 Migration Requirements #

To successfully migrate a VM Guest to another VM Host Server, the following requirements need to be met:

It is recommended that the source and destination systems have the same architecture. However, it is possible to migrate between hosts with AMD* and Intel* architectures.

Storage devices must be accessible from both machines (for example, via NFS or iSCSI) and must be configured as a storage pool on both machines. For more information, see Chapter 11, Managing Storage.

This is also true for CD-ROM or floppy images that are connected during the move. However, you can disconnect them prior to the move as described in Section 13.8, “Ejecting and Changing Floppy or CD/DVD-ROM Media with Virtual Machine Manager”.

libvirtd needs to run on both VM Host Servers and you must be able to open a remote libvirt connection between the target and the source host (or vice versa). Refer to Section 10.3, “Configuring Remote Connections” for details.

If a firewall is running on the target host, ports need to be opened to allow the migration. If you do not specify a port during the migration process, libvirt chooses one from the range 49152:49215. Make sure that either this range (recommended) or a dedicated port of your choice is opened in the firewall on the target host.

Source and target machine should be in the same subnet on the network, otherwise networking will not work after the migration.

A running or paused VM Guest with the same name must not exist on the target host. If a shut-down machine with the same name exists, its configuration will be overwritten.

All CPU models except the host CPU model are supported when migrating VM Guests.

SATA disk device type is not migratable.

File system pass-through feature is incompatible with migration.

The VM Host Server and VM Guest need to have proper timekeeping installed. See Chapter 15, VM Guest Clock Settings.

Section 28.3.1.2, “virtio-blk-data-plane” is not supported for migration.

No physical devices can be passed from host to guest. Live migration is currently not supported when using devices with PCI pass-through or SR-IOV. In case live migration needs to be supported, you need to use software virtualization (paravirtualization or full virtualization).

Cache mode setting is an important setting for migration. See: Section 14.5, “Effect of Cache Modes on Live Migration”.

The image directory should be located in the same path on both hosts.
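When opening firewall ports for migration, a quick check can confirm whether a chosen port falls inside the default range libvirt uses (49152:49215, as stated in the requirements above). This is a small sketch; the variable and function names are hypothetical.

```shell
MIGRATION_PORT_MIN=49152
MIGRATION_PORT_MAX=49215

# Succeed if port $1 lies inside the default migration port range.
in_default_migration_range() {
    [ "$1" -ge "$MIGRATION_PORT_MIN" ] && [ "$1" -le "$MIGRATION_PORT_MAX" ]
}

# Print the number of ports in the default range.
echo "ports in range: $((MIGRATION_PORT_MAX - MIGRATION_PORT_MIN + 1))"

# Usage:
#   in_default_migration_range 49160 && echo "covered by the default range"
```

A dedicated port outside this range needs its own firewall rule on the target host.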

9.7.2 Migrating with Virtual Machine Manager #

When using the Virtual Machine Manager to migrate VM Guests, it does not matter on which machine it is started. You can start Virtual Machine Manager on the source or the target host or even on a third host. In the latter case you need to be able to open remote connections to both the target and the source host.

Start Virtual Machine Manager and establish a connection to the target or the source host. If the Virtual Machine Manager was started neither on the target nor the source host, connections to both hosts need to be opened.

Right-click the VM Guest that you want to migrate and choose the entry for migrating it. Make sure the guest is running or paused. It is not possible to migrate guests that are shut down.

Tip: Increasing the Speed of the Migration

To increase the speed of the migration somewhat, pause the VM Guest. This is the equivalent of the former so-called “offline migration” option of Virtual Machine Manager.

Choose a new host for the VM Guest. If the desired target host does not show up, make sure that you are connected to the host.

To change the default options for connecting to the remote host, set the connection mode and the target host's address (IP address or host name) and port in the connection settings. If you specify a port, you must also specify an address.

In the advanced options, choose whether the move should be permanent (default) or temporary.

Additionally, there is an option that allows migrating without disabling the cache of the VM Host Server. This can speed up the migration but only works when the current configuration allows for a consistent view of the VM Guest storage without using cache="none"/O_DIRECT.

Note: Bandwidth Option

In recent versions of Virtual Machine Manager, the option of setting a bandwidth for the migration has been removed. To set a specific bandwidth, use virsh instead.

To perform the migration, confirm the dialog.

When the migration is complete, the window closes and the VM Guest is now listed on the new host in the Virtual Machine Manager window. The original VM Guest will still be available on the source host (in shut down state).

9.7.3 Migrating with virsh #

To migrate a VM Guest with virsh

migrate, you need to have direct or remote shell access

to the VM Host Server, because the command needs to be run on the host. The

migration command looks like this:

virsh migrate [OPTIONS] VM_ID_or_NAME CONNECTION_URI [--migrateuri tcp://REMOTE_HOST:PORT]

The most important options are listed below. See virsh help

migrate for a full list.

--live

Does a live migration. If not specified, the guest will be paused during the migration (“offline migration”).

--suspend

Does an offline migration and does not restart the VM Guest on the target host.

--persistent

By default a migrated VM Guest will be migrated temporarily, so its configuration is automatically deleted on the target host if it is shut down. Use this switch to make the migration persistent.

--undefinesource

When specified, the VM Guest definition on the source host will be deleted after a successful migration (however, virtual disks attached to this guest will not be deleted).

The following examples use mercury.example.com as the source system and

jupiter.example.com as the target system; the VM Guest's name is

opensuse131 with ID 37.

- Offline migration with default parameters

virsh migrate 37 qemu+ssh://tux@jupiter.example.com/system

- Transient live migration with default parameters

virsh migrate --live opensuse131 qemu+ssh://tux@jupiter.example.com/system

- Persistent live migration; delete VM definition on source

virsh migrate --live --persistent --undefinesource 37 \
    qemu+tls://tux@jupiter.example.com/system

- Offline migration using port 49152

virsh migrate opensuse131 qemu+ssh://tux@jupiter.example.com/system \
    --migrateuri tcp://jupiter.example.com:49152

Note: Transient Compared to Persistent Migrations

By default virsh migrate creates a temporary

(transient) copy of the VM Guest on the target host. A shut down

version of the original guest description remains on the source host. A

transient copy will be deleted from the server after it is shut down.

To create a permanent copy of a guest on the target host, use

the switch --persistent. A shut down version of the

original guest description remains on the source host, too. Use the

option --undefinesource together with

--persistent for a “real” move where a

permanent copy is created on the target host and the version on the

source host is deleted.

It is not recommended to use --undefinesource without

the --persistent option, since this will result in the

loss of both VM Guest definitions when the guest is shut down on

the target host.
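The rule that --undefinesource should only be used together with --persistent can be enforced in a small wrapper. The following is a sketch, not a libvirt feature: the function name build_migrate_cmd is hypothetical, and the function only prints the resulting command line so that it can be reviewed before it is run.

```shell
# Print a virsh migrate command line for guest $1 and connection URI $2,
# followed by any options. Refuse --undefinesource without --persistent,
# because that combination loses both guest definitions after shutdown.
build_migrate_cmd() {
    guest="$1"
    uri="$2"
    shift 2
    opts="$*"
    persistent=no
    undefine=no
    for opt in "$@"; do
        case "$opt" in
            --persistent) persistent=yes ;;
            --undefinesource) undefine=yes ;;
        esac
    done
    if [ "$undefine" = yes ] && [ "$persistent" = no ]; then
        echo "refusing: --undefinesource requires --persistent" >&2
        return 1
    fi
    echo "virsh migrate${opts:+ $opts} $guest $uri"
}

# Usage:
#   build_migrate_cmd 37 qemu+tls://tux@jupiter.example.com/system \
#       --live --persistent --undefinesource
```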

9.7.4 Step-by-Step Example #

9.7.4.1 Exporting the Storage #

First you need to export the storage, to share the Guest image between

hosts. This can be done by an NFS server. In the following example we

want to share the /volume1/VM directory for all

machines that are on the network 10.0.1.0/24. We will use a SUSE Linux Enterprise

NFS server. As root user, edit the /etc/exports

file and add:

/volume1/VM 10.0.1.0/24(rw,sync,no_root_squash)

You need to restart the NFS server:

root # systemctl restart nfsserver
root # exportfs
/volume1/VM 10.0.1.0/24
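In /etc/exports, whitespace between a client specification and its option list changes the meaning of the entry: the options then apply to all clients instead of the named network. The following sketch flags such lines; the function name exports_with_stray_space is hypothetical, and the check is a simple pattern match rather than a full parser.

```shell
# Print (with line numbers) entries that have whitespace directly before
# an option list. Checks the file given as $1, /etc/exports by default.
exports_with_stray_space() {
    grep -nE '[^[:space:]][[:space:]]+\(' "${1:-/etc/exports}"
}

# Usage:
#   exports_with_stray_space && echo "check the entries listed above"
```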

9.7.4.2 Defining the Pool on the Target Hosts #

On each host where you want to migrate the VM Guest, the pool must

be defined to be able to access the volume (that contains the Guest

image). Our NFS server IP address is 10.0.1.99, its share is the

/volume1/VM directory, and we want to get it

mounted in the /var/lib/libvirt/images/VM

directory. The pool name will be VM. To define

this pool, create a VM.xml file with the following

content:

<pool type='netfs'>
  <name>VM</name>
  <source>
    <host name='10.0.1.99'/>
    <dir path='/volume1/VM'/>
    <format type='auto'/>
  </source>
  <target>
    <path>/var/lib/libvirt/images/VM</path>
    <permissions>
      <mode>0755</mode>
      <owner>-1</owner>
      <group>-1</group>
    </permissions>
  </target>
</pool>

Then load it into libvirt using the pool-define

command:

root # virsh pool-define VM.xml

An alternative way to define this pool is to use the

virsh command:

root # virsh pool-define-as VM --type netfs --source-host 10.0.1.99 \

--source-path /volume1/VM --target /var/lib/libvirt/images/VM

Pool VM created

The following commands assume that you are in the interactive shell of

virsh which can also be reached by using the command

virsh without any arguments.

Then the pool can be set to start automatically at host boot (autostart

option):

virsh # pool-autostart VM
Pool VM marked as autostarted

If you want to disable the autostart:

virsh # pool-autostart VM --disable
Pool VM unmarked as autostarted

Check if the pool is present:

virsh # pool-list --all
 Name                 State      Autostart
-------------------------------------------
 default              active     yes
 VM                   active     yes

virsh # pool-info VM
Name:           VM
UUID:           42efe1b3-7eaa-4e24-a06a-ba7c9ee29741
State:          running
Persistent:     yes
Autostart:      yes
Capacity:       2,68 TiB
Allocation:     2,38 TiB
Available:      306,05 GiB

Warning: Pool Needs to Exist on All Target Hosts

Remember: this pool must be defined on each host where you want to be able to migrate your VM Guest.
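To avoid forgetting a host, the pool commands can be generated for every VM Host Server in one pass. This is a sketch under stated assumptions: the function name pool_commands_for_hosts is hypothetical, the hosts are reached via qemu+ssh, and pool-build is included to create the mount point directory before the pool is started. The function only prints the commands so they can be reviewed first.

```shell
# Print the virsh commands that define, build, start, and autostart
# the pool $2 from the XML file $1 on each of the remaining host arguments.
pool_commands_for_hosts() {
    xml="$1"
    pool="$2"
    shift 2
    for host in "$@"; do
        uri="qemu+ssh://$host/system"
        echo "virsh -c $uri pool-define $xml"
        echo "virsh -c $uri pool-build $pool"
        echo "virsh -c $uri pool-start $pool"
        echo "virsh -c $uri pool-autostart $pool"
    done
}

# Example with the host names used elsewhere in this chapter:
pool_commands_for_hosts VM.xml VM mercury.example.com jupiter.example.com
```

Once the output looks right, it can be piped to sh to execute the commands.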

9.7.4.3 Creating the Volume #

The pool has been defined—now we need a volume which will contain the disk image:

virsh # vol-create-as VM sled12.qcow2 8G --format qcow2
Vol sled12.qcow2 created

The volume name shown will be used later to install the guest with virt-install.

9.7.4.4 Creating the VM Guest #

Create a VM Guest (SUSE Linux Enterprise Desktop 12 in this example) with the virt-install command. The VM pool is specified with the --disk option. Using cache=none is recommended if you do not want to use the --unsafe option while doing the migration.

root # virt-install --connect qemu:///system --virt-type kvm --name \

sled12 --memory 1024 --disk vol=VM/sled12.qcow2,cache=none --cdrom \

/mnt/install/ISO/SLE-12-Desktop-DVD-x86_64-Build0327-Media1.iso --graphics \

vnc --os-variant sled12

Starting install...

Creating domain...

9.7.4.5 Migrate the VM Guest #

Everything is ready to do the migration now. Run the

migrate command on the VM Host Server that is currently

hosting the VM Guest, and choose the destination.

virsh # migrate --live sled12 --verbose qemu+ssh://IP_OR_HOSTNAME/system
Password:
Migration: [ 12 %]

9.8 Monitoring #

9.8.1 Monitoring with Virtual Machine Manager #

After starting Virtual Machine Manager and connecting to the VM Host Server, a CPU usage graph of all the running guests is displayed.

It is also possible to get information about disk and network usage with this tool, however, you must first activate this in :

Run virt-manager.

Select › .

Change the tab from to .

Activate the check boxes for the kind of activity you want to see: , , and .

If desired, also change the update interval using .

Close the dialog.

Activate the graphs that should be displayed under › .

Afterward, the disk and network statistics are also displayed in the main window of the Virtual Machine Manager.

More precise data is available from the VNC window. Open a VNC window as described in Section 9.2.1, “Opening a Graphical Console”. Choose from the toolbar or the menu. The statistics are displayed from the entry of the left-hand tree menu.

9.8.2 Monitoring with virt-top #

virt-top is a command line tool similar to the

well-known process monitoring tool

top. virt-top uses libvirt and

therefore is capable of showing statistics for VM Guests running on

different hypervisors. It is recommended to use

virt-top instead of hypervisor-specific tools like

xentop.

By default virt-top shows statistics for all running

VM Guests. Among the data that is displayed is the percentage of memory

used (%MEM) and CPU (%CPU) and the

uptime of the guest (TIME). The data is updated

regularly (every three seconds by default). The following shows the output

on a VM Host Server with seven VM Guests, four of them inactive:

virt-top 13:40:19 - x86_64 8/8CPU 1283MHz 16067MB 7.6% 0.5%

7 domains, 3 active, 3 running, 0 sleeping, 0 paused, 4 inactive D:0 O:0 X:0

CPU: 6.1% Mem: 3072 MB (3072 MB by guests)

ID S RDRQ WRRQ RXBY TXBY %CPU %MEM TIME NAME

7 R 123 1 18K 196 5.8 6.0 0:24.35 sled12_sp1

6 R 1 0 18K 0 0.2 6.0 0:42.51 sles12_sp1

5 R 0 0 18K 0 0.1 6.0 85:45.67 opensuse_leap

- (Ubuntu_1410)

- (debian_780)

- (fedora_21)

- (sles11sp3)

By default the output is sorted by ID. Use the following key combinations to change the sort field:

| Shift–P: CPU usage |

| Shift–M: Total memory allocated by the guest |

| Shift–T: Time |

| Shift–I: ID |

To use any other field for sorting, press Shift–F and select a field from the list. To toggle the sort order, use Shift–R.

virt-top also supports different views on the

VM Guests data, which can be changed on-the-fly by pressing the following

keys:

| 0: default view |

| 1: show physical CPUs |

| 2: show network interfaces |

| 3: show virtual disks |

virt-top supports more hot keys to change the view of

the data and many command line switches that affect the behavior of

the program. For more information, see man 1 virt-top.
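Output in this layout can also be processed by a script. The sketch below assumes that the domain lines have the same ten columns as the display shown above (ID, S, RDRQ, WRRQ, RXBY, TXBY, %CPU, %MEM, TIME, NAME). busiest_guest is a hypothetical helper, and whether batch mode output on your system matches this layout should be verified first.

```shell
#!/bin/sh
# Hypothetical helper: reads virt-top style output on stdin and prints the
# name of the running guest with the highest %CPU value (column 7).
# Lines for inactive guests and header lines are skipped because they do
# not have ten fields with a numeric ID in the first column.
busiest_guest() {
    awk 'NF == 10 && $1 ~ /^[0-9]+$/ && ($7 + 0 > max || name == "") {
             max = $7 + 0; name = $10
         }
         END { if (name != "") print name }'
}

# Possible use with batch mode (two iterations, so that CPU usage can be
# computed between samples); check the layout of the output first:
# virt-top -b -n 2 | busiest_guest
```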

9.8.3 Monitoring with kvm_stat #

kvm_stat can be used to trace KVM performance

events. It monitors /sys/kernel/debug/kvm, so it

needs the debugfs to be mounted. On openSUSE Leap it should be

mounted by default. In case it is not mounted, use the following

command:

mount -t debugfs none /sys/kernel/debug
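To avoid mounting debugfs a second time, the mount table can be checked first. This is a minimal sketch: debugfs_mounted is a hypothetical helper that reads a mount table in /proc/mounts format, and the commented mount call requires root privileges.

```shell
#!/bin/sh
# Hypothetical helper: succeeds if a debugfs file system is mounted on
# /sys/kernel/debug according to a mount table in /proc/mounts format.
# The table to read can be passed as $1; the default is /proc/mounts.
debugfs_mounted() {
    awk '$2 == "/sys/kernel/debug" && $3 == "debugfs" { found = 1 }
         END { exit found ? 0 : 1 }' "${1:-/proc/mounts}"
}

# Mount debugfs only when it is missing (requires root):
# debugfs_mounted || mount -t debugfs none /sys/kernel/debug
```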

kvm_stat can be used in three different modes:

kvm_stat # update in 1 second intervals

kvm_stat -1 # 1 second snapshot

kvm_stat -l > kvmstats.log # update in 1 second intervals in log format

# can be imported to a spreadsheet

Example 9.1: Typical Output of kvm_stat #

kvm statistics

 efer_reload                 0       0
 exits                11378946  218130
 fpu_reload              62144     152
 halt_exits             414866     100
 halt_wakeup            260358      50
 host_state_reload      539650     249
 hypercalls                  0       0
 insn_emulation        6227331  173067
 insn_emulation_fail         0       0
 invlpg                 227281      47
 io_exits               113148      18
 irq_exits              168474     127
 irq_injections         482804     123
 irq_window              51270      18
 largepages                  0       0
 mmio_exits               6925       0
 mmu_cache_miss          71820      19
 mmu_flooded             35420       9
 mmu_pde_zapped          64763      20
 mmu_pte_updated             0       0
 mmu_pte_write          213782      29
 mmu_recycled                0       0
 mmu_shadow_zapped      128690      17
 mmu_unsync                 46      -1
 nmi_injections              0       0
 nmi_window                  0       0
 pf_fixed              1553821     857
 pf_guest              1018832     562
 remote_tlb_flush       174007      37
 request_irq                 0       0
 signal_exits                0       0
 tlb_flush              394182     148

See http://clalance.blogspot.com/2009/01/kvm-performance-tools.html for further information on how to interpret these values.
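When looking at a snapshot such as Example 9.1, the events with the highest values in the last column are usually the most interesting ones. The following sketch assumes three whitespace-separated columns (event name, total count, and change since the last update), as in the example. top_kvm_events is a hypothetical helper, and other kvm_stat versions may print a different layout.

```shell
#!/bin/sh
# Hypothetical helper: reads kvm_stat style lines ("name total rate") on
# stdin and prints the N events with the highest rate as "rate name".
# N is passed as $1 and defaults to 5. Lines that do not consist of a
# name followed by two integers (such as the title line) are ignored.
top_kvm_events() {
    awk 'NF == 3 && $2 ~ /^[0-9]+$/ && $3 ~ /^-?[0-9]+$/ { print $3, $1 }' |
        sort -k1,1nr | head -n "${1:-5}"
}

# Possible use with a one-second snapshot:
# kvm_stat -1 | top_kvm_events 5
```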