Virtualization Guide

27 Administrative tasks

27.1 The boot loader program

The boot loader controls how the virtualization software boots and runs. You can modify the boot loader properties by using YaST, or by directly editing the boot loader configuration file.

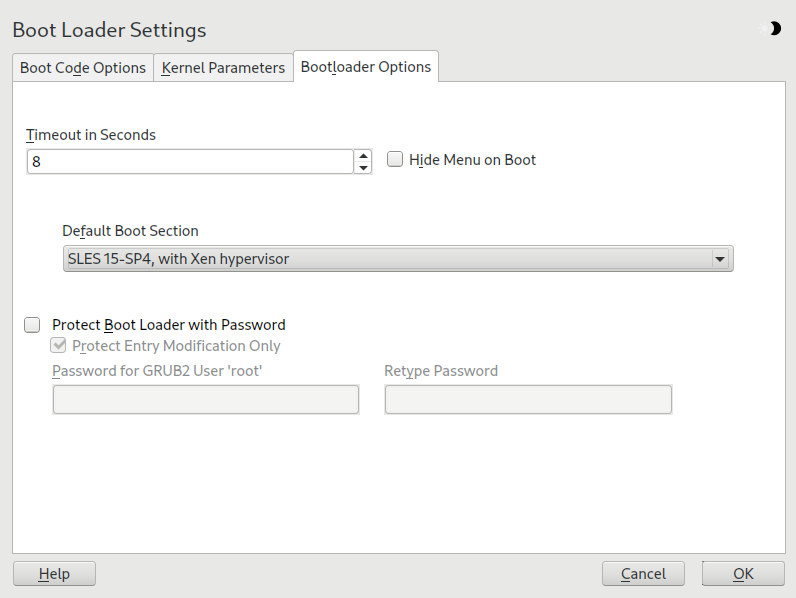

The YaST boot loader program is located at › › . Click the tab and select the line containing the Xen kernel as the .

Figure 27.1: Boot loader settings #

Confirm with . Next time you boot the host, it can provide the Xen virtualization environment.

You can use the Boot Loader program to specify functionality, such as:

- Pass kernel command-line parameters.

- Specify the kernel image and initial RAM disk.

- Select a specific hypervisor.

- Pass additional parameters to the hypervisor. See http://xenbits.xen.org/docs/unstable/misc/xen-command-line.html for the complete list.

You can customize your virtualization environment by editing the /etc/default/grub file. Add the following line to this file: GRUB_CMDLINE_XEN="<boot_parameters>".

Do not forget to run grub2-mkconfig -o /boot/grub2/grub.cfg after editing the file.
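As an illustration, the fragment below sets one hypervisor parameter. dom0_mem is an option from the Xen command-line list referenced above; the value 4096M is only an assumed example and has to be adapted to the host.

```shell
# Fragment of /etc/default/grub (the file uses shell variable syntax).
# Assumption: 4096M is an illustrative value, not a recommendation.
GRUB_CMDLINE_XEN="dom0_mem=4096M,max:4096M"
```

The setting only takes effect after grub2-mkconfig has regenerated the configuration and the host has been rebooted.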

27.2 Sparse image files and disk space

If the host's physical disk runs out of space, a virtual machine using a virtual disk based on a sparse image file cannot write to its disk. Consequently, it reports I/O errors.

If this situation occurs, free up space on the physical disk, remount the virtual machine's file system, and set the file system back to read-write.

To check the actual disk requirements of a sparse image file, use the command du -h <image file>.
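The difference between the apparent size of a sparse file and the space it occupies can be seen on a throwaway file. The sketch below is only a demonstration on a temporary file created with mktemp. It assumes GNU dd and a file system that supports sparse files, and it does not touch any real image.

```shell
# Create a throwaway sparse file and compare its apparent size with the
# space it really occupies on disk.
img=$(mktemp)

# count=0 writes no data; seek=16 only moves the end of the file to 16 MiB.
dd if=/dev/zero of="$img" count=0 bs=1M seek=16 2>/dev/null

apparent_bytes=$(wc -c < "$img" | tr -d ' ')   # size a guest would see
used_kib=$(du -k "$img" | cut -f1)             # space allocated on the host

echo "apparent: $apparent_bytes bytes, allocated: $used_kib KiB"
rm -f "$img"
```

The allocated value is what counts against the free space of the host's physical disk.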

To increase the available space of a sparse image file, first increase the file size and then the file system.

Warning: Back up before resizing

Changing the size of partitions or sparse files always carries a risk of data loss. Do not proceed without a backup.

The image file can be resized online, while the VM Guest is running. Increase the size of a sparse image file with:

> sudo dd if=/dev/zero of=<image file> count=0 bs=1M seek=<new size in MB>

For example, to increase the file /var/lib/xen/images/sles/disk0 to a size of about 16 GB (16000 blocks of 1 MiB), use the command:

> sudo dd if=/dev/zero of=/var/lib/xen/images/sles/disk0 count=0 bs=1M seek=16000

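With bs=1M, the seek value counts blocks of 1 MiB, so seek=16000 gives a file slightly smaller than 16 GiB. If the image has to be an exact number of GiB, the seek value can be computed. gib_to_seek below is a hypothetical helper name used only for this sketch.

```shell
# Convert a target size in GiB into the seek value for dd with bs=1M.
gib_to_seek() {
    echo $(( $1 * 1024 ))
}

gib_to_seek 16   # prints 16384
```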
Note: Increasing non-sparse images

It is also possible to increase the image files of devices that are not sparse files. However, you must know exactly where the previous image ends. Use the seek parameter to point to the end of the image file and use a command similar to the following:

> sudo dd if=/dev/zero of=/var/lib/xen/images/sles/disk0 seek=8000 bs=1M count=2000

Make sure that the seek value is correct. Otherwise, data loss may occur.
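One way to avoid a wrong seek value is to derive it from the current size of the file. The following is a minimal sketch on a throwaway file, not on a real image. It assumes GNU dd, and it rounds the seek value up so that a partial last block would not be overwritten.

```shell
# Derive the seek value from the current size of the image instead of
# typing it by hand. Demonstrated on a temporary file.
img=$(mktemp)
bs=1048576                                   # 1 MiB, matches bs=1M

# Stand-in for an existing non-sparse 8 MiB image.
dd if=/dev/zero of="$img" bs=1M count=8 2>/dev/null

size=$(wc -c < "$img" | tr -d ' ')
# Round up to the next full block.
seek=$(( (size + bs - 1) / bs ))

# Append 2 MiB of zeros starting at the computed offset.
dd if=/dev/zero of="$img" bs=1M seek="$seek" count=2 2>/dev/null

new_size=$(wc -c < "$img" | tr -d ' ')
echo "seek=$seek new_size=$new_size"
rm -f "$img"
```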

If the VM Guest is running during the resize operation, also resize the loop device that provides the image file to the VM Guest. First detect the correct loop device with the command:

> sudo losetup -j /var/lib/xen/images/sles/disk0

Then resize the loop device, for example /dev/loop0, with the following command:

> sudo losetup -c /dev/loop0

Finally, check the size of the block device inside the guest system with the command fdisk -l /dev/xvdb. Replace the device name with that of the disk you enlarged.
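When scripting the loop device step, the device name has to be taken from the losetup -j output. The sketch below assumes the usual one-line format in which the device name comes first, followed by a colon. The sample line is made up, and loopdev_from_line is a hypothetical helper name.

```shell
# Extract the loop device name from one line of `losetup -j` output.
loopdev_from_line() {
    printf '%s\n' "$1" | cut -d: -f1
}

# Made-up sample line for illustration.
sample='/dev/loop0: [0042]:131 (/var/lib/xen/images/sles/disk0)'
loopdev_from_line "$sample"
```

On a real host, the result would then be passed to sudo losetup -c.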

Resizing the file system inside the sparse file requires tools that depend on the file system in use.
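As a rough orientation, the sketch below prints the grow command commonly used for a few file systems. It is not part of the original procedure. grow_hint is a hypothetical helper that only prints a command and changes nothing. If the disk carries a partition table, the partition has to be enlarged before the file system.

```shell
# Print the usual grow command for a few common file systems.
grow_hint() {
    fstype=$1
    device=$2
    mountpoint=$3
    case "$fstype" in
        ext2|ext3|ext4) echo "resize2fs $device" ;;
        xfs)            echo "xfs_growfs $mountpoint" ;;
        btrfs)          echo "btrfs filesystem resize max $mountpoint" ;;
        *)              echo "unknown file system: $fstype" >&2; return 1 ;;
    esac
}

# Device and mount point are placeholders.
grow_hint ext4 /dev/xvdb /mnt
```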

27.3 Migrating Xen VM Guest systems

With Xen it is possible to migrate a VM Guest system from one VM Host Server to another with almost no service interruption. This can be used, for example, to move a busy VM Guest to a VM Host Server that has stronger hardware or is not yet loaded. Or, if a VM Host Server requires servicing, all VM Guest systems running on this machine can be migrated to other machines to avoid interruption of service. These are only two examples; many more reasons may apply to your situation.

Before starting, certain preliminary considerations regarding the VM Host Server should be taken into account:

- All VM Host Server systems should use a similar CPU. The frequency is less important, but they should use the same CPU family. To get more information about the CPU in use, run cat /proc/cpuinfo. Find more details about comparing host CPU features in Section 27.3.1, “Detecting CPU features”.

- All resources that are used by a specific guest system must be available on all involved VM Host Server systems. For example, all used block devices must exist on both VM Host Server systems.

- If the hosts included in the migration process run in different subnets, make sure that either DHCP relay is available to the guests, or for guests with static network configuration, set up the network manually.

- Using special features like PCI Pass-Through may be problematic. Do not implement these when deploying for an environment that should migrate VM Guest systems between different VM Host Server systems.

- For fast migrations, a fast network is mandatory. If possible, use Gigabit Ethernet and fast switches. Deploying VLAN may also help avoid collisions.
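For a first rough comparison of two hosts, the flags line of /proc/cpuinfo can be compared directly. The sketch below assumes that each input file holds the space-separated flag list of one host. The flag lists shown are made up, and missing_flags is a hypothetical helper name.

```shell
# List CPU flags that appear in the first flags file but not in the second.
missing_flags() {
    a=$(mktemp)
    b=$(mktemp)
    tr -s ' ' '\n' < "$1" | grep -v '^$' | sort -u > "$a"
    tr -s ' ' '\n' < "$2" | grep -v '^$' | sort -u > "$b"
    comm -23 "$a" "$b"      # lines that are only in the first file
    rm -f "$a" "$b"
}

# Made-up flag lists for a source and a target host.
src=$(mktemp)
dst=$(mktemp)
echo "fpu sse sse2 avx avx2" > "$src"
echo "fpu sse sse2 avx"      > "$dst"
missing_flags "$src" "$dst"
```

On a real host, such a file could be produced with grep -m1 '^flags' /proc/cpuinfo | cut -d: -f2. A flag reported here is available on the source host but missing on the target.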

27.3.1 Detecting CPU features

By using the cpuid and xen_maskcalc.py tools, you can compare the features of the CPU on the host from which you are migrating the source VM Guest with the features of the CPUs on the target hosts. This way you can better predict whether the guest migrations will be successful.

1. Run the cpuid -1r command on each Dom0 that is supposed to run or receive the migrated VM Guest and capture the output in text files, for example:

   tux@vm_host1 > sudo cpuid -1r > vm_host1.txt
   tux@vm_host2 > sudo cpuid -1r > vm_host2.txt
   tux@vm_host3 > sudo cpuid -1r > vm_host3.txt

2. Copy all the output text files to a host with the xen_maskcalc.py script installed.

3. Run the xen_maskcalc.py script on all output text files:

   > sudo xen_maskcalc.py vm_host1.txt vm_host2.txt vm_host3.txt
   cpuid = [
       "0x00000001:ecx=x00xxxxxx0xxxxxxxxx00xxxxxxxxxxx",
       "0x00000007,0x00:ebx=xxxxxxxxxxxxxxxxxx00x0000x0x0x00"
   ]

4. Copy the output cpuid=[...] configuration snippet into the xl configuration of the migrated guest, domU.cfg, or alternatively into its libvirt XML configuration.

5. Start the source guest with the trimmed CPU configuration. The guest can now only use CPU features that are present on each of the hosts.

27.3.1.1 More information

You can find more details about cpuid at http://etallen.com/cpuid.html.

You can download the latest version of the CPU mask calculator from https://github.com/twizted/xen_maskcalc.

27.3.2 Preparing block devices for migrations

The block devices needed by the VM Guest system must be available on all involved VM Host Server systems. This is done by implementing a specific kind of shared storage that serves as a container for the root file system of the migrated VM Guest system. Common possibilities include:

- iSCSI can be set up to give access to the same block devices from different systems at the same time.

- NFS is a widely used root file system that can easily be accessed from different locations. For more information, see Book “Reference”, Chapter 22 “Sharing file systems with NFS”.

- DRBD can be used if only two VM Host Server systems are involved. This adds extra data security, because the data in use is mirrored over the network.

- SCSI can also be used if the available hardware permits shared access to the same disks.

- NPIV is a special mode to use Fibre Channel disks. However, in this case, all migration hosts must be attached to the same Fibre Channel switch. For more information about NPIV, see Section 25.1, “Mapping physical storage to virtual disks”. Commonly, this works if the Fibre Channel environment supports 4 Gbps or faster connections.

27.3.3 Migrating VM Guest systems

The actual migration of the VM Guest system is done with the command:

> sudo xl migrate <domain_name> <host>

The speed of the migration depends on how fast the memory image can be saved to disk, sent to the new VM Host Server, and loaded there. This means that small VM Guest systems can be migrated faster than large systems with a lot of memory.
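To move all guests off a host, for example before servicing it, the migrate command can be generated for each running guest. The following is a dry-run sketch that only prints commands. It assumes the usual xl list layout with one header line and the guest name in the first column. The sample output and the target host vm_host2 are made up, and migrate_cmds is a hypothetical helper name.

```shell
# Read `xl list` output on stdin and print one migrate command per guest.
# The header line and Domain-0 are skipped. Nothing is executed.
migrate_cmds() {
    target=$1
    awk -v host="$target" \
        'NR > 1 && $1 != "Domain-0" { print "xl migrate " $1 " " host }'
}

# Made-up `xl list` output for illustration.
sample='Name                 ID   Mem VCPUs      State   Time(s)
Domain-0              0  4096     4     r-----    1200.5
alice                 3  2048     2     -b----      35.1
bob                   4  1024     1     -b----      12.0'

printf '%s\n' "$sample" | migrate_cmds vm_host2
```

On a real host, the input would come from sudo xl list, and the printed commands should be reviewed before they are run.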

27.4 Monitoring Xen

When operating many virtual guests, the ability to check the health of all VM Guest systems is indispensable. In addition to the standard system tools, Xen offers several tools to gather information about the system.

Tip: Monitoring the VM Host Server

Basic monitoring of the VM Host Server (I/O and CPU) is available via the Virtual Machine Manager. Refer to Section 10.8.1, “Monitoring with Virtual Machine Manager” for details.

27.4.1 Monitor Xen with xentop

The preferred terminal application to gather information about the Xen virtual environment is xentop. Be aware that this tool needs a rather wide terminal. Otherwise, it inserts line breaks into the display.

xentop has several command keys that can give you more information about the system that is monitored. For example:

- D

Change the delay between the refreshes of the screen.

- N

Also display network statistics. Note that only standard configurations are displayed. If you use a special configuration like a routed network, no network is displayed.

- B

Display the respective block devices and their cumulated usage count.

For more information about xentop, see the manual page man 1 xentop.

Tip: virt-top

libvirt offers the hypervisor-agnostic tool virt-top, which is recommended for monitoring VM Guests. See Section 10.8.2, “Monitoring with virt-top” for details.

27.4.2 Additional tools

Many system tools also help with monitoring or debugging a running openSUSE system. Many of these are covered in Book “System Analysis and Tuning Guide”, Chapter 2 “System monitoring utilities”. The following tools are especially useful for monitoring a virtualization environment:

- ip

The command-line utility ip may be used to monitor arbitrary network interfaces. This is especially useful if you have set up a routed or masqueraded network. To monitor a network interface with the name alice.0, run the following command:

> watch ip -s link show alice.0

- bridge

In a standard setup, all the Xen VM Guest systems are attached to a virtual network bridge. bridge allows you to determine the connection between the bridge and the virtual network adapter in the VM Guest system. For example, the output of bridge link may look like the following:

2: eth0 state DOWN : <NO-CARRIER, ...,UP> mtu 1500 master br0
8: vnet0 state UNKNOWN : <BROADCAST, ...,LOWER_UP> mtu 1500 master virbr0 \
  state forwarding priority 32 cost 100

This shows that there are two virtual bridges defined on the system. One is connected to the physical Ethernet device eth0, the other one is connected to a VLAN interface vnet0.

- iptables-save

Especially when using masquerade networks, or if several Ethernet interfaces are set up together with a firewall setup, it may be helpful to check the current firewall rules.

The command iptables may be used to check all the different firewall settings. To list all the rules of a chain, or even of the complete setup, you may use the commands iptables-save or iptables -S.

27.5 Providing host information for VM Guest systems

In a standard Xen environment, the VM Guest systems have only limited information about the VM Host Server system they are running on. If a guest should know more about the VM Host Server it runs on, vhostmd can provide more information to selected guests. To set up your system to run vhostmd, proceed as follows:

1. Install the package vhostmd on the VM Host Server.

2. To add or remove metric sections from the configuration, edit the file /etc/vhostmd/vhostmd.conf. However, the default works well.

3. Check the validity of the vhostmd.conf configuration file with the command:

   > cd /etc/vhostmd
   > xmllint --postvalid --noout vhostmd.conf

4. Start the vhostmd daemon with the command sudo systemctl start vhostmd.

   If vhostmd should be started automatically during start-up of the system, run the command:

   > sudo systemctl enable vhostmd

5. Attach the image file /dev/shm/vhostmd0 to the VM Guest system named alice with the command:

   > xl block-attach alice /dev/shm/vhostmd0,,xvdb,ro

6. Log in to the VM Guest system.

7. Install the client package vm-dump-metrics.

8. Run the command vm-dump-metrics. To save the result to a file, use the option -d <filename>.

The output of vm-dump-metrics is XML. The respective metric entries follow the DTD /etc/vhostmd/metric.dtd.

For more information, see the manual page man 8 vhostmd and the file /usr/share/doc/vhostmd/README on the VM Host Server system. On the guest, see the manual page man 1 vm-dump-metrics.